Research

My research field is natural language processing (NLP): in my research group, we develop machine learning models and algorithms that analyse language written by humans.

I have worked on a variety of research problems within the field of NLP, and my interests are quite broad. A common thread in the various research projects is that they relate to high-level questions such as: What information does this text express? How can the computer determine what this text means? To make progress on these high-level goals, we need to be cross-disciplinary: we apply methods and models not only from machine learning but also from fields such as linguistics, information theory, statistics, and algorithms.

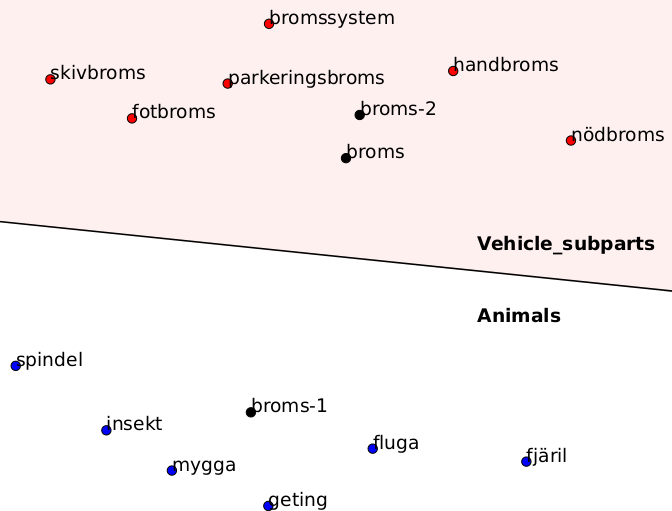

Most of the current research in our group involves questions around learned representations of words and text. Is it possible to improve the representations by grounding them in other information sources? How are NLP systems influenced by the information in the representations? For instance, we have tried to use visual information when training text representations and we have developed different training methods for visual supervision. To investigate how text representations are affected by the visual training, we designed experimental protocols to measure these effects. In addition to the visual modality, we are interested in representation models that combine text-based supervision with different types of structured knowledge, and we developed a number of methods for injecting such knowledge into word representations, either as a post-processing step or during training. In a recently started project, we will investigate how text-based causal inference methods are influenced by text representations, and develop new methods to control what information is expressed by representations.

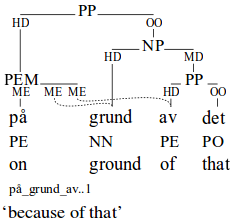

In my earlier research, until around 2013, many of the research projects involved defining some sort of structured representation of the meaning content of a text, and the job of the machine would then be to extract this type of structure automatically from the text. Most of my work as a PhD student focused on machine learning models and algorithms for extracting different types of event representations: for instance, we developed systems that extract semantic roles and semantic frames. I also developed algorithms for integrating dependency parsing and semantic role labeling. Similar methods could also be applied to extracting opinion structures. My first NLP research project was on the extraction of event structures and temporal ordering in traffic accident reports.

Research group

See our group's page.

Current PhD students

- Mehrdad Farahani: works on representation learning and explaining retrieval-augmented models.

- Nicolas Audinet de Pieuchon: works on representations for text-based causal inference.

- Ehsan Doostmohammadi (as co-advisor with Marco Kuhlmann): works on multimodal models.

- Denitsa Saynova (as co-advisor with Moa Johansson): works on applications of NLP models in social science.

- Livia Qian (as co-advisor with Gabriel Skantze): works on conversational representation models.

- Mengyu Huang (as co-advisor with Selpi): works on multimodal learning.

Past PhD students

- Olof Mogren, now at RISE [thesis]

- Luis Nieto Piña, now at AFRY [thesis]

- Lovisa Hagström, now at Eghed [thesis]

- Tobias Norlund, now at AI Sweden [licentiate thesis]

- Mikael Kågebäck, now at Sleep Cycle (as co-advisor with Devdatt Dubhashi) [thesis]

- Prasanth Kolachina (as co-advisor with Aarne Ranta) [thesis]

- Felix Morger (as co-advisor with Lars Borin and Aleksandrs Berdicevskis) [thesis]

Projects

- 2023–2027: Countering bias in AI methods in the social sciences. This project will investigate how text representations influence causal estimates.

  Funded by the Wallenberg AI, Autonomous Systems and Software Program (WASP-HS).

- 2022–2026: Representation learning for conversational AI. This project will investigate how general representations of spoken conversation can be learned in a self-supervised fashion.

  Funded by the Wallenberg AI, Autonomous Systems and Software Program (WASP-AI).

- 2020–2024: Interpreting and grounding pre-trained representations for natural language processing. In this project, we will develop new methods for the interpretation, grounding, and integration of deep contextualized representations of language, and evaluate the usefulness of these methods in downstream applications.

  Funded by the Wallenberg AI, Autonomous Systems and Software Program (WASP-AI).

Past projects

- 2014–2016: KOALA – Korp's linguistic annotations. The project Koala – Korp's linguistic annotations – is aimed at developing an infrastructure for text-based research with high-quality annotations.

  Funded by Riksbankens Jubileumsfond.

- 2014–2018: Corpus-driven induction of linguistic knowledge. This project explores synergies between knowledge-based and distributional representations of semantics.

  Funded by the Swedish Research Council (VR).

- 2013–2017: Towards a knowledge-based culturomics. This program focuses on extracting and correlating information from large volumes of text using a combination of knowledge-based and statistical methods. A central aim is to develop methodology and applications in support of research in disciplines where text is an important primary research data source, such as the humanities and social sciences.

  Funded by the Swedish Research Council (VR).