The term GF is used for different things:

This tutorial is primarily about the GF program and the GF programming language. It will guide you

A grammar is a definition of a language. From this definition, different language processing components can be derived:

A GF grammar can be seen as a declarative program from which these processing tasks can be automatically derived. In addition, many other tasks are readily available for GF grammars:

A typical GF application is based on a multilingual grammar involving translation in a special domain. Existing applications of this idea include

The specialization of a grammar to a domain makes it possible to obtain much better translations than an unrestricted machine translation system can. This is due to the well-defined semantics of such domains. Grammars having this character are called application grammars. They differ from most grammars written by linguists precisely because they are multilingual and domain-specific.

However, there is another kind of grammar, which we call resource grammars. These are large, comprehensive grammars that can be used in any domain. The GF Resource Grammar Library has resource grammars for 10 languages. These grammars can be used as libraries to define application grammars. In this way, it is possible to write a high-quality grammar without knowing about linguistics: in general, writing an application grammar by using the resource library just requires practical knowledge of the target language, and all theoretical knowledge about its grammar is provided by the libraries.

This tutorial is mainly for programmers who want to learn to write application grammars. It will go through GF's programming concepts without going too deep into linguistics. Thus it should be accessible to anyone who has some previous programming experience.

A separate document has been written on how to write resource grammars: the Resource HOWTO. In this tutorial, we will just cover the programming concepts that are used for solving linguistic problems in the resource grammars.

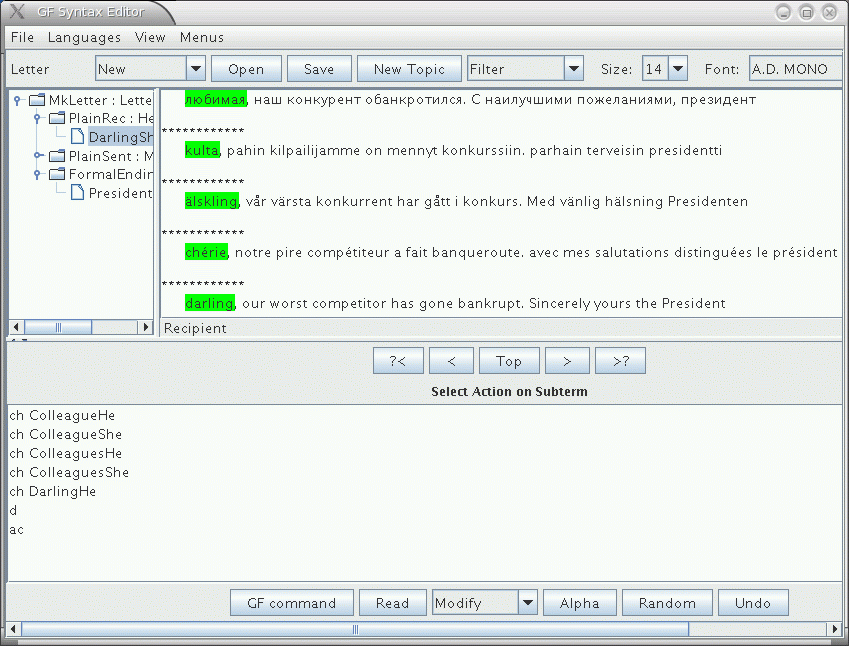

The easiest way to use GF is probably via the interactive syntax editor. Its use does not require any knowledge of the GF formalism. There is a separate Editor User Manual by Janna Khegai, covering the use of the editor. The editor is also a platform for many kinds of GF applications, implementing the slogan

write a document in a language you don't know, while seeing it in a language you know.

The tutorial gives a hands-on introduction to grammar writing. We start by building a small grammar for the domain of food: in this grammar, you can say things like

this Italian cheese is delicious

in English and Italian.

The first English grammar, food.cf, is written in a context-free notation (also known as BNF). The BNF format is often a good starting point for GF grammar development, because it is simple and widely used. However, the BNF format is not good for multilingual grammars. While it is possible to "translate" by just changing the words contained in a BNF grammar to words of some other language, proper translation usually involves more.

For instance, the order of words may have to be changed:

Italian cheese ===> formaggio italiano

The full GF grammar format is designed to support such changes, by separating the abstract syntax (the logical structure) from the concrete syntax (the sequence of words) of expressions.

It is not only words and word order that make languages different. Words can have different forms, and which forms they have varies from language to language. For instance, Italian adjectives usually have four forms where English has just one:

delicious (wine, wines, pizza, pizzas)

vino delizioso, vini deliziosi, pizza deliziosa, pizze deliziose

The morphology of a language describes the forms of its words. While the complete description of morphology belongs to resource grammars, this tutorial will explain the programming concepts involved in morphology. This will moreover make it possible to grow the fragment covered by the food example. The tutorial will in fact build a miniature resource grammar in order to give an introduction to linguistically oriented grammar writing.

Thus it is by elaborating the initial food.cf example that the tutorial makes a guided tour through all concepts of GF. While the constructs of the GF language are the main focus, the commands of the GF system are also introduced as they are needed.

To learn how to write GF grammars is not the only goal of this tutorial. We will also explain the most important commands of the GF system. With these commands, simple applications of grammars, such as translation and quiz systems, can be built simply by writing scripts for the system.

More complicated applications, such as natural-language interfaces and dialogue systems, also require programming in some general-purpose language. Thus we will briefly explain how GF grammars are used as components of Haskell programs. Chapters on using them in Java and Javascript programs are forthcoming; a comprehensive manual on GF embedded in Java, by Björn Bringert, is available at http://www.cs.chalmers.se/~bringert/gf/gf-java.html.

The GF program is open-source free software, which you can download via the GF Homepage:

http://www.cs.chalmers.se/~aarne/GF

There you can download

If you want to compile GF from source, you need a Haskell compiler. To compile the interactive editor, you also need a Java compiler. But normally you don't have to compile, and you definitely don't need to know Haskell or Java to use GF.

We assume the availability of a Unix shell. Linux and Mac OS X users have it automatically, the latter under the name "Terminal". Windows users are recommended to install Cygwin, the free Unix shell for Windows.

To start the GF program, assuming you have installed it, just type

% gf

in the shell.

You will see GF's welcome message and the prompt >.

The command

> help

will give you a list of available commands.

As a common convention in this Tutorial, we will use

% as a prompt that marks system commands

> as a prompt that marks GF commands

Thus you should not type these prompts, but only the lines that follow them.

Now you are ready to try out your first grammar. We start with one that is not written in the GF language, but in the much more common BNF notation (Backus-Naur Form). The GF program understands a variant of this notation and translates it internally to GF's own representation.

To get started, type (or copy) the following lines into a file named food.cf:

    Is.        S       ::= Item "is" Quality ;
    That.      Item    ::= "that" Kind ;
    This.      Item    ::= "this" Kind ;
    QKind.     Kind    ::= Quality Kind ;
    Cheese.    Kind    ::= "cheese" ;
    Fish.      Kind    ::= "fish" ;
    Wine.      Kind    ::= "wine" ;
    Italian.   Quality ::= "Italian" ;
    Boring.    Quality ::= "boring" ;
    Delicious. Quality ::= "delicious" ;
    Expensive. Quality ::= "expensive" ;
    Fresh.     Quality ::= "fresh" ;
    Very.      Quality ::= "very" Quality ;
    Warm.      Quality ::= "warm" ;

For those who know ordinary BNF, the notation we use includes one extra element: a label appearing as the first element of each rule and terminated by a full stop.

The grammar we wrote defines a set of phrases usable for speaking about food.

It builds sentences (S) by assigning a Quality to an Item. Items are built from Kinds by prepending the word "this" or "that". Kinds are either atomic, such as "cheese" and "wine", or formed by prepending a Quality to a Kind. A Quality is either atomic, such as "Italian" and "boring", or built from another Quality by prepending "very". Those familiar with the context-free grammar notation will notice that, for instance, the following sentence can be built using this grammar:

this delicious Italian wine is very very expensive

The first GF command needed when using a grammar is to import it. The command has a long name, import, and a short name, i. You can type either

> import food.cf

or

> i food.cf

to get the same effect. The effect is that the GF program compiles your grammar into an internal representation, and shows a new prompt when it is ready. It will also show how much CPU time is consumed:

    > i food.cf
    - parsing cf food.cf 12 msec
    16 msec
    >

You can now use GF for parsing:

    > parse "this cheese is delicious"
    Is (This Cheese) Delicious
    > p "that wine is very very Italian"
    Is (That Wine) (Very (Very Italian))

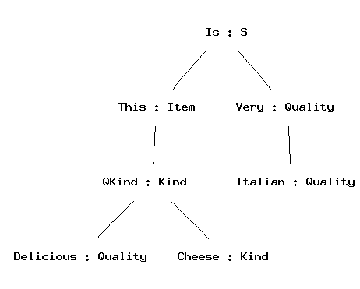

The parse (= p) command takes a string (in double quotes) and returns an abstract syntax tree: the thing beginning with Is. Trees are built from the rule labels given in the grammar, and record the ways in which the rules are used to produce the strings. A tree is, in general, easier than a string for a machine to understand and to process further.

Strings that return a tree when parsed do so by virtue of the grammar you imported. Try parsing something else, and you will fail:

    > p "hello world"
    Unknown words: hello world

Exercise. Extend the grammar food.cf by ten new food kinds and qualities, and run the parser with new kinds of examples.

Exercise. Add a rule that enables questions of the form is this cheese Italian.

Exercise. Add the rule

IsVery. S ::= Item "is" "very" Quality ;

and see what happens when parsing this wine is very very Italian.

You have just made the grammar ambiguous: it now assigns several trees to some strings.

Exercise. Modify the grammar so that at most one Quality may attach to a given Kind. Thus boring Italian fish will no longer be recognized.

You can also use GF for linearizing (linearize = l). This is the inverse of parsing, taking trees into strings:

    > linearize Is (That Wine) Warm
    that wine is warm

What is the use of this? Typically, you would not type in a tree at the GF prompt yourself. The utility of linearization comes from the fact that you can obtain a tree from somewhere else. One way to do so is random generation (generate_random = gr):

    > generate_random
    Is (This (QKind Italian Fish)) Fresh

Now you can copy the tree and paste it into the linearize command. Or, more conveniently, feed random generation into linearization by using a pipe:

    > gr | l
    this Italian fish is fresh

Pipes in GF work much the same way as Unix pipes: they feed the output of one command into another command as its input.
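The gr | l pipe can be pictured as composing two functions: one that builds a random tree and one that linearizes it. The Python sketch below models this for food.cf. The names, the 0.3 branching probability and the depth bound are our own assumptions, and GF's generator may choose trees differently.

```python
import random

# Toy model of "gr | l" for food.cf (illustration only). Trees are nested
# tuples of rule labels, e.g. ("Is", ("This", ("Cheese",)), ("Fresh",)).

KINDS = ["Cheese", "Fish", "Wine"]
QUALITIES = ["Italian", "Boring", "Delicious", "Expensive", "Fresh", "Warm"]


def random_quality(rng, depth):
    # Quality: an atomic quality, or Very applied to a smaller Quality.
    if depth > 1 and rng.random() < 0.3:
        return ("Very", random_quality(rng, depth - 1))
    return (rng.choice(QUALITIES),)


def random_kind(rng, depth):
    # Kind: an atomic kind, or QKind applied to a Quality and a smaller Kind.
    if depth > 1 and rng.random() < 0.3:
        return ("QKind", random_quality(rng, depth - 1), random_kind(rng, depth - 1))
    return (rng.choice(KINDS),)


def generate_random(rng, depth=4):
    # The depth bound guarantees that no tree is deeper than `depth`.
    item = (rng.choice(["This", "That"]), random_kind(rng, depth - 2))
    return ("Is", item, random_quality(rng, depth - 1))


def tree_depth(tree):
    head, *args = tree
    return 1 + max((tree_depth(a) for a in args), default=0)


def linearize(tree):
    # English linearization of each label, following the rules of food.cf.
    head, *args = tree
    words = [linearize(a) for a in args]
    if head == "Is":
        return f"{words[0]} is {words[1]}"
    if head in ("This", "That"):
        return f"{head.lower()} {words[0]}"
    if head == "Very":
        return f"very {words[0]}"
    if head == "QKind":
        return f"{words[0]} {words[1]}"
    return head if head == "Italian" else head.lower()


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        tree = generate_random(rng)  # plays the role of gr
        print(linearize(tree))       # plays the role of l, fed by the "pipe"
```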

The gibberish code with parentheses returned by the parser does not look like a tree. Why is it called one? From the abstract mathematical point of view, trees are a data structure that represents nesting: trees are branching entities, and the branches are themselves trees. Parentheses give a linear representation of trees, useful for the computer. But the human eye may prefer to see a visualization; for this purpose, GF provides the command visualize_tree = vt, to which parsing (and any other tree-producing command) can be piped:

> parse "this delicious cheese is very Italian" | vt

This command uses the programs Graphviz and Ghostview, which you might not have, but which are freely available on the web.

Random generation is a good way to test a grammar; it can also be fun. So you may want to generate ten strings with one and the same command:

    > gr -number=10 | l
    that wine is boring
    that fresh cheese is fresh
    that cheese is very boring
    this cheese is Italian
    that expensive cheese is expensive
    that fish is fresh
    that wine is very Italian
    this wine is Italian
    this cheese is boring
    this fish is boring

To generate all sentences that a grammar can generate, use the command generate_trees = gt.

    > generate_trees | l
    that cheese is very Italian
    that cheese is very boring
    that cheese is very delicious
    that cheese is very expensive
    that cheese is very fresh
    ...
    this wine is expensive
    this wine is fresh
    this wine is warm

You get quite a few trees but not all of them: only those up to a given depth. To see how you can get more, use the help = h command:

> help gt

Exercise. If the command gt generated all trees in your grammar, it would never terminate. Why?

Exercise. Measure how many trees the grammar gives with depths 4 and 5, respectively. You can use the Unix word count command wc to count lines.

Hint. You can pipe the output of a GF command into a Unix command by using the escape ?, as follows:

> generate_trees | ? wc

A pipe of GF commands can have any length, but the "output type" (either string or tree) of one command must always match the "input type" of the next command.

The intermediate results in a pipe can be observed by adding the tracing flag -tr to each command whose output you want to see:

    > gr -tr | l -tr | p
    Is (This Cheese) Boring
    this cheese is boring
    Is (This Cheese) Boring

This facility is good for test purposes: for instance, you may want to see if a grammar is ambiguous, i.e. contains strings that can be parsed in more than one way.

Exercise. Extend the grammar food.cf so that it produces ambiguous strings, and try out the ambiguity test.

To save the outputs of GF commands into a file, you can pipe them to the write_file = wf command:

> gr -number=10 | l | write_file exx.tmp

You can read the file back into GF with the read_file = rf command:

> read_file exx.tmp | p -lines

Notice the flag -lines given to the parsing command. This flag tells GF to parse each line of the file separately. Without the flag, the grammar could not recognize the string in the file, because it is not a sentence but a sequence of ten sentences.

To see GF's internal representation of a grammar that you have imported, you can give the command print_grammar = pg:

> print_grammar

The output is quite unreadable at this stage, and you may feel happy that you did not need to write the grammar in that notation, but that the GF grammar compiler produced it.

We will now start demonstrating how GF's own notation gives you much more expressive power than the .cf format. We will introduce the .gf format by presenting another way of defining the same grammar as in food.cf. Then we will show how the full GF grammar format enables you to do things that are not possible in the context-free format.

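A GF grammar consists of two main parts: the abstract syntax, which defines what syntax trees there are, and the concrete syntax, which defines how trees are linearized into strings.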

The context-free format fuses these two things together, but it is always possible to take them apart. For instance, the sentence formation rule

Is. S ::= Item "is" Quality ;

is interpreted as the following pair of GF rules:

    fun Is : Item -> Quality -> S ;
    lin Is item quality = {s = item.s ++ "is" ++ quality.s} ;

The former rule, with the keyword fun, belongs to the abstract syntax. It defines the function Is, which constructs syntax trees of the form (Is item quality).

The latter rule, with the keyword lin, belongs to the concrete syntax. It defines the linearization function for syntax trees of the form (Is item quality).

Rules in a GF grammar are called judgements, and the keywords fun and lin are used for distinguishing between two judgement forms. Here is a summary of the most important judgement forms:

In the abstract syntax:

| form | reading |
|---|---|
| cat C | C is a category |
| fun f : A | f is a function of type A |

In the concrete syntax:

| form | reading |
|---|---|
| lincat C = T | category C has linearization type T |
| lin f = t | function f has linearization t |

We return to the precise meanings of these judgement forms later. First we will look at how judgements are grouped into modules, and show how the food grammar is expressed by using modules and judgements.

A GF grammar consists of modules, into which judgements are grouped. The most important module forms are

abstract A = M, abstract syntax A with judgements in the module body M.

concrete C of A = M, concrete syntax C of the abstract syntax A, with judgements in the module body M.

The nonterminals of a context-free grammar, i.e. categories, are called basic types in the type system of GF. In addition to them, there are function types such as

Item -> Quality -> S

This type is read "a function from items and qualities to sentences". The last type in the arrow-separated sequence is the value type of the function type; the earlier types are its argument types.

The linearization type of a category is a record type, with zero or more fields of different types. The simplest record type used for linearization in GF is

{s : Str}

which has one field, with label s and type Str.

Examples of records of this type are

{s = "foo"}

{s = "hello" ++ "world"}

Whenever a record r of type {s : Str} is given, r.s is an object of type Str. This is a special case of the projection rule, allowing the extraction of fields from a record:

if r : { ... p : T ... } then r.p : T

The type Str is really the type of token lists, but most of the time one can conveniently think of it as the type of strings, denoted by string literals in double quotes.

Notice that

    "hello world"

is not recommended as an expression of type Str. It denotes a token with a space in it, and will usually not work with the lexical analysis that precedes parsing. A shorthand exemplified by

    ["hello world and people"] === "hello" ++ "world" ++ "and" ++ "people"

can be used for lists of tokens. The expression

    []

denotes the empty token list.
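The difference between one token containing a space and a list of tokens can be modelled in Python by representing Str as a tuple of tokens. This is a hypothetical model for illustration only: tok, concat, bracket and EMPTY stand in for string literals, ++, the ["..."] shorthand and [], and say nothing about how GF represents Str internally.

```python
# Toy model of GF's Str as a tuple of tokens (illustration only).

EMPTY = ()  # models [], the empty token list


def tok(literal):
    # A string literal such as "hello" denotes exactly one token,
    # even if the literal happens to contain a space.
    return (literal,)


def concat(*parts):
    # Models ++ : concatenation of token lists.
    result = EMPTY
    for part in parts:
        result = result + part
    return result


def bracket(text):
    # Models the shorthand ["hello world and people"]: one token per word.
    return tuple(text.split())


if __name__ == "__main__":
    print(bracket("hello world and people"))  # ('hello', 'world', 'and', 'people')
    print(tok("hello world"))                 # ('hello world',)  i.e. a single token
```

In this model, a lexer that splits its input at spaces could never produce the single token 'hello world', which is why such literals tend to break parsing.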

To express the abstract syntax of food.cf in a file Food.gf, we write two kinds of judgements: for each category, a cat judgement, and for each rule, a fun judgement, with the type formed from the nonterminals of the rule.

    abstract Food = {
      cat
        S ; Item ; Kind ; Quality ;
      fun
        Is : Item -> Quality -> S ;
        This, That : Kind -> Item ;
        QKind : Quality -> Kind -> Kind ;
        Wine, Cheese, Fish : Kind ;
        Very : Quality -> Quality ;
        Fresh, Warm, Italian, Expensive, Delicious, Boring : Quality ;
    }

Notice the use of shorthands permitting the sharing of the keyword in subsequent judgements,

    cat S ; Item ;  ===  cat S ; cat Item ;

and of the type in subsequent fun judgements,

    fun Wine, Fish : Kind ;  ===
    fun Wine : Kind ; Fish : Kind ;  ===
    fun Wine : Kind ; fun Fish : Kind ;

The order of judgements in a module is free.

Exercise. Extend the abstract syntax Food with ten new kinds and qualities, and with questions of the form is this wine Italian.

Each category introduced in Food.gf is given a lincat rule, and each function is given a lin rule. Similar shorthands apply as in abstract modules.

    concrete FoodEng of Food = {
      lincat
        S, Item, Kind, Quality = {s : Str} ;
      lin
        Is item quality = {s = item.s ++ "is" ++ quality.s} ;
        This kind = {s = "this" ++ kind.s} ;
        That kind = {s = "that" ++ kind.s} ;
        QKind quality kind = {s = quality.s ++ kind.s} ;
        Wine = {s = "wine"} ;
        Cheese = {s = "cheese"} ;
        Fish = {s = "fish"} ;
        Very quality = {s = "very" ++ quality.s} ;
        Fresh = {s = "fresh"} ;
        Warm = {s = "warm"} ;
        Italian = {s = "Italian"} ;
        Expensive = {s = "expensive"} ;
        Delicious = {s = "delicious"} ;
        Boring = {s = "boring"} ;
    }

Exercise. Extend the concrete syntax FoodEng so that it matches the abstract syntax defined in the exercise of the previous section. What happens if the concrete syntax lacks some of the new functions?

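GF uses suffixes to recognize different file formats. The most important ones are .gf for source files, where the module name plus .gf gives the file name (e.g. the module Food is in Food.gf), and .gfc for target files: each module is compiled into a .gfc file.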

Import FoodEng.gf and see what happens:

    > i FoodEng.gf
    - compiling Food.gf... wrote file Food.gfc 16 msec
    - compiling FoodEng.gf... wrote file FoodEng.gfc 20 msec

The GF program does not only read the file FoodEng.gf, but also all other files that it depends on, in this case Food.gf. For each file that is compiled, a .gfc file is generated. The GFC format (="GF Canonical") is the "machine code" of GF, which is faster to process than GF source files. When reading a module, GF decides whether to use an existing .gfc file or to generate a new one, by looking at modification times.
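The modification-time check can be sketched as a make-style rule. The Python function below is a simplified assumption, not GF's actual logic: needs_recompile is a hypothetical name, and it looks at a single source file only, ignoring the modules that the file depends on.

```python
import os
import tempfile


def needs_recompile(source_path, gfc_path):
    """Return True if gfc_path is missing or older than source_path.

    Simplified, make-style sketch (an assumption, not GF's code):
    dependencies between modules are not taken into account.
    """
    if not os.path.exists(gfc_path):
        return True
    return os.path.getmtime(gfc_path) < os.path.getmtime(source_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as d:
        src = os.path.join(d, "Food.gf")
        gfc = os.path.join(d, "Food.gfc")
        open(src, "w").close()
        print(needs_recompile(src, gfc))  # True: there is no Food.gfc yet
        open(gfc, "w").close()
        os.utime(src, (1000, 1000))       # pretend the source is old...
        os.utime(gfc, (2000, 2000))       # ...and the .gfc file is newer
        print(needs_recompile(src, gfc))  # False: the .gfc file can be reused
```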

Exercise. What happens when you import FoodEng.gf for a second time? Try this in different situations: after the command empty (e), which clears the memory of GF; after making a small change in FoodEng.gf, be it only an added space; and after making a change in Food.gf.

The main advantage of separating abstract from concrete syntax is that one abstract syntax can be equipped with many concrete syntaxes. A system with this property is called a multilingual grammar.

Multilingual grammars can be used for applications such as translation. Let us build an Italian concrete syntax for Food and then test the resulting multilingual grammar.

    concrete FoodIta of Food = {
      lincat
        S, Item, Kind, Quality = {s : Str} ;
      lin
        Is item quality = {s = item.s ++ "è" ++ quality.s} ;
        This kind = {s = "questo" ++ kind.s} ;
        That kind = {s = "quello" ++ kind.s} ;
        QKind quality kind = {s = kind.s ++ quality.s} ;
        Wine = {s = "vino"} ;
        Cheese = {s = "formaggio"} ;
        Fish = {s = "pesce"} ;
        Very quality = {s = "molto" ++ quality.s} ;
        Fresh = {s = "fresco"} ;
        Warm = {s = "caldo"} ;
        Italian = {s = "italiano"} ;
        Expensive = {s = "caro"} ;
        Delicious = {s = "delizioso"} ;
        Boring = {s = "noioso"} ;
    }

Exercise. Write a concrete syntax of Food for some other language. You will probably end up with grammatically incorrect output, but don't worry about this yet.

Exercise. If you have written Food for German, Swedish, or some other language, test with random or exhaustive generation which constructs come out incorrect, and prepare a list of those that cannot be fixed with the currently available fragment of GF.

Import the two grammars in the same GF session.

    > i FoodEng.gf
    > i FoodIta.gf

Try generation now:

    > gr | l
    quello formaggio molto noioso è italiano
    > gr | l -lang=FoodEng
    this fish is warm

Translate by using a pipe:

    > p -lang=FoodEng "this cheese is very delicious" | l -lang=FoodIta
    questo formaggio è molto delizioso
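The core idea of a multilingual grammar, one abstract tree with several linearizations, can be modelled in a few lines of Python. In this sketch (not GF's implementation; ENG, ITA and linearize are hypothetical names), each concrete syntax is a dictionary from abstract function names to either a word or a function of the arguments' linearizations, transcribed from FoodEng and FoodIta. Note how QKind puts the quality first in ENG and last in ITA.

```python
# One abstract syntax, two concrete syntaxes: a toy Python model of
# FoodEng and FoodIta (illustration only). Trees are nested tuples.

ENG = {
    "Is": lambda item, quality: f"{item} is {quality}",
    "This": lambda kind: f"this {kind}",
    "That": lambda kind: f"that {kind}",
    "QKind": lambda quality, kind: f"{quality} {kind}",  # quality before kind
    "Very": lambda quality: f"very {quality}",
    "Wine": "wine", "Cheese": "cheese", "Fish": "fish",
    "Fresh": "fresh", "Warm": "warm", "Italian": "Italian",
    "Expensive": "expensive", "Delicious": "delicious", "Boring": "boring",
}

ITA = {
    "Is": lambda item, quality: f"{item} è {quality}",
    "This": lambda kind: f"questo {kind}",
    "That": lambda kind: f"quello {kind}",
    "QKind": lambda quality, kind: f"{kind} {quality}",  # kind before quality
    "Very": lambda quality: f"molto {quality}",
    "Wine": "vino", "Cheese": "formaggio", "Fish": "pesce",
    "Fresh": "fresco", "Warm": "caldo", "Italian": "italiano",
    "Expensive": "caro", "Delicious": "delizioso", "Boring": "noioso",
}


def linearize(concrete, tree):
    # Linearize the arguments first, then apply the rule for the head.
    head, *args = tree
    rule = concrete[head]
    if not args:
        return rule
    return rule(*(linearize(concrete, a) for a in args))


if __name__ == "__main__":
    tree = ("Is", ("This", ("QKind", ("Italian",), ("Cheese",))), ("Delicious",))
    print(linearize(ENG, tree))  # this Italian cheese is delicious
    print(linearize(ITA, tree))  # questo formaggio italiano è delizioso
```

Translation as in the pipe above is parsing with one concrete syntax followed by linearization with another; only the linearization half is modelled here.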

Generate a multilingual treebank, i.e. a set of trees with their translations in different languages:

    > gr -number=2 | tree_bank
    Is (That Cheese) (Very Boring)
    quello formaggio è molto noioso
    that cheese is very boring

    Is (That Cheese) Fresh
    quello formaggio è fresco
    that cheese is fresh

The lang flag tells GF which concrete syntax to use in parsing and linearization. By default, the flag is set to the last-imported grammar. To see what grammars are in scope and which is the main one, use the command print_options = po:

    > print_options
    main abstract : Food
    main concrete : FoodIta
    actual concretes : FoodIta FoodEng

You can change the main grammar by the command change_main = cm:

    > change_main FoodEng
    main abstract : Food
    main concrete : FoodEng
    actual concretes : FoodIta FoodEng

If translation is what you want to do with a set of grammars, a convenient way to do it is to open a translation_session = ts. In this session, you can translate between all the languages that are in scope. A dot . terminates the translation session.

    > ts
    trans> that very warm cheese is boring
    quello formaggio molto caldo è noioso
    that very warm cheese is boring
    trans> questo vino molto italiano è molto delizioso
    questo vino molto italiano è molto delizioso
    this very Italian wine is very delicious
    trans> .
    >

A translation quiz is a simple language exercise that can be automatically generated from a multilingual grammar. The system generates a set of random sentences, displays them in one language, and checks the user's answer given in another language. The command translation_quiz = tq runs such a quiz in a subshell of GF.

    > translation_quiz FoodEng FoodIta
    Welcome to GF Translation Quiz.
    The quiz is over when you have done at least 10 examples
    with at least 75 % success.
    You can interrupt the quiz by entering a line consisting of a dot ('.').

    this fish is warm
    questo pesce è caldo
    > Yes.
    Score 1/1

    this cheese is Italian
    questo formaggio è noioso
    > No, not questo formaggio è noioso, but
    questo formaggio è italiano
    Score 1/2

    this fish is expensive

You can also generate a list of translation exercises and save it in a file for later use, by the command translation_list = tl:

> translation_list -number=25 FoodEng FoodIta | write_file transl.txt

The number flag gives the number of sentences generated.

The module system of GF makes it possible to extend a grammar in different ways. The syntax of extension is shown by the following example. We extend Food by adding a category of questions and two new functions.

    abstract Morefood = Food ** {
      cat
        Question ;
      fun
        QIs : Item -> Quality -> Question ;
        Pizza : Kind ;
    }

Parallel to the abstract syntax, extensions can be built for concrete syntaxes:

    concrete MorefoodEng of Morefood = FoodEng ** {
      lincat
        Question = {s : Str} ;
      lin
        QIs item quality = {s = "is" ++ item.s ++ quality.s} ;
        Pizza = {s = "pizza"} ;
    }

The effect of extension is that all of the contents of the extended and extending module are put together. We also say that the new module inherits the contents of the old module.

Specialized vocabularies can be represented as small grammars that only do "one thing" each. For instance, the following are grammars for fruit and mushrooms:

    abstract Fruit = {
      cat Fruit ;
      fun Apple, Peach : Fruit ;
    }

    abstract Mushroom = {
      cat Mushroom ;
      fun Cep, Agaric : Mushroom ;
    }

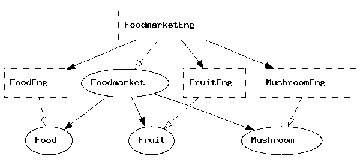

They can afterwards be combined into bigger grammars by using multiple inheritance, i.e. extension of several grammars at the same time:

    abstract Foodmarket = Food, Fruit, Mushroom ** {
      fun
        FruitKind : Fruit -> Kind ;
        MushroomKind : Mushroom -> Kind ;
    }
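The idea that extension puts the contents of modules together can be approximated with plain set and dictionary unions. The sketch below is an illustration under stated assumptions: module, extend and the string-valued function types are hypothetical, Food is abbreviated to a few functions, and name clashes are simply ignored here.

```python
# Toy model of module extension (illustration only): an abstract module is
# its set of categories plus a dict from function names to their types.


def module(cats, funs):
    return {"cat": set(cats), "fun": dict(funs)}


def extend(*bases, body):
    # Models  A = B1, B2, ... ** {body} : put the contents of all extended
    # modules and of the new body together. Name clashes are not checked.
    result = module([], {})
    for m in (*bases, body):
        result["cat"] |= m["cat"]
        result["fun"].update(m["fun"])
    return result


# Food is abbreviated here to a few of its functions.
food = module(["S", "Item", "Kind", "Quality"],
              {"Is": "Item -> Quality -> S", "This": "Kind -> Item", "Wine": "Kind"})
fruit = module(["Fruit"], {"Apple": "Fruit", "Peach": "Fruit"})
mushroom = module(["Mushroom"], {"Cep": "Mushroom", "Agaric": "Mushroom"})

# Single inheritance, as in Morefood = Food ** {...}
morefood = extend(food, body=module(["Question"],
                                    {"QIs": "Item -> Quality -> Question", "Pizza": "Kind"}))

# Multiple inheritance, as in Foodmarket = Food, Fruit, Mushroom ** {...}
foodmarket = extend(food, fruit, mushroom,
                    body=module([], {"FruitKind": "Fruit -> Kind",
                                     "MushroomKind": "Mushroom -> Kind"}))

if __name__ == "__main__":
    print(sorted(foodmarket["cat"]))  # ['Fruit', 'Item', 'Kind', 'Mushroom', 'Quality', 'S']
```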

At this point, you would perhaps like to go back to Food and take apart Wine to build a special Drink module.

When you have created all the abstract syntaxes and one set of concrete syntaxes needed for Foodmarket, your grammar consists of eight GF modules. To see what their dependencies look like, you can use the command visualize_graph = vg,

> visualize_graph

and the graph will pop up in a separate window.

The graph uses

As with the visualize_tree = vt command, the open-source tools Ghostview and Graphviz are needed.

To document your grammar, you may want to print the graph into a file, e.g. a .png file that can be included in an HTML document. You can do this by first printing the graph into a .dot file and then processing this file with the dot program (from the Graphviz package).

    > pm -printer=graph | wf Foodmarket.dot
    > ! dot -Tpng Foodmarket.dot > Foodmarket.png

The latter command is a Unix command, issued from GF by using the shell escape symbol !. The resulting graph was shown in the previous section.

The command print_multi = pm is used for printing the current multilingual grammar in various formats, of which the format -printer=graph just shows the module dependencies. Use help to see what other formats are available:

    > help pm
    > help -printer
    > help help

Another kind of system command can be used inside GF pipes. The escape symbol is then ?.

> generate_trees | ? wc

In comparison to the .cf format, the .gf format looks rather verbose, and demands many more characters to be written. You have probably done this by the copy-paste-modify method, which is a common way to avoid repeating work.

However, there is a more elegant way to avoid repeating work than the copy-and-paste method. The golden rule of functional programming says that whenever you find yourself programming by copy-and-paste, you should write a function instead.

A function separates the shared parts of different computations from the changing parts, its arguments, or parameters. In functional programming languages, such as Haskell, it is possible to share much more code with functions than in imperative languages such as C and Java.

GF is a functional programming language, not only in the sense that the abstract syntax is a system of functions (fun), but also because functional programming can be used to define concrete syntax. This is done by using a new form of judgement, with the keyword oper (for operation), distinct from fun for the sake of clarity.

Here is a simple example of an operation:

oper ss : Str -> {s : Str} = \x -> {s = x} ;

The operation can be applied to an argument, and GF will compute the application into a value. For instance,

ss "boy" ===> {s = "boy"}

(We use the symbol ===> to indicate how an expression is computed into a value; this symbol is not part of GF.)

Thus an oper judgement includes the name of the defined operation, its type, and an expression defining it. As for the syntax of the defining expression, notice the lambda abstraction form \x -> t of the function.

Operation definitions can be included in a concrete syntax. But they are not really tied to a particular set of linearization rules; they should rather be seen as resources usable in many concrete syntaxes.

The resource module type can be used to package oper definitions into reusable resources. Here is an example, with a handful of operations to manipulate strings and records.

    resource StringOper = {
      oper
        SS : Type = {s : Str} ;
        ss : Str -> SS = \x -> {s = x} ;
        cc : SS -> SS -> SS = \x,y -> ss (x.s ++ y.s) ;
        prefix : Str -> SS -> SS = \p,x -> ss (p ++ x.s) ;
    }
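To make the behaviour of these operations explicit, here is a hypothetical Python transcription of StringOper. SS is modelled as a dict with key "s", and Str as a plain string in which ++ joins with a space; plus is our own helper, which drops empty parts so that an empty string behaves like [].

```python
# Python transcription of the StringOper resource (illustration only).


def plus(a, b):
    # Models ++ ; an empty string plays the role of [] and adds no space.
    return " ".join(part for part in (a, b) if part)


def ss(x):          # ss : Str -> SS
    return {"s": x}


def cc(x, y):       # cc : SS -> SS -> SS
    return ss(plus(x["s"], y["s"]))


def prefix(p, x):   # prefix : Str -> SS -> SS
    return ss(plus(p, x["s"]))


if __name__ == "__main__":
    print(prefix("in", ss("addition")))  # {'s': 'in addition'}
    # e.g. a linearization of Is (This Wine) Warm built from these operations
    print(cc(prefix("this", ss("wine")), prefix("is", ss("warm")))["s"])  # this wine is warm
```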

Resource modules can extend other resource modules, in the same way as modules of other types can extend modules of the same type. Thus it is possible to build resource hierarchies.

Any number of resource modules can be opened in a concrete syntax, which makes the definitions contained in the resource usable in the concrete syntax. Here is an example, where the resource StringOper is opened in a new version of FoodEng.

    concrete Food2Eng of Food = open StringOper in {
      lincat
        S, Item, Kind, Quality = SS ;
      lin
        Is item quality = cc item (prefix "is" quality) ;
        This k = prefix "this" k ;
        That k = prefix "that" k ;
        QKind q k = cc q k ;
        Wine = ss "wine" ;
        Cheese = ss "cheese" ;
        Fish = ss "fish" ;
        Very = prefix "very" ;
        Fresh = ss "fresh" ;
        Warm = ss "warm" ;
        Italian = ss "Italian" ;
        Expensive = ss "expensive" ;
        Delicious = ss "delicious" ;
        Boring = ss "boring" ;
    }

Exercise. Use the same string operations to write FoodIta more concisely.

GF, like Haskell, permits partial application of functions. An example of this is the rule

lin This k = prefix "this" k ;

which can be written more concisely

lin This = prefix "this" ;

The first form is perhaps more intuitive to write but, once you get used to partial application, you will appreciate the conciseness and elegance of the second. The logic of partial application is known as currying, named after Haskell B. Curry. The idea is that any n-place function can be defined as a 1-place function whose value is an (n-1)-place function. Thus

oper prefix : Str -> SS -> SS ;

can be used as a 1-place function that takes a Str into a function SS -> SS. The expected linearization of This is exactly a function of such a type, operating on an argument of type Kind whose linearization is of type SS. Thus we can define the linearization directly as prefix "this".
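Currying can be illustrated in Python, whose functions are not curried by default, so partial application has to be requested explicitly: either with functools.partial or with a helper that returns nested one-argument functions. The names this_explicit and curry2 are hypothetical, and SS is again modelled as a dict with key "s".

```python
from functools import partial

# Currying and partial application, sketched in Python (illustration only).


def prefix(p, x):
    # prefix : Str -> SS -> SS, with SS modelled as {"s": str}
    return {"s": p + " " + x["s"]}


# lin This k = prefix "this" k ;   (explicit argument)
def this_explicit(k):
    return prefix("this", k)


# lin This = prefix "this" ;   (partial application)
this = partial(prefix, "this")


def curry2(f):
    # Turns a 2-place function into a 1-place function
    # whose value is again a 1-place function.
    return lambda a: lambda b: f(a, b)


that = curry2(prefix)("that")

if __name__ == "__main__":
    wine = {"s": "wine"}
    print(this_explicit(wine) == this(wine))  # True
    print(that(wine))                         # {'s': 'that wine'}
```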

Exercise. Define an operation infix analogous to prefix, such that it allows you to write

lin Is = infix "is" ;

To test a resource module independently, you must import it with the flag -retain, which tells GF to retain oper definitions in memory; the usual behaviour is that oper definitions are just applied to compile linearization rules (this is called inlining) and then thrown away.

> i -retain StringOper.gf

The command compute_concrete = cc computes any expression formed by operations and other GF constructs. For example,

    > compute_concrete prefix "in" (ss "addition")
    {
      s : Str = "in" ++ "addition"
    }

Using operations defined in resource modules is a way to avoid repetitive code. In addition, it enables a new kind of modularity and division of labour in grammar writing: grammarians familiar with the linguistic details of a language can make their knowledge available through resource grammar modules, whose users only need to pick the right operations and not to know their implementation details.

In the following sections, we will go through some such linguistic details. The programming constructs needed when doing this are useful for all GF programmers, even if they don't hand-code the linguistics of their applications but get them from libraries. It is also useful to know something about the linguistic concepts of inflection, agreement, and parts of speech.

Suppose we want to say, with the vocabulary included in Food.gf, things like

all Italian wines are delicious

What we need is a new grammatical facility: the plural forms of nouns and verbs (wines, are), as opposed to their singular forms.

The introduction of plural forms requires two things: the inflection of nouns and verbs in singular and plural, and the agreement of the verb with the subject (the verb must have the same number as the subject).

Different languages have different rules of inflection and agreement. For instance, Italian also has agreement in gender (masculine vs. feminine). We want to express such special features of languages in the concrete syntax while ignoring them in the abstract syntax.

To be able to do all this, we need one new judgement form and many new expression forms. We also need to generalize linearization types from strings to more complex types.

Exercise. Make a list of the possible forms that nouns, adjectives, and verbs can have in some languages that you know.

We define the parameter type of number in English by using a new form of judgement:

param Number = Sg | Pl ;

To express that Kind expressions in English have a linearization depending on number, we replace the linearization type {s : Str} with a type where the s field is a table depending on number:

lincat Kind = {s : Number => Str} ;

The table type Number => Str is in many respects similar to a function type (Number -> Str). The main difference is that the argument type of a table type must always be a parameter type. This means that the argument-value pairs can be listed in a finite table. The following example shows such a table:

lin Cheese = {s = table {
  Sg => "cheese" ;
  Pl => "cheeses"
  }
} ;

The table consists of branches, where a pattern on the left of the arrow => is assigned a value on the right. The application of a table to a parameter is done by the selection operator !. For instance,

table {Sg => "cheese" ; Pl => "cheeses"} ! Pl

is a selection that computes into the value "cheeses". This computation is performed by pattern matching: return the value from the first branch whose pattern matches the selection argument. Thus

table {Sg => "cheese" ; Pl => "cheeses"} ! Pl
===> "cheeses"
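To make the selection semantics concrete, here is a small Python model. It is an illustrative sketch, not a description of how GF implements tables. A table is a list of (pattern, value) branches, and selection returns the value of the first branch whose pattern matches. The wildcard "_", which matches anything, is an addition of this sketch.

```python
from enum import Enum


class Number(Enum):
    """Model of: param Number = Sg | Pl ;"""
    Sg = "Sg"
    Pl = "Pl"


def select(table, arg):
    """Model of selection t ! arg: return the value of the first branch
    whose pattern matches arg. A branch is a (pattern, value) pair, and
    the pattern "_" (a wildcard) matches anything."""
    for pattern, value in table:
        if pattern == "_" or pattern == arg:
            return value
    raise ValueError(f"no branch matches {arg}")


cheese = [(Number.Sg, "cheese"), (Number.Pl, "cheeses")]
print(select(cheese, Number.Pl))  # cheeses
```

Because branches are tried in order, a wildcard branch placed first would shadow all later ones, which mirrors the first-match rule stated above.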

Exercise. In a previous exercise, we made a list of the possible forms that nouns, adjectives, and verbs can have in some languages that you know. Now take some of the results and implement them by using parameter type definitions and tables. Write them into a resource module, which you can test by using the command compute_concrete.

All English common nouns are inflected in number, most of them in the same way: the plural form is obtained from the singular by adding the ending s. This rule is an example of a paradigm - a formula telling how the inflection forms of a word are formed.

From the GF point of view, a paradigm is a function that takes a lemma (also known as a dictionary form) and returns an inflection table of the desired type. Paradigms are not functions in the sense of the fun judgements of abstract syntax (which operate on trees and not on strings), but operations defined in oper judgements.

The following operation defines the regular noun paradigm of English:

oper regNoun : Str -> {s : Number => Str} = \x -> {
  s = table {
    Sg => x ;
    Pl => x + "s"
    }
  } ;

The gluing operator + specifies that the string held in the variable x and the ending "s" are written together to form one token. Thus, for instance,

(regNoun "cheese").s ! Pl ---> "cheese" + "s" ---> "cheeses"
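A Python sketch of the same paradigm follows. The dict representation of the finite table and the name reg_noun are assumptions of this illustration. Python's + on strings plays the role of GF's gluing +: it produces a single token with no space in between, unlike ++.

```python
def reg_noun(x: str) -> dict:
    """Model of regNoun : Str -> {s : Number => Str}.

    The finite table Number => Str is a dict keyed by parameter names.
    GF's gluing operator + is Python string concatenation: it yields a
    single token, with no space in between."""
    return {"s": {"Sg": x, "Pl": x + "s"}}


print(reg_noun("cheese")["s"]["Pl"])  # cheeses
```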

Exercise. Identify cases in which the regNoun paradigm does not apply in English, and implement some alternative paradigms.

Exercise. Implement a paradigm for regular verbs in English.

Exercise. Implement some regular paradigms for other languages you have considered in earlier exercises.

Some English nouns, such as mouse, are so irregular that it makes no sense to see them as instances of a paradigm. Even then, it is useful to perform data abstraction from the definition of the type Noun, and introduce a constructor operation, a worst-case function for nouns:

oper mkNoun : Str -> Str -> Noun = \x,y -> {
  s = table {
    Sg => x ;
    Pl => y
    }
  } ;

Thus we can define

lin Mouse = mkNoun "mouse" "mice" ;

and

oper regNoun : Str -> Noun = \x ->
  mkNoun x (x + "s") ;

instead of writing the inflection tables explicitly.

The grammar engineering advantage of worst-case functions is that the author of the resource module may change the definitions of Noun and mkNoun, and still retain the interface (i.e. the system of type signatures) that makes it correct to use these functions in concrete modules. In programming terms, Noun is then treated as an abstract datatype.
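The benefit of a worst-case constructor can be sketched in Python. This is an analogy only, and all names are hypothetical. reg_noun builds nouns solely through mk_noun, so if the representation of Noun changed, only mk_noun would need rewriting.

```python
from typing import Dict, TypedDict


class Noun(TypedDict):
    """Model of the abstract type Noun = {s : Number => Str}."""
    s: Dict[str, str]


def mk_noun(sg: str, pl: str) -> Noun:
    """Worst-case constructor: both forms are given explicitly."""
    return {"s": {"Sg": sg, "Pl": pl}}


def reg_noun(x: str) -> Noun:
    """Regular paradigm defined only through mk_noun, never by building
    the table directly, so the Noun representation can change freely."""
    return mk_noun(x, x + "s")


mouse = mk_noun("mouse", "mice")
print(mouse["s"]["Pl"], reg_noun("wine")["s"]["Pl"])  # mice wines
```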

In addition to the completely regular noun paradigm regNoun, some other frequent noun paradigms deserve to be defined, for instance,

sNoun : Str -> Noun = \kiss -> mkNoun kiss (kiss + "es") ;

What about nouns like fly, with the plural flies? The already available solution is to use the longest common prefix fl (also known as the technical stem) as argument, and define

yNoun : Str -> Noun = \fl -> mkNoun (fl + "y") (fl + "ies") ;

But this paradigm would be very unintuitive to use, because the technical stem is not an existing form of the word. A better solution is to use the lemma and a string operator init, which returns the initial segment (i.e. all characters but the last) of a string:

yNoun : Str -> Noun = \fly -> mkNoun fly (init fly + "ies") ;

The operation init belongs to a set of operations in the resource module Prelude, which therefore has to be opened so that init can be used. Its dual is last:

> cc init "curry"
"curr"

> cc last "curry"
"y"

As generalizations of the library functions init and last, GF has two predefined functions: Predef.tk n s, which "takes" the prefix of s that remains after omitting its last n characters, and Predef.dp n s, which returns the last n characters of s. For instance,

> cc Predef.tk 3 "worried"
"worr"

> cc Predef.dp 3 "worried"
"ied"

The prefix Predef is given to a handful of functions that could not be defined internally in GF. They are available in all modules without an explicit open of the module Predef.
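The behaviour of these string operations, as shown by the cc examples, can be mirrored with Python slicing. This is a sketch. For n at least the length of the string, it returns "" (tk) or the whole string (dp). That choice is an assumption, since the text does not cover such cases.

```python
def init(s: str) -> str:
    """Prelude init: all characters but the last."""
    return s[:-1]


def last(s: str) -> str:
    """Prelude last: the last character ("" for the empty string)."""
    return s[-1:]


def tk(n: int, s: str) -> str:
    """Predef.tk n s: s without its last n characters.
    For n >= len(s) this sketch returns "" (an assumption)."""
    return s[:len(s) - n] if n < len(s) else ""


def dp(n: int, s: str) -> str:
    """Predef.dp n s: the last n characters of s.
    For n >= len(s) this sketch returns s itself (an assumption)."""
    return s[len(s) - n:] if n < len(s) else s


print(init("curry"), last("curry"))        # curr y
print(tk(3, "worried"), dp(3, "worried"))  # worr ied
```

In this model, init and last are the special cases tk 1 and dp 1.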

We have so far built all expressions of the table form from branches whose patterns are constants introduced in param definitions, as well as constant strings. But there are more expressive patterns. Here is a summary of the possible forms:

a variable x matches anything, binding x to the matched value

_ matches anything

"s" matches the same string

P | ... | Q matches anything that one of the disjuncts matches

Pattern matching is performed in the order in which the branches appear in the table: the branch of the first matching pattern is followed.

As syntactic sugar, one-branch tables can be written concisely,

\\P,...,Q => t === table {P => ... table {Q => t} ...}

Finally, the case expressions common in functional programming languages are syntactic sugar for table selections:

case e of {...} === table {...} ! e

It may be hard for the user of a resource morphology to pick the right inflection paradigm. One way to help is to define a more intelligent paradigm, which chooses the ending by first analysing the lemma. The following variant for English regular nouns puts together all the previously shown paradigms, and chooses one of them on the basis of the final letter of the lemma (found by the prelude operator last).

regNoun : Str -> Noun = \s -> case last s of {
  "s" | "z" => mkNoun s (s + "es") ;
  "y"       => mkNoun s (init s + "ies") ;
  _         => mkNoun s (s + "s")
  } ;

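This definition uses several GF expression forms not shown in the earlier paradigms: a case expression, the disjunctive pattern "s" | "z", and the wildcard _, all of which are covered by the summary of patterns above.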

The paradigm regNoun does not give the correct forms for all nouns. For instance, mouse - mice and fish - fish must be given by using mkNoun. The word boy would also be inflected incorrectly. To prevent this, either use mkNoun or modify regNoun so that the "y" case does not apply if the second-last character is a vowel.
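The case analysis of the intelligent regNoun can be mirrored with ordered if-branches in Python. This is a sketch, not GF code. Running it on boy reproduces the error just described, because the "y" branch yields boies.

```python
def mk_noun(sg: str, pl: str) -> dict:
    """Worst-case constructor: both forms given explicitly."""
    return {"s": {"Sg": sg, "Pl": pl}}


def reg_noun(s: str) -> dict:
    """Model of the case-based regNoun. Branches are tried in order,
    as in a GF case expression; the last one plays the role of _."""
    final = s[-1:]                          # last s
    if final in ("s", "z"):                 # "s" | "z"
        return mk_noun(s, s + "es")
    if final == "y":                        # "y"
        return mk_noun(s, s[:-1] + "ies")   # init s + "ies"
    return mk_noun(s, s + "s")              # _


for word in ["wine", "kiss", "fly", "boy"]:
    print(word, reg_noun(word)["s"]["Pl"])
```

The loop prints wines, kisses, flies, and the incorrect boies.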

Exercise. Extend the regNoun paradigm so that it takes care of all variations there are in English. Test it with the nouns ax, bamboo, boy, bush, hero, match.

Hint. The library functions Predef.dp and Predef.tk are useful in this task.

Exercise. The same rules that form plural nouns in English also apply in the formation of third-person singular verbs. Write a regular verb paradigm that uses this idea, but first rewrite regNoun so that the analysis needed to build s-forms is factored out as a separate oper, which is shared with regVerb.

A common idiom is to gather the oper and param definitions needed for inflecting words in a language into a morphology module. Here is a simple example, MorphoEng.

--# -path=.:prelude

resource MorphoEng = open Prelude in {

  param
    Number = Sg | Pl ;

  oper
    Noun, Verb : Type = {s : Number => Str} ;

    mkNoun : Str -> Str -> Noun = \x,y -> {
      s = table {
        Sg => x ;
        Pl => y
        }
      } ;

    regNoun : Str -> Noun = \s -> case last s of {
      "s" | "z" => mkNoun s (s + "es") ;
      "y"       => mkNoun s (init s + "ies") ;
      _         => mkNoun s (s + "s")
      } ;

    -- x is the plural (and infinitive) form, y the third-person singular
    mkVerb : Str -> Str -> Verb = \x,y -> mkNoun y x ;

    regVerb : Str -> Verb = \s -> case last s of {
      "s" | "z" => mkVerb s (s + "es") ;
      "y"       => mkVerb s (init s + "ies") ;
      "o"       => mkVerb s (s + "es") ;
      _         => mkVerb s (s + "s")
      } ;

}

The first line gives the compiler a hint: the search path needed to find all the other modules that the module depends on. The directory prelude is a subdirectory of GF/lib. To be able to refer to it in this simple way, you can set the environment variable GF_LIB_PATH to point to GF/lib.

We can now enrich the concrete syntax definitions to comprise morphology. This will involve a more radical variation between languages (e.g. English and Italian) than just the use of different words. In general, parameters and linearization types are different in different languages, but this does not prevent the use of a common abstract syntax.

The rule of subject-verb agreement in English says that the verb phrase must be inflected in the number of the subject. This means that a noun phrase (functioning as a subject) inherently has a number, which it passes to the verb. The verb does not have a number, but must be able to receive whatever number the subject has. This distinction is nicely represented by the different linearization types of noun phrases and verb phrases:

lincat NP = {s : Str ; n : Number} ;

lincat VP = {s : Number => Str} ;

We say that the number of NP is an inherent feature, whereas the number of VP is a variable feature (or a parametric feature).

The agreement rule itself is expressed in the linearization rule of the predication function:

lin PredVP np vp = {s = np.s ++ vp.s ! np.n} ;
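The division between inherent and variable features can be seen in a Python sketch of PredVP. Dicts stand in for GF records and tables, and the example phrases are hypothetical. The noun phrase stores its number in the field n, and the verb phrase is a table in which that number selects a form.

```python
def pred_vp(np: dict, vp: dict) -> dict:
    """Model of: lin PredVP np vp = {s = np.s ++ vp.s ! np.n} ;

    np carries its number as an inherent field n; vp is a table over
    Number, and the subject's number selects the verb form."""
    return {"s": np["s"] + " " + vp["s"][np["n"]]}


this_wine = {"s": "this wine", "n": "Sg"}
these_wines = {"s": "these wines", "n": "Pl"}
is_delicious = {"s": {"Sg": "is delicious", "Pl": "are delicious"}}

print(pred_vp(this_wine, is_delicious)["s"])    # this wine is delicious
print(pred_vp(these_wines, is_delicious)["s"])  # these wines are delicious
```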

Below we present FoodsEng, assuming the abstract syntax Foods, which is similar to Food but also has the plural determiners These and Those. The reader is invited to inspect the way in which agreement works in the formation of sentences. The grammar uses both Prelude and MorphoEng. We will later see how to make the grammar even more high-level by using a resource grammar library and parametrized modules.

--# -path=.:resource:prelude

concrete FoodsEng of Foods = open Prelude, MorphoEng in {

  lincat
    S, Quality = SS ;
    Kind = {s : Number => Str} ;
    Item = {s : Str ; n : Number} ;

  lin
    Is item quality = ss (item.s ++ (mkVerb "are" "is").s ! item.n ++ quality.s) ;
    This  = det Sg "this" ;
    That  = det Sg "that" ;
    These = det Pl "these" ;
    Those = det Pl "those" ;
    QKind quality kind = {s = \\n => quality.s ++ kind.s ! n} ;
    Wine = regNoun "wine" ;
    Cheese = regNoun "cheese" ;
    Fish = mkNoun "fish" "fish" ;
    Very = prefixSS "very" ;
    Fresh = ss "fresh" ;
    Warm = ss "warm" ;
    Italian = ss "Italian" ;
    Expensive = ss "expensive" ;
    Delicious = ss "delicious" ;
    Boring = ss "boring" ;

  oper
    det : Number -> Str -> Noun -> {s : Str ; n : Number} = \n,d,cn -> {
      s = d ++ cn.s ! n ;
      n = n
      } ;

}

The reader familiar with a functional programming language such as Haskell will have noticed the similarity between parameter types in GF and algebraic datatypes (data definitions in Haskell). The GF parameter types are actually a special case of algebraic datatypes: the main restriction is that in GF, these types must be finite. (It is this restriction that makes it possible to invert linearization rules into parsing methods.)

However, finite is not the same thing as enumerated. Even in GF, parameter constructors can take arguments, provided these arguments are from other parameter types - only recursion is forbidden. Such parameter types impose a hierarchic order among parameters. They are often needed to define the linguistically most accurate parameter systems.

To give an example, Swedish adjectives are inflected in number (singular or plural) and gender (uter or neuter). These parameters would suggest 2*2=4 different forms. However, the gender distinction is made only in the singular. Therefore, it would be inaccurate to define adjective paradigms using the type Gender => Number => Str. The following hierarchic definition yields an accurate system of three adjectival forms.

param AdjForm = ASg Gender | APl ;

param Gender = Utr | Neutr ;

Here is an example of pattern matching, the paradigm of regular adjectives.

oper regAdj : Str -> AdjForm => Str = \fin -> table {
  ASg Utr   => fin ;
  ASg Neutr => fin + "t" ;
  APl       => fin + "a"
  } ;

A constructor can be used as a pattern that has patterns as arguments. For instance, the adjectival paradigm in which the two singular forms are the same can be defined as follows:

oper plattAdj : Str -> AdjForm => Str = \platt -> table {
  ASg _ => platt ;
  APl   => platt + "a"
  } ;
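Python dataclasses can illustrate why the hierarchic type has exactly three values. This sketch is an analogy. It models the two constructors of AdjForm as classes, and the tables of regAdj and plattAdj become dicts keyed by AdjForm values.

```python
from dataclasses import dataclass
from enum import Enum


class Gender(Enum):
    """Model of: param Gender = Utr | Neutr ;"""
    Utr = "Utr"
    Neutr = "Neutr"


@dataclass(frozen=True)
class ASg:
    """Constructor ASg Gender: only singular forms carry a gender."""
    g: Gender


@dataclass(frozen=True)
class APl:
    """Constructor APl: the plural has no gender argument."""


# Every value of AdjForm = ASg Gender | APl: three values, not 2*2 = 4.
ALL_FORMS = [ASg(Gender.Utr), ASg(Gender.Neutr), APl()]


def reg_adj(fin: str) -> dict:
    """Model of regAdj: one entry per AdjForm value."""
    return {ASg(Gender.Utr): fin, ASg(Gender.Neutr): fin + "t", APl(): fin + "a"}


def platt_adj(platt: str) -> dict:
    """Model of plattAdj: the pattern ASg _ covers both singular forms."""
    return {form: platt if isinstance(form, ASg) else platt + "a"
            for form in ALL_FORMS}


print(reg_adj("fin")[ASg(Gender.Neutr)], reg_adj("fin")[APl()])  # fint fina
```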

Even though in GF morphology is mostly used as an auxiliary to syntax, it can also be useful in its own right. The command morpho_analyse = ma can be used to read a text and return for each word the analyses that it has in the current concrete syntax.

> rf bible.txt | morpho_analyse

In the same way as translation exercises, morphological exercises can be generated by the command morpho_quiz = mq. Usually, the category is set to something other than S. For instance,

> cd GF/lib/resource-1.0/
> i french/IrregFre.gf
> morpho_quiz -cat=V

Welcome to GF Morphology Quiz.
...

réapparaître : VFin VCondit Pl P2
réapparaitriez
> No, not réapparaitriez, but
réapparaîtriez
Score 0/1

Finally, a list of morphological exercises can be generated off-line and saved in a file for later use, by the command morpho_list = ml:

> morpho_list -number=25 -cat=V | wf exx.txt

The number flag gives the number of exercises generated.

A linearization type may contain more than one string. One case where this is useful is English particle verbs, such as switch off. The linearization of a sentence may place the object between the verb and the particle: he switched it off.

The following judgement defines transitive verbs as discontinuous constituents, i.e. as having a linearization type with two strings and not just one.

lincat TV = {s : Number => Str ; part : Str} ;

This linearization rule shows how the constituents are separated by the object in complementization.

lin PredTV tv obj = {s = \\n => tv.s ! n ++ obj.s ++ tv.part} ;
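A Python sketch of discontinuous constituents shows the object landing between the verb and its particle. Dicts stand for records and tables, and the small lexicon is hypothetical.

```python
def pred_tv(tv: dict, obj: dict) -> dict:
    """Model of PredTV: for each number n, the verb form, then the
    object, then the particle; the object sits between the two verb
    constituents."""
    return {"s": {n: tv["s"][n] + " " + obj["s"] + " " + tv["part"]
                  for n in ("Sg", "Pl")}}


# A discontinuous TV: an inflection table plus a separate particle
switch_off = {"s": {"Sg": "switches", "Pl": "switch"}, "part": "off"}
it = {"s": "it"}

print(pred_tv(switch_off, it)["s"]["Sg"])  # switches it off
```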

There is no restriction on the number of discontinuous constituents (or other fields) a lincat may contain. The only condition is that the fields must be of finite types, i.e. built from records, tables, parameters, and Str, and not functions.

A mathematical result about parsing in GF says that the worst-case complexity of parsing increases with the number of discontinuous constituents. This is potentially a reason to avoid discontinuous constituents.

Moreover, the parsing and linearization commands only give accurate results for categories whose linearization type has a unique Str-valued field labelled s. Therefore, discontinuous constituents are not a good idea in top-level categories accessed by the users of a grammar application.

Sometimes there are many alternative ways to define a concrete syntax. For instance, verb negation in English can be expressed both by does not and doesn't. In linguistic terms, these expressions are in free variation. The variants construct of GF can be used to give a list of strings in free variation. For example,

lin NegVerb verb = {s = variants {["does not"] ; "doesn't"} ++ verb.s ! Pl} ;

An empty variant list

variants {}

can be used e.g. if a word lacks a certain form.
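One way to think of variants is as sets of alternatives that multiply under concatenation. The Python sketch below models a variant list as a list of strings and ++ as a Cartesian product joined with spaces. It illustrates the semantics only and makes no claim about how GF represents variants internally.

```python
from itertools import product


def concat(*parts):
    """Model of ++ over variant lists: each argument is a list of
    alternative strings, and the result lists every combination."""
    return [" ".join(combo) for combo in product(*parts)]


does_not = ["does not", "doesn't"]   # variants {["does not"] ; "doesn't"}
walk = ["walk"]                       # a single form is a one-element list

print(concat(does_not, walk))  # ['does not walk', "doesn't walk"]
print(concat([], walk))        # [] (variants {} contributes no string)
```

The empty list behaves like variants {}: any concatenation involving it has no realization at all, which is why it can mark a missing form.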

In general, variants should be used cautiously. They are not recommended for modules intended as libraries, because the user of the library has no way to choose among the variants.

Large libraries, such as the GF Resource Grammar Library, may define hundreds of names, which can be impractical for both the library writer and the user. The writer has to invent longer and longer names which are not always intuitive, and the user has to learn or at least be able to find all these names. A solution to this problem, adopted by languages such as C++, is overloading: the same name can be used for several functions. When such a name is used, the compiler performs overload resolution to find out which of the possible functions is meant. The resolution is based on the types of the functions: all functions that have the same name must have different types.

In C++, functions with the same name can be scattered everywhere in the program. In GF, they must be grouped together in overload groups. Here is an example of an overload group, defining four ways to define nouns in Italian:

oper mkN = overload {
  mkN : Str -> N =                    -- regular nouns
  mkN : Str -> Gender -> N =          -- regular nouns with unexpected gender
  mkN : Str -> Str -> N =             -- irregular nouns
  mkN : Str -> Str -> Gender -> N =   -- irregular nouns with unexpected gender
  }

All of the following uses of mkN are easy to resolve:

lin Pizza = mkN "pizza" ;          -- Str -> N
lin Hand = mkN "mano" Fem ;        -- Str -> Gender -> N
lin Man = mkN "uomo" "uomini" ;    -- Str -> Str -> N
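Overload resolution by argument types can be imitated in Python with a dispatch table keyed on the tuple of argument types. The sketch is hypothetical throughout. The Italian plural and gender guesses are deliberately crude stand-ins, not the real ParadigmsIta rules, and resolution happens at run time here, rather than at compile time as in GF.

```python
from enum import Enum


class Gender(Enum):
    Masc = "Masc"
    Fem = "Fem"


def guess_plural(sg: str) -> str:
    # Hypothetical simplification: -a -> -e, -o/-e -> -i, otherwise unchanged
    if sg.endswith("a"):
        return sg[:-1] + "e"
    if sg.endswith(("o", "e")):
        return sg[:-1] + "i"
    return sg


def guess_gender(sg: str) -> Gender:
    # Hypothetical simplification: -a is feminine, everything else masculine
    return Gender.Fem if sg.endswith("a") else Gender.Masc


# The overload group: one entry per type signature, all signatures distinct.
MK_N = {
    (str,): lambda s: (s, guess_plural(s), guess_gender(s)),
    (str, Gender): lambda s, g: (s, guess_plural(s), g),
    (str, str): lambda s, p: (s, p, guess_gender(s)),
    (str, str, Gender): lambda s, p, g: (s, p, g),
}


def mk_n(*args):
    """Overload resolution: select the entry whose signature matches the
    types of the actual arguments."""
    signature = tuple(type(a) for a in args)
    if signature not in MK_N:
        raise TypeError(f"no mkN for argument types {signature}")
    return MK_N[signature](*args)


print(mk_n("pizza"))             # ('pizza', 'pizze', <Gender.Fem: 'Fem'>)
print(mk_n("mano", Gender.Fem))  # ('mano', 'mani', <Gender.Fem: 'Fem'>)
print(mk_n("uomo", "uomini"))    # ('uomo', 'uomini', <Gender.Masc: 'Masc'>)
```

As in GF, the dispatch only works because no two entries share a signature. Two entries with the same key would silently overwrite each other in the dict.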

In this chapter, we go through constructs that are not necessary in simple grammars or when the concrete syntax relies on libraries. But they are useful when writing advanced concrete syntax implementations, such as resource grammar libraries. This chapter can safely be skipped if the reader prefers to continue to the chapter on using libraries.

Local definitions ("let expressions") are used in functional programming for two reasons: to structure the code into smaller expressions, and to avoid repeated computation of one and the same expression. Here is an example, from MorphoIta:

oper regNoun : Str -> Noun = \vino ->
  let
    vin = init vino ;
    o   = last vino
  in
  case o of {
    "a"       => mkNoun Fem vino (vin + "e") ;
    "o" | "e" => mkNoun Masc vino (vin + "i") ;
    _         => mkNoun Masc vino vino
    } ;
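In Python, the let-bound names of MorphoIta's regNoun become local variables, each computed once and then reused. This is a sketch. The record layout (a g field plus an s table) is an assumption made for illustration.

```python
def mk_noun(gender: str, sg: str, pl: str) -> dict:
    """Model of MorphoIta.mkNoun: gender, then singular and plural."""
    return {"g": gender, "s": {"Sg": sg, "Pl": pl}}


def reg_noun(vino: str) -> dict:
    """Model of MorphoIta.regNoun; the let-bound names become locals,
    each computed once and then reused."""
    vin = vino[:-1]   # let vin = init vino
    o = vino[-1:]     # let o = last vino
    if o == "a":
        return mk_noun("Fem", vino, vin + "e")
    if o in ("o", "e"):
        return mk_noun("Masc", vino, vin + "i")
    return mk_noun("Masc", vino, vino)


print(reg_noun("vino")["s"]["Pl"], reg_noun("birra")["g"])  # vini Fem
```

A word such as caffè, whose last character is neither a, o, nor e, falls through to the invariable branch.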

Record types and records can be extended with new fields. For instance, in German it is natural to see transitive verbs as verbs with a case. The symbol ** is used for both constructs.

lincat TV = Verb ** {c : Case} ;

lin Follow = regVerb "folgen" ** {c = Dative} ;

Extending a record type or a record with a field whose label it already has is a type error.

A record type T is a subtype of another one R, if T has all the fields of R and possibly other fields. For instance, an extension of a record type is always a subtype of it.

If T is a subtype of R, an object of T can be used whenever an object of R is required. For instance, a transitive verb can be used whenever a verb is required.

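Contravariance concerns function types: if T is a subtype of R, then a function taking an R as argument can also be used wherever a function taking a T is expected, because it can be applied to any object of the subtype T.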
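Record extension and subtyping can be sketched with Python dicts, with labels as keys. The names extend, is_subtype, and verb_form are hypothetical. verb_form stands for any function that only needs the s field of a verb, so it also accepts the extended record.

```python
def extend(r: dict, ext: dict) -> dict:
    """Model of record extension r ** ext. Reusing an existing label is an
    error (in GF, a type error caught at compile time)."""
    clash = r.keys() & ext.keys()
    if clash:
        raise TypeError(f"label(s) already present: {sorted(clash)}")
    return {**r, **ext}


def is_subtype(t: dict, r: dict) -> bool:
    """T is a subtype of R if T has all the fields (labels) of R."""
    return r.keys() <= t.keys()


def verb_form(v: dict) -> str:
    """A function expecting a verb: it only looks at the s field."""
    return v["s"]


folgen = {"s": "folgen"}                  # simplified Verb: a single string
follow = extend(folgen, {"c": "Dative"})  # like: regVerb "folgen" ** {c = Dative}

print(follow)                                          # {'s': 'folgen', 'c': 'Dative'}
print(is_subtype(follow, folgen), verb_form(follow))   # True folgen
```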

Product types and tuples are syntactic sugar for record types and records:

T1 * ... * Tn === {p1 : T1 ; ... ; pn : Tn}

<t1, ..., tn> === {p1 = t1 ; ... ; pn = tn}

Thus the labels p1, p2,... are hard-coded.

Record types of parameter types are also parameter types. A typical example is a record of agreement features, e.g. in French:

oper Agr : PType = {g : Gender ; n : Number ; p : Person} ;

Notice the term PType rather than just Type referring to parameter types. Every PType is also a Type, but not vice versa.

Pattern matching is done in the expected way, but it can moreover utilize partial records: the branch

{g = Fem} => t

in a table of type Agr => T means the same as

{g = Fem ; n = _ ; p = _} => t

Tuple patterns are translated to record patterns in the same way as tuples to records; partial patterns make it possible to write, slightly surprisingly,

case <g,n,p> of {
  <Fem> => t
  ...
  }

To define string operations computed at compile time, such as in morphology, it is handy to use regular expression patterns:

p + q : token consisting of p followed by q

p* : token p repeated 0 or more times (at most as many times as the length of the string to be matched)

-p : matches anything that p does not match

x@p : bind to x what p matches

p | q : matches what either p or q matches

The last three apply to all types of patterns, the first two only to token strings. As an example, we give a rule for the formation of English word forms ending with an s and used in the formation of both plural nouns and third-person present-tense verbs.

add_s : Str -> Str = \w -> case w of {
  _ + "oo"                         => w + "s" ;    -- bamboo
  _ + ("s" | "z" | "x" | "sh" | "o") => w + "es" ;   -- bus, hero
  _ + ("a" | "o" | "u" | "e") + "y"  => w + "s" ;    -- boy
  x + "y"                          => x + "ies" ;  -- fly
  _                                => w + "s"      -- car
  } ;
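Python's re.fullmatch can emulate the ordered branches of add_s. In this sketch, each GF pattern becomes a regular expression, the expressions are tried in order, and the first full match wins. Like the GF rule, it does not handle -ch words such as match.

```python
import re

# Ordered rules mirroring the branches of add_s; the first full match wins.
ADD_S_RULES = [
    (r".*oo", lambda w, m: w + "s"),                     # bamboo
    (r".*(s|z|x|sh|o)", lambda w, m: w + "es"),          # bus, hero
    (r".*(a|o|u|e)y", lambda w, m: w + "s"),             # boy
    (r"(?P<x>.*)y", lambda w, m: m.group("x") + "ies"),  # fly: x + "y"
    (r".*", lambda w, m: w + "s"),                       # car
]


def add_s(w: str) -> str:
    """Apply the first rule whose pattern matches the whole word."""
    for pattern, build in ADD_S_RULES:
        m = re.fullmatch(pattern, w)
        if m:
            return build(w, m)
    raise ValueError(w)  # unreachable: the last rule matches everything


print([add_s(w) for w in ["bamboo", "bus", "hero", "boy", "fly", "car"]])
```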

Here is another example, the plural formation in Swedish 2nd declension. The second branch uses a variable binding with @ to cover the cases where an unstressed pre-final vowel e disappears in the plural (nyckel-nycklar, seger-segrar, bil-bilar):

plural2 : Str -> Str = \w -> case w of {
  pojk + "e"                     => pojk + "ar" ;
  nyck + "e" + l@("l" | "r" | "n") => nyck + l + "ar" ;
  bil                            => bil + "ar"
  } ;
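The same regular-expression approach works for plural2. Here a named group plays the role of the @-bound variable l. This is a Python approximation of the GF patterns, not GF code.

```python
import re


def plural2(w: str) -> str:
    """Model of plural2 (Swedish 2nd declension); branches in GF order."""
    m = re.fullmatch(r"(?P<pojk>.*)e", w)
    if m:                                   # pojk + "e"
        return m.group("pojk") + "ar"
    m = re.fullmatch(r"(?P<nyck>.*)e(?P<l>l|r|n)", w)
    if m:                                   # nyck + "e" + l@("l" | "r" | "n")
        return m.group("nyck") + m.group("l") + "ar"
    return w + "ar"                         # bil


print([plural2(w) for w in ["pojke", "nyckel", "seger", "bil"]])
```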

Semantics: variables are always bound to the first match, which is the first in the sequence of binding lists Match p v defined as follows. In the definition, p is a pattern and v is a value. The semantics is given in Haskell notation.

Match (p1|p2) v = Match p1 v ++ Match p2 v
Match (p1+p2) s = [b1 ++ b2 | i <- [0..length s], let (s1,s2) = splitAt i s,
                              b1 <- Match p1 s1, b2 <- Match p2 s2]
Match p* s      = [[]] if Match "" s ++ Match p s ++ Match (p+p) s ++ ... /= []
Match -p v      = [[]] if Match p v == []
Match c v       = [[]] if c == v      -- for constant and literal patterns c
Match x v       = [[(x,v)]]           -- for variable patterns x
Match x@p v     = [(x,v) : b | b <- Match p v]
Match p v       = []                  -- otherwise (failure)

Examples:

x + "e" + y matches "peter" with x = "p", y = "ter"

x + "er"* matches "burgerer" with x = "burg"
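The Match semantics can be executed directly. The Python transcription below encodes patterns as tuples, which is an assumption of this sketch. It reads the x@p clause as adding the binding (x,v) to each binding list of Match p v. The transcription reproduces both examples above.

```python
# Patterns are tuples: ("lit", s), ("var", x), ("alt", p, q), ("seq", p, q),
# ("star", p), ("neg", p), ("as", x, p). A match result is a list of
# binding lists; [] means failure and [[]] means success with no bindings.

def match(p, v):
    kind = p[0]
    if kind == "alt":    # Match (p1|p2) v
        return match(p[1], v) + match(p[2], v)
    if kind == "seq":    # Match (p1+p2) s: try every split point, left to right
        return [b1 + b2
                for i in range(len(v) + 1)
                for b1 in match(p[1], v[:i])
                for b2 in match(p[2], v[i:])]
    if kind == "star":   # Match p* s: no bindings are exported
        return [[]] if _star(p[1], v) else []
    if kind == "neg":    # Match -p v
        return [[]] if not match(p[1], v) else []
    if kind == "lit":    # constant and literal patterns
        return [[]] if p[1] == v else []
    if kind == "var":    # variable patterns
        return [[(p[1], v)]]
    if kind == "as":     # Match x@p v
        return [[(p[1], v)] + b for b in match(p[2], v)]
    raise ValueError(f"unknown pattern kind: {kind}")


def _star(p, s):
    """True if s is a concatenation of zero or more strings matched by p."""
    if s == "":
        return True
    # Non-empty first chunk, so the recursion always terminates.
    return any(match(p, s[:i]) and _star(p, s[i:]) for i in range(1, len(s) + 1))


def first_match(p, v):
    """Bindings of the first match, or None if the pattern fails."""
    results = match(p, v)
    return dict(results[0]) if results else None


p1 = ("seq", ("var", "x"), ("seq", ("lit", "e"), ("var", "y")))  # x + "e" + y
p2 = ("seq", ("var", "x"), ("star", ("lit", "er")))              # x + "er"*
print(first_match(p1, "peter"))     # {'x': 'p', 'y': 'ter'}
print(first_match(p2, "burgerer"))  # {'x': 'burg'}
```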

Exercise. Implement the German Umlaut operation on word stems. The operation changes the vowel of the stressed stem syllable as follows: a to ä, au to äu, o to ö, and u to ü. You can assume that the operation only takes syllables as arguments. Test the operation to see whether it correctly changes Arzt to Ärzt, Baum to Bäum, Topf to Töpf, and Kuh to Küh.

Sometimes a token has different forms depending on the token that follows. An example is the English indefinite article, which is an if a vowel follows and a otherwise. Which form is chosen can only be decided at run time, i.e. when a string is actually built. GF has a special construct for such tokens, the pre construct, exemplified in

oper artIndef : Str =
  pre {"a" ; "an" / strs {"a" ; "e" ; "i" ; "o"}} ;

Thus

artIndef ++ "cheese" ---> "a" ++ "cheese"
artIndef ++ "apple" ---> "an" ++ "apple"

This example does not work in all situations: words beginning with u follow no general rule, and other problematic words include euphemism, one-eyed, and n-gram. It is possible to write

oper artIndef : Str =
  pre {"a" ;
       "a"  / strs {"eu" ; "one"} ;
       "an" / strs {"a" ; "e" ; "i" ; "o" ; "n-"}
      } ;
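The following Python sketch shows how pre tokens might be resolved once the following token is known. It tries the alternatives in the listed order and resolves tokens from right to left. Both behaviours are assumptions of this illustration, not documented GF behaviour.

```python
def pre(default, *alternatives):
    """Model of pre {default ; form1 / strs {...} ; ...}.

    Returns a function from the following token to the chosen form.
    Alternatives are tried in the listed order (an assumption of this sketch).
    """
    def choose(next_token: str) -> str:
        for form, prefixes in alternatives:
            if next_token.startswith(tuple(prefixes)):
                return form
        return default
    return choose


def linearize(tokens) -> str:
    """Resolve pre-tokens right to left, once the next token is known."""
    out = []
    following = ""
    for tok in reversed(tokens):
        if callable(tok):
            tok = tok(following)
        out.append(tok)
        following = tok
    return " ".join(reversed(out))


# The refined artIndef from the text
art_indef = pre("a",
                ("a", ["eu", "one"]),
                ("an", ["a", "e", "i", "o", "n-"]))

print(linearize([art_indef, "apple"]))      # an apple
print(linearize([art_indef, "euphemism"]))  # a euphemism
```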

GF has the following predefined categories in abstract syntax:

cat Int ;    -- integers, e.g. 0, 5, 743145151019
cat Float ;  -- floats, e.g. 0.0, 3.1415926
cat String ; -- strings, e.g. "", "foo", "123"

The objects of each of these categories are literals, as indicated in the comments above. No fun definition can have a predefined category as its value type, but they can be used as arguments. For example:

fun StreetAddress : Int -> String -> Address ;
lin StreetAddress number street = {s = number.s ++ street.s} ;
-- e.g. (StreetAddress 10 "Downing Street") : Address

The linearization type of all these categories is {s : Str}.

In this chapter, we will take a look at the GF resource grammar library. We will use the library to implement a slightly extended Food grammar and port it to some new languages.

The GF Resource Grammar Library contains grammar rules for 10 languages (in addition, 2 languages are available as incomplete implementations, and a few more are under construction). Its purpose is to make these rules available for application programmers, who can thereby concentrate on the semantic and stylistic aspects of their grammars, without having to think about grammaticality. The targeted level of application grammarians is that of a skilled programmer with a practical knowledge of the target languages, but without theoretical knowledge about their grammars. Such a combination of skills is typical of programmers who, for instance, want to localize software to new languages.

The current resource languages are

Arabic (incomplete)

Catalan (incomplete)

Danish

English

Finnish

French

German

Italian

Norwegian

Russian

Spanish

Swedish

The first three letters (Eng etc) are used in grammar module names.

The incomplete Arabic and Catalan implementations are sufficient for many applications; they both contain, among other things, complete inflectional morphology.

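The resource library API is divided into language-specific and language-independent parts. To put it roughly,

the syntax API is language-independent, i.e. it has the same types and functions for all languages; its name is SyntaxL for each language L

the morphology API is language-specific, i.e. it has partly different types and functions for different languages; its name is ParadigmsL for each language L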

A full documentation of the API is available on-line in the resource synopsis. For our examples, we will only need a fragment of the full API.

In the first examples, we will make use of the following categories, from the module Syntax.

| Category | Explanation | Example |
|---|---|---|
| Utt | sentence, question, word... | "be quiet" |
| Adv | verb-phrase-modifying adverb | "in the house" |
| AdA | adjective-modifying adverb | "very" |
| S | declarative sentence | "she lived here" |
| Cl | declarative clause, with all tenses | "she looks at this" |
| AP | adjectival phrase | "very warm" |
| CN | common noun (without determiner) | "red house" |
| NP | noun phrase (subject or object) | "the red house" |
| Det | determiner phrase | "those seven" |
| Predet | predeterminer | "only" |
| Quant | quantifier with both sg and pl | "this/these" |
| Prep | preposition, or just case | "in" |
| A | one-place adjective | "warm" |
| N | common noun | "house" |

We will need the following syntax rules from Syntax.

| Function | Type | Example |
|---|---|---|
| mkUtt | S -> Utt | John walked |
| mkUtt | Cl -> Utt | John walks |
| mkCl | NP -> AP -> Cl | John is very old |
| mkNP | Det -> CN -> NP | the first old man |
| mkNP | Predet -> NP -> NP | only John |
| mkDet | Quant -> Det | this |
| mkCN | N -> CN | house |
| mkCN | AP -> CN -> CN | very big blue house |
| mkAP | A -> AP | old |
| mkAP | AdA -> AP -> AP | very very old |

We will also need the following structural words from Syntax.

| Function | Type | Example |
|---|---|---|
| all_Predet | Predet | all |
| defPlDet | Det | the (houses) |
| this_Quant | Quant | this |
| very_AdA | AdA | very |

For French, we will use the following part of ParadigmsFre.

| Function | Type | Example |
|---|---|---|
| Gender | Type | - |
| masculine | Gender | - |
| feminine | Gender | - |
| mkN | (cheval : Str) -> N | - |
| mkN | (foie : Str) -> Gender -> N | - |
| mkA | (cher : Str) -> A | - |
| mkA | (sec,seche : Str) -> A | - |

For German, we will use the following part of ParadigmsGer.

| Function | Type | Example |
|---|---|---|
| Gender | Type | - |
| masculine | Gender | - |
| feminine | Gender | - |
| neuter | Gender | - |
| mkN | (Stufe : Str) -> N | - |
| mkN | (Bild,Bilder : Str) -> Gender -> N | - |
| mkA | Str -> A | - |
| mkA | (gut,besser,beste : Str) -> A | gut,besser,beste |

Exercise. Try out the morphological paradigms in different languages, for instance as follows:

> i -path=alltenses:prelude -retain alltenses/ParadigmsGer.gfr
> cc mkN "Farbe"
> cc mkA "gut" "besser" "beste"

We start with an abstract syntax that is like the earlier Food, but has a plural determiner (all wines) and some new nouns that will need different genders in most languages.

abstract Food = {

  cat
    S ; Item ; Kind ; Quality ;

  fun
    Is : Item -> Quality -> S ;
    This, All : Kind -> Item ;
    QKind : Quality -> Kind -> Kind ;
    Wine, Cheese, Fish, Beer, Pizza : Kind ;
    Very : Quality -> Quality ;
    Fresh, Warm, Italian, Expensive, Delicious, Boring : Quality ;

}

The French implementation opens SyntaxFre and ParadigmsFre to get access to the resource libraries needed. In order to find the libraries, a path directive is prepended; it is interpreted relative to the environment variable GF_LIB_PATH.

--# -path=.:present:prelude

concrete FoodFre of Food = open SyntaxFre,ParadigmsFre in {

  lincat
    S = Utt ;
    Item = NP ;
    Kind = CN ;
    Quality = AP ;

  lin
    Is item quality = mkUtt (mkCl item quality) ;
    This kind = mkNP (mkDet this_Quant) kind ;
    All kind = mkNP all_Predet (mkNP defPlDet kind) ;
    QKind quality kind = mkCN quality kind ;
    Wine = mkCN (mkN "vin") ;
    Beer = mkCN (mkN "bière") ;
    Pizza = mkCN (mkN "pizza" feminine) ;
    Cheese = mkCN (mkN "fromage" masculine) ;
    Fish = mkCN (mkN "poisson") ;
    Very quality = mkAP very_AdA quality ;
    Fresh = mkAP (mkA "frais" "fraîche") ;
    Warm = mkAP (mkA "chaud") ;
    Italian = mkAP (mkA "italien") ;
    Expensive = mkAP (mkA "cher") ;
    Delicious = mkAP (mkA "délicieux") ;
    Boring = mkAP (mkA "ennuyeux") ;

}

The lincat definitions in FoodFre assign resource categories to application categories. In a sense, the application categories are semantic, as they correspond to concepts in the grammar application, whereas the resource categories are syntactic: they give the linguistic means to express concepts in any application.

The lin definitions likewise assign resource functions to application functions. Under the hood, there is a lot of matching with parameters to take care of word order, inflection, and agreement. But the user of the library sees nothing of this: the only parameters you need to give are the genders of some nouns, which cannot be correctly inferred from the word.

In French, for example, the one-argument mkN assigns the noun the feminine gender if and only if it ends with an e. Therefore the words fromage and pizza are given genders. One can of course always give genders manually, to be on the safe side.
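The gender rule just described can be checked against the FoodFre lexicon with a small Python sketch. It encodes only the rule as stated here. The real ParadigmsFre may take more endings into account, so treat this as an illustration of why fromage and pizza need explicit genders.

```python
def guess_gender(noun: str) -> str:
    """The rule stated for the French one-argument mkN:
    feminine if and only if the word ends in e."""
    return "feminine" if noun.endswith("e") else "masculine"


# Actual genders of the FoodFre nouns
lexicon = {"vin": "masculine", "bière": "feminine", "poisson": "masculine",
           "fromage": "masculine", "pizza": "feminine"}

wrong = sorted(w for w, g in lexicon.items() if guess_gender(w) != g)
print(wrong)  # ['fromage', 'pizza']
```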

As for inflection, the one-argument adjective paradigm mkA takes care of completely regular adjectives such as chaud-chaude, but also of special cases such as italien-italienne, cher-chère, and délicieux-délicieuse. But it cannot form frais-fraîche properly. Once again, you can give more forms to be on the safe side. You can also test the paradigms in the GF program.

Exercise. Compile the grammar FoodFre and generate and parse some sentences.

Exercise. Write a concrete syntax of Food for English or some other language included in the resource library. You can also compare the output with the hand-written grammars presented earlier in this tutorial.

Exercise. In particular, try to write a concrete syntax for Italian, even if you don't know Italian. What you need to know is that "beer" is birra and "pizza" is pizza, and that all the nouns and adjectives in the grammar are regular.

If you did the exercise of writing a concrete syntax of Food for some other language, you probably noticed that much of the code looks exactly the same as for French. The immediate reason for this is that the Syntax API is the same for all languages; the deeper reason is that all languages (at least those in the resource package) implement the same syntactic structures and tend to use them in similar ways. Thus it is only the lexical parts of a concrete syntax that you need to write anew for a new language. In brief,

But programming by copy-and-paste is not worthy of a functional programmer. Can we write a function that takes care of the shared parts of grammar modules? Yes, we can. It is not a function in the fun or oper sense, but a function operating on modules, called a functor. This construct is familiar from the functional languages ML and OCaml, but it does not exist in Haskell. It also bears some resemblance to templates in C++. Functors are also known as parametrized modules.

In GF, a functor is a module that opens one or more interfaces. An interface is a module similar to a resource, but it only contains the types of opers, not their definitions. You can think of an interface as a kind of record type. Thus a functor is a kind of function that takes records as arguments and produces a module as its value.
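Taking the record analogy literally, a functor can be modelled as an ordinary function from records to a module. The sketch below is a toy Python model, not GF and not how GF implements functors: each "instance" is a dict, linearizations are plain strings with no inflection or agreement, and the names SYNTAX_ENG, SYNTAX_FIN, LEX_ENG, LEX_FIN, food_i and this_wine_is_warm are hypothetical.

```python
from typing import Any, Callable, Dict

# Toy "instances" of a Syntax-like interface: records of functions.
SYNTAX_ENG: Dict[str, Callable[..., str]] = {
    "mkCl": lambda np, ap: f"{np} is {ap}",
    "this": lambda cn: f"this {cn}",
}
SYNTAX_FIN: Dict[str, Callable[..., str]] = {
    "mkCl": lambda np, ap: f"{np} on {ap}",
    "this": lambda cn: f"tämä {cn}",
}

# Toy "instances" of a lexicon interface: records of words.
LEX_ENG = {"wine_N": "wine", "warm_A": "warm"}
LEX_FIN = {"wine_N": "viini", "warm_A": "lämmin"}


def food_i(syntax: Dict[str, Callable[..., str]], lex: Dict[str, str]) -> Dict[str, Any]:
    """The 'functor': its body refers only to interface fields, never to a language."""
    return {
        "Is": lambda item, quality: syntax["mkCl"](item, quality),
        "This": lambda kind: syntax["this"](kind),
        "Wine": lex["wine_N"],
        "Warm": lex["warm_A"],
    }


# "Functor instantiation": apply the same functor to different instances.
FOOD_ENG = food_i(SYNTAX_ENG, LEX_ENG)
FOOD_FIN = food_i(SYNTAX_FIN, LEX_FIN)


def this_wine_is_warm(concrete: Dict[str, Any]) -> str:
    """Linearize the tree Is (This Wine) Warm in the given toy concrete syntax."""
    return concrete["Is"](concrete["This"](concrete["Wine"]), concrete["Warm"])


if __name__ == "__main__":
    print(this_wine_is_warm(FOOD_ENG))  # this wine is warm
    print(this_wine_is_warm(FOOD_FIN))  # tämä viini on lämmin
```

The design point is that food_i never mentions a particular language: adding a language means supplying new records, just as a GF functor instantiation supplies new instances.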

Let us look at a functor implementation of the Food grammar. Consider its module header first:

incomplete concrete FoodI of Food = open Syntax, LexFood in

In the functor-function analogy, FoodI would be presented as a function with the following type signature:

FoodI : instance of Syntax -> instance of LexFood -> concrete of Food

It takes as arguments two interfaces:

Syntax, the resource grammar interface

LexFood, the domain-specific lexicon interface

Functors opening Syntax and a domain lexicon interface are in fact so typical in GF applications that this structure could be called a design pattern for GF grammars. The idea in this pattern is, again, that the languages use the same syntactic structures but different words.

Before going to the details of the module bodies, let us look at how functors are concretely used. An interface has a header such as

interface LexFood = open Syntax in

To give an instance of it means that all opers are given definitions (of appropriate types). For example,

instance LexFoodGer of LexFood = open SyntaxGer, ParadigmsGer in

Notice that when an interface opens another interface, such as Syntax, its instances open an instance of that interface (here SyntaxGer). But an instance may also open ordinary resources: typically, a domain lexicon instance opens a Paradigms module.

In the function-functor analogy, we now have

SyntaxGer : instance of Syntax

LexFoodGer : instance of LexFood

Thus we can complete the German implementation by "applying" the functor:

FoodI SyntaxGer LexFoodGer : concrete of Food

The GF syntax for doing so is

concrete FoodGer of Food = FoodI with
  (Syntax = SyntaxGer),
  (LexFood = LexFoodGer) ;

Notice that this is the complete module, not just its header. The module body is obtained from FoodI by instantiating the interface constants with the definitions given in the German instances.

A module of this form, characterized by the keyword with, is called a functor instantiation.

Here is the complete code for the functor FoodI:

incomplete concrete FoodI of Food = open Syntax, LexFood in {
  lincat
    S = Utt ;
    Item = NP ;
    Kind = CN ;
    Quality = AP ;
  lin
    Is item quality = mkUtt (mkCl item quality) ;
    This kind = mkNP (mkDet this_Quant) kind ;
    All kind = mkNP all_Predet (mkNP defPlDet kind) ;
    QKind quality kind = mkCN quality kind ;
    Wine = mkCN wine_N ;
    Beer = mkCN beer_N ;
    Pizza = mkCN pizza_N ;
    Cheese = mkCN cheese_N ;
    Fish = mkCN fish_N ;
    Very quality = mkAP very_AdA quality ;
    Fresh = mkAP fresh_A ;
    Warm = mkAP warm_A ;
    Italian = mkAP italian_A ;
    Expensive = mkAP expensive_A ;
    Delicious = mkAP delicious_A ;
    Boring = mkAP boring_A ;
}

Let us now define the LexFood interface:

interface LexFood = open Syntax in {
  oper
    wine_N : N ;
    beer_N : N ;
    pizza_N : N ;
    cheese_N : N ;
    fish_N : N ;
    fresh_A : A ;
    warm_A : A ;
    italian_A : A ;
    expensive_A : A ;
    delicious_A : A ;
    boring_A : A ;
}
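The view of an interface as a record type, together with the requirement that an instance define every declared oper, can be sketched with a Python dataclass. This is an analogy only: LexFood, LEX_FOOD_GER, missing_opers and is_complete are hypothetical Python names, and bare strings stand in for the N and A values that a real instance builds with a Paradigms module.

```python
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class LexFood:
    """Toy 'interface': each field plays the role of a declared oper."""
    wine_N: str
    beer_N: str
    pizza_N: str
    cheese_N: str
    fish_N: str
    fresh_A: str
    warm_A: str
    italian_A: str
    expensive_A: str
    delicious_A: str
    boring_A: str


def missing_opers(definitions: dict) -> set:
    """Return the declared names that `definitions` leaves undefined."""
    return {f.name for f in fields(LexFood)} - set(definitions)


def is_complete(definitions: dict) -> bool:
    """Toy check: a set of definitions is an instance only if nothing is missing."""
    return not missing_opers(definitions)


# A complete toy 'instance' (bare German lemmas instead of real N and A values).
LEX_FOOD_GER = LexFood(
    wine_N="Wein", beer_N="Bier", pizza_N="Pizza", cheese_N="Käse",
    fish_N="Fisch", fresh_A="frisch", warm_A="warm", italian_A="italienisch",
    expensive_A="teuer", delicious_A="köstlich", boring_A="langweilig",
)

if __name__ == "__main__":
    print(is_complete(asdict(LEX_FOOD_GER)))  # True
    print(sorted(missing_opers({"wine_N": "Wein"})))
```

Constructing LexFood with only some fields raises a TypeError, which loosely corresponds to GF rejecting an instance that leaves an oper undefined.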

In this interface, only lexical items are declared. In general, an interface can declare any opers, including types as well as functions. The Syntax interface is an example of this.

Here is the German instance of the interface:

instance LexFoodGer of LexFood = open SyntaxGer, ParadigmsGer in {
  oper
    wine_N = mkN "Wein" ;
    beer_N = mkN "Bier" "Biere" neuter ;
    pizza_N = mkN "Pizza" "Pizzen" feminine ;
    cheese_N = mkN "Käse" "Käse" masculine ;
    fish_N = mkN "Fisch" ;
    fresh_A = mkA "frisch" ;
    warm_A = mkA "warm" "wärmer" "wärmste" ;
    italian_A = mkA "italienisch" ;
    expensive_A = mkA "teuer" ;
    delicious_A = mkA "köstlich" ;
    boring_A = mkA "langweilig" ;
}

Just to complete the picture, we repeat the German functor instantiation for FoodI, this time with a path directive that makes it compilable.

--# -path=.:present:prelude

concrete FoodGer of Food = FoodI with
  (Syntax = SyntaxGer),
  (LexFood = LexFoodGer) ;

Exercise. Compile and test FoodGer.

Exercise. Refactor FoodFre into a functor instantiation.

Once we have an application grammar defined by using a functor, adding a new language is simple. Just two modules need to be written: a domain lexicon instance and a functor instantiation.

The functor instantiation is completely mechanical to write. Here is one for Finnish:

--# -path=.:present:prelude

concrete FoodFin of Food = FoodI with
  (Syntax = SyntaxFin),
  (LexFood = LexFoodFin) ;

The domain lexicon instance requires some knowledge of the words of the language: what words are used for which concepts, how the words are inflected, plus features such as genders. Here is a lexicon instance for Finnish:

instance LexFoodFin of LexFood = open SyntaxFin, ParadigmsFin in {
  oper
    wine_N = mkN "viini" ;
    beer_N = mkN "olut" ;
    pizza_N = mkN "pizza" ;
    cheese_N = mkN "juusto" ;
    fish_N = mkN "kala" ;
    fresh_A = mkA "tuore" ;
    warm_A = mkA "lämmin" ;
    italian_A = mkA "italialainen" ;
    expensive_A = mkA "kallis" ;
    delicious_A = mkA "herkullinen" ;
    boring_A = mkA "tylsä" ;
}

Exercise. Instantiate the functor FoodI to some language of your choice.

One purpose of the resource grammars was stated to be a division of labour between linguists and application grammarians. We can now reflect on what this means more precisely by asking what skills are required of grammarians working on different components.

Building a GF application starts from the abstract syntax. Writing an abstract syntax requires

If the concrete syntax is written by means of a functor, the programmer has to decide which parts of the implementation go into the interface and which parts are shared in the functor. This requires

Instantiating a ready-made functor to a new language is less demanding. It requires essentially

Notice that none of these tasks requires the use of GF records, tables, or parameters. Thus only a small fragment of GF is needed; the rest of GF is only relevant for those who write the libraries.

Of course, grammar writing is not always a straightforward use of libraries. For example, GF can also be used for languages other than those in the libraries, both natural and formal ones. Unfortunately, knowledge of records and tables may also be needed to understand GF's error messages.

Exercise. Design a small grammar that can be used for controlling an MP3 player. The grammar should be able to recognize commands such as play this song, with the following variations:

The implementation goes in the following phases:

A functor implementation using the resource Syntax interface works as long as all concepts are expressed by using the same structures in all languages. If this is not the case, the deviant linearization can be made into a parameter and moved to the domain lexicon interface.

Let us take a slightly contrived example: assume that English has no word for Pizza, but has to use the paraphrase Italian pie. This paraphrase is no longer a noun N, but a complex phrase in the category CN. An obvious way to solve this problem is to change the interface LexFood so that the constant declared for Pizza gets a new type:

oper pizza_CN : CN ;
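The effect of this change can be sketched in the same toy style: the shared functor code simply uses pizza_CN, and each language's lexicon decides how to build that phrase. This is a hypothetical Python model with strings standing in for CN values; mk_cn_noun and mk_cn_adj only imitate the shape of the resource constructors mkCN : N -> CN and mkCN : AP -> CN -> CN, and LEX_ENG, LEX_FIN and lin_pizza are illustrative names.

```python
# Toy stand-ins for two resource constructors (strings instead of real CN values).
def mk_cn_noun(noun: str) -> str:
    """Imitates mkCN : N -> CN: a bare noun becomes a common-noun phrase."""
    return noun


def mk_cn_adj(adj: str, cn: str) -> str:
    """Imitates mkCN : AP -> CN -> CN, with English adjective-noun order."""
    return f"{adj} {cn}"


# With pizza_CN in the lexicon interface, each instance builds the phrase its own way:
# English uses the paraphrase, Finnish a single noun.
LEX_ENG = {"pizza_CN": mk_cn_adj("Italian", mk_cn_noun("pie"))}
LEX_FIN = {"pizza_CN": mk_cn_noun("pizza")}


def lin_pizza(lex: dict) -> str:
    """Shared functor code: Pizza is just pizza_CN, with no language-specific structure."""
    return lex["pizza_CN"]


if __name__ == "__main__":
    print(lin_pizza(LEX_ENG))  # Italian pie
    print(lin_pizza(LEX_FIN))  # pizza
```

The functor stays language-independent because the structural difference now lives in the lexicon records rather than in the shared code.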