This tutorial gives a hands-on introduction to grammar writing in GF. It has been written for all programmers who want to learn to write grammars in GF. It will go through the programming concepts of GF, and also explain, without presupposing them, the main ingredients of GF: linguistics, functional programming, and type theory. This knowledge will be introduced as a part of grammar writing practice. Thus the tutorial should be accessible to anyone who has some previous experience from any programming language; the basics of using computers are also presupposed, e.g. the use of text editors and the management of files.

We start in the second chapter by building a "Hello World" grammar, which covers greetings in three languages: English (hello world), Finnish (terve maailma), and Italian (ciao mondo). This multilingual grammar is based on the most central idea of GF: the distinction between abstract syntax (the logical structure) and concrete syntax (the sequence of words).

From the "Hello World" example, we proceed in the third chapter to a larger grammar for the domain of food. In this grammar, you can say things like

formaggio italiano

vino delizioso, vini deliziosi, pizza deliziosa, pizze deliziose

The complete description of morphology and syntax in natural languages is in GF preferably left to the resource grammar library. Its use is therefore an important part of GF programming, and it is covered in the fifth chapter. How to contribute to resource grammars as an author will only be covered in Part III; however, the tutorial does go through all the programming concepts of GF, including those involved in resource grammars.

In addition to multilinguality, semantics is an important aspect of GF grammars. The "purely linguistic" aspects (morphology and syntax) belong to the concrete syntax part of GF, whereas semantics is expressed in the abstract syntax. After the presentation of concrete syntax constructs, we proceed in the sixth chapter to the enrichment of abstract syntax with dependent types, variable bindings, and semantic definitions. the seventh chapter concludes the tutorial by technical tips for implementing formal languages. It will also illustrate the close relation between GF grammars and compilers by actually implementing a small compiler from C-like statements and expressions to machine code similar to Java Virtual Machine.

English and Italian are used as example languages in many grammars. Of course, we will not presuppose that the reader knows any Italian. We have chosen Italian because it has a rich structure that illustrates very well the capacities of GF. Moreover, even those readers who don't know Italian, will find many of its words familiar, due to the Latin heritage. The exercises will encourage the reader to port the examples to other languages as well; in particular, it should be instructive for the reader to look at her own native language from the point of view of writing a grammar implementation.

To learn how to write GF grammars is not the only goal of this tutorial. We will also explain the most important commands of the GF system, mostly in passing. With these commands, simple application programs such as translation and quiz systems, can be built simply by writing scripts for the GF system. More complicated applications, such as natural-language interfaces and dialogue systems, moreover require programming in some general-purpose language; such applications are covered in the eighth chapter.

In this chapter, we will introduce the GF system and write the first GF grammar, a "Hello World" grammar. While extremely small, this grammar already illustrates how GF can be used for the tasks of translation and multilingual generation.

We use the term GF for three different things:

The relation between these things is obvious: the GF system is an implementation of the GF programming language, which in turn is built on the ideas of the GF theory. The main focus of this book is on the GF programming language. We learn how grammars are written in this language. At the same time, we learn the way of thinking in the GF theory. To make this all useful and fun, and to encourage experimenting, we make the grammars run on a computer by using the GF system.

A GF program is called a grammar. A grammar is, traditionally, a definition of a language. From this definition, different language processing components can be derived:

A GF grammar is thus a declarative program from which these procedures can be automatically derived. In general, a GF grammar is multilingual: it defines many languages and translations between them.

The GF system is open-source free software, which can be downloaded via the GF Homepage:

gf.digitalgrammars.com

In particular, many of the examples in this book are included in the

subdirectory examples/tutorial of the source distribution package.

This directory is also available

online.

If you want to compile GF from source, you need a Haskell compiler. To compile the interactive editor, you also need a Java compilers. But normally you don't have to compile anything yourself, and you definitely don't need to know Haskell or Java to use GF.

We are assuming the availability of a Unix shell. Linux and Mac OS X users have it automatically, the latter under the name "terminal". Windows users are recommended to install Cywgin, the free Unix shell for Windows.

To start the GF system, assuming you have installed it, just type

gf in the Unix (or Cygwin) shell:

% gf

You will see GF's welcome message and the prompt >.

The command

> help

will give you a list of available commands.

As a common convention in this book, we will use

% as a prompt that marks system commands

> as a prompt that marks GF commands

Thus you should not type these prompts, but only the characters that follow them.

The tradition in programming language tutorials is to start with a program that prints "Hello World" on the terminal. GF should be no exception. But our program has features that distinguish it from most "Hello World" programs:

A GF program, in general, is a multilingual grammar. Its main parts are

The abstract syntax defines, in a language-independent way, what meanings can be expressed in the grammar. In the "Hello World" grammar we want to express Greetings, where we greet a Recipient, which can be World or Mum or Friends. Here is the entire GF code for the abstract syntax:

-- a "Hello World" grammar

abstract Hello = {

flags startcat = Greeting ;

cat Greeting ; Recipient ;

fun

Hello : Recipient -> Greeting ;

World, Mum, Friends : Recipient ;

}

The code has the following parts:

Hello

Greeting is the

main category, i.e. the one in which parsing and generation are

performed by default

Greeting and Recipient

are categories, i.e. types of meanings

Hello constructing a greeting from a recipient,

as well as the three possible recipients

A concrete syntax defines a mapping from the abstract meanings to their expressions in a language. We first give an English concrete syntax:

concrete HelloEng of Hello = {

lincat Greeting, Recipient = {s : Str} ;

lin

Hello recip = {s = "hello" ++ recip.s} ;

World = {s = "world"} ;

Mum = {s = "mum"} ;

Friends = {s = "friends"} ;

}

The major parts of this code are:

Hello, itself named HelloEng

Greeting and Recipient are records with a string s

Hello greeting

has a function telling that the word hello is prefixed to the string

s contained in the record recip

To make the grammar truly multilingual, we add a Finnish and an Italian concrete syntax:

concrete HelloFin of Hello = {

lincat Greeting, Recipient = {s : Str} ;

lin

Hello recip = {s = "terve" ++ recip.s} ;

World = {s = "maailma"} ;

Mum = {s = "äiti"} ;

Friends = {s = "ystävät"} ;

}

concrete HelloIta of Hello = {

lincat Greeting, Recipient = {s : Str} ;

lin

Hello recip = {s = "ciao" ++ recip.s} ;

World = {s = "mondo"} ;

Mum = {s = "mamma"} ;

Friends = {s = "amici"} ;

}

Now we have a trilingual grammar usable for translation and many other tasks, which we will now start experimenting with.

In order to compile the grammar in GF, each of the four modules

has to be put into a file named Modulename.gf:

Hello.gf HelloEng.gf HelloFin.gf HelloIta.gf

The first GF command needed when using a grammar is to import it.

The command has a long name, import, and a short name, i.

When you have started GF (by the shell command gf), you can thus type either

> import HelloEng.gf

or

> i HelloEng.gf

to get the same effect. In general, all GF commands have a long and a short name; short names are convenient when typing commands by hand, whereas long command names are more readable in scripts, i.e. files that include sequences of commands.

The effect of import is that the GF system compiles your grammar

into an internal representation, and shows a new prompt when it is ready.

It will also show how much CPU time was consumed:

> i HelloEng.gf

- compiling Hello.gf... wrote file Hello.gfc 8 msec

- compiling HelloEng.gf... wrote file HelloEng.gfc 12 msec

12 msec

>

You can now use GF for parsing:

> parse "hello world"

Hello World

The parse (= p) command takes a string

(in double quotes) and returns an abstract syntax tree --- the meaning

of the string as defined in the abstract syntax.

A tree is, in general, something easier than a string

for a machine to understand and to process further, although this

is not so obvious in this simple grammar. The syntax for trees is that

of function application, which in GF is written

function argument1 ... argumentn

Parentheses are only needed for grouping. For instance, f (a b) is

f applied to the application of a to b. This is different

from f a b, which is f applied to a and b.

Strings that return a tree when parsed do so in virtue of the grammar you imported. Try to parse something that is not in grammar, and you will fail

> parse "hello dad"

Unknown words: dad

> parse "world hello"

no tree found

In the first example, the failure is caused by an unknown word. In the second example, the combination of words is ungrammatical.

In addition to parsing, you can also use GF for linearization

(linearize = l). This is the inverse of

parsing, taking trees into strings:

> linearize Hello World

hello world

What is the use of this? Typically not that you type in a tree at the GF prompt. The utility of linearization comes from the fact that you can obtain a tree from somewhere else --- for instance, from a parser. A prime example of this is translation: you parse with one concrete syntax and linearize with another. Let us now do this by first importing the Italian grammar:

> import HelloIta.gf

We can now parse with HelloEng and pipe the result

into linearizing with HelloIta:

> parse -lang=HelloEng "hello mum" | linearize -lang=HelloIta

ciao mamma

Notice that, since there are now two concrete syntaxes read into the system, the commands use a language flag to indicate which concrete syntax is used in each operation. If no language flag is given, the last-imported language is applied.

To conclude the translation exercise, we import the Finnish grammar and pipe English parsing into multilingual generation:

> parse -lang=HelloEng "hello friends" | linearize -multi

terve ystävät

ciao amici

hello friends

Exercise. Test the parsing and translation examples shown above, as well as some other examples, in different combinations of languages.

Exercise. Extend the grammar Hello.gf and some of the

concrete syntaxes by five new recipients and one new greeting

form.

Exercise. Add a concrete syntax for some other languages you might know.

Exercise. Add a pair of greetings that are expressed in one and the same way in one language and in two different ways in another. For instance, good morning and good afternoon in English are both expressed as buongiorno in Italian. Test what happens when you translate buongiorno to English in GF.

Exercise. Inject errors in the Hello grammars, for example, leave out

some line, omit a variable in a lin rule, or change the name in one occurrence

of a variable. Inspect the error messages generated by GF.

A normal "hello world" program written in C is executable from the

Unix shell and print its output on the terminal. This is possible in GF

as well, by using the gf program in a Unix pipe. Invoking gf

can be made with grammar names as arguments,

% gf HelloEng.gf HelloFin.gf HelloIta.gf

which has the same effect as opening gf and then importing the

grammars. A command can be send to this gf state by piping it from

Unix's echo command:

% echo "l -multi Hello Wordl" | gf HelloEng.gf HelloFin.gf HelloIta.gf

which will execute the command and then quit. Alternatively, one can write a script, a file containing the lines

import HelloEng.gf

import HelloFin.gf

import HelloIta.gf

linearize -multi Hello World

If we name this script hello.gfs, we can do

$ gf -batch -s <hello.gfs s

ciao mondo

terve maailma

hello world

The options -batch and -s ("silent") remove prompts, CPU time,

and other messages. Writing GF scripts and Unix shell scripts that call

GF is the simplest way to build application programs that use GF grammars.

In the eighth chapter, we will see how to build stand-alone programs that don't need

the GF system to run.

Exercise. (For Unix hackers.) Write a GF application that reads an English string from the standard input and writes an Italian translation to the output.

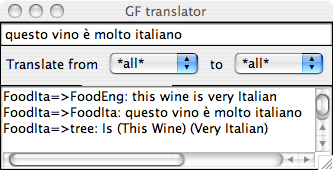

Now we have built our first multilingual grammar and seen the basic functionalities of GF: parsing and linearization. We have tested these functionalities inside the GF program. In the forthcoming chapters, we will build larger grammars and can then get more out of these functionalities. But we will also introduce new ones:

The usefulness of GF would be quite limited if grammars were usable only inside the GF system. In the eighth chapter, we will see other ways of using grammars:

All GF functionalities, both those inside the GF program and those

ported to other environments,

are of course already applicable to the simplest of grammars,

such as the Hello grammars presented above. But the main focus

of this tutorial will be on grammar writing. Thus we will show

how larger and more expressive grammars can be built by using

the constructs of the GF programming language, before entering the

applications.

As the last section of each chapter, we will give a summary of the GF language features covered in the chapter. The presentation is rather technical and intended as a reference for later use, rather than to be read at once. The summaries may cover some new features, which complement the discussion in the main chapter.

A GF grammar consists of modules, into which judgements are grouped. The most important module forms are

abstract A = {...} , abstract syntax A with judgements in

the module body {...}.

concrete C of A = {...}, concrete syntax C of the

abstract syntax A, with judgements in the module body {...}.

Each module is written in a file named Modulename.gf.

Rules in a module body are called judgements. Keywords such as

fun and lin are used for distinguishing between

judgement forms. Here is a summary of the most important

judgement forms, which we have considered by now:

| form | reading | module type | |

|---|---|---|---|

cat C |

C is a category | abstract | |

fun f : A |

f is a function of type A | abstract | |

lincat C = T |

category C has linearization type T | concrete | |

lin f x1 ... xn = t |

function f has linearization t | concrete | |

flags p = v |

flag p has value v | any | |

Both abstract and concrete modules may moreover contain comments of the forms

-- anything until a newline

{- anything except hyphen followed by closing brace -}

Judgements are terminated by semicolons. Shorthands permit the sharing of the keyword in subsequent judgements,

cat C ; D ; === cat C ; cat D ;

and of the right-hand-side in subsequent judgements of the same form

fun f, g : A ; === fun f : A ; g : A ;

We will use the symbol === to indicate syntactic sugar when

speaking about GF. Thus it is not a symbol of the GF language.

Each judgement declares a name, which is an identifier.

An identifier is a letter followed by a sequence of letters, digits, and

characters ' or _. Each identifier can only be

defined once in the same module (that is, as next to the judgement keyword;

local variables such as those in lin judgemenrs can be

reused in other judgements).

Names are in scope in the rest of the module, i.e. usable in the other judgements of the module (subject to type restrictions, of course). Also the name of the module is an identifier in scope.

The order of judgements in a module is free. In particular, an identifier need not be declared before it is used.

A type in an abstract syntax are either a basic type,

i.e. one introduced in a cat judgement, or a

function type of the form

A1 -> ... -> An -> A

where each of A1, ..., An, A is a basic type.

The last type in the arrow-separated sequence

is the value type of the function type, and the earlier types are

its argument types.

In a concrete syntax, the available types include

Str

{ r1 : T1 ; ... ; rn : Tn }

Token lists are often briefly called strings.

Each semi-colon separated part in a record type is called a field. The identifier introduced by the left-hand-side of a field is called a label.

A term in abstract syntax is a function application of form

f a1 ... an

where f is a function declared in a fun judgement and a1 ... an

are terms. These terms are also called abstract syntax trees, or just

trees.

The tree above is well-typed and has the type A, if

f : A1 -> ... -> An -> A

and each ai has type an.

A term used in concrete syntax has one the forms

"foo", of type Str

"foo" ++ "bar",

{ r1 = t1 ; ... ; rn = Tn },

of type { r1 : R1 ; ... ; rn : Rn }

t.r of a term t that has a record type,

with the record label r; the projection has the corresponding record

field type

x bound by the left-hand-side of a lin rule,

of the corresponding linearization type

Each quoted string is treated as one token, and strings concatenated by

++ are treated as separate tokens. Tokens are, by default, written with

a space in between. This behaviour can be changed by lexer and unlexer

flags, as will be explained later "Rseclexing. Therefore it is usually

not correct to have a space in a token. Writing

"hello world"

in a grammar would give the parser the task to find a token with a space

in it, rather than two tokens "hello" and "world". If the latter is

what is meant, it is possible to use the shorthand

["hello world"] === "hello" ++ "world"

The empty string is denoted by [] or, equivalently, `` or ``[].

An important functionality of the GF system is static type checking. This means that the grammars are controlled to be well-formed, so that all run-time errors are eliminated. The main type checking principles are the following:

lincat of each cat and a lin

for each fun in the abstract syntax that it is "of"

lin rules are type checked with respect to the lincat and fun

rules

In this chapter, we will write a grammar that has much more structure than

the Hello grammar. We will look at how the abstract syntax

is divided into suitable categories, and how infinitely many

phrases can be generated by using recursive rules. We will also

introduce modularity by showing how a grammar can be

divided into modules, and how functional programming

can be used to share code in and among modules.

We will write a grammar that defines a set of phrases usable for speaking about food:

Phrase

Phrase can be built by assigning a Quality to an Item

(e.g. this cheese is Italian)

Item are build from a Kind by prefixing this or that

(e.g. this wine)

Kind is either atomic (e.g. cheese), or formed

qualifying a given Kind with a Quality (e.g. Italian cheese)

Quality is either atomic (e.g. Italian,

or built by modifying a given Quality with the word very (e.g. very warm)

These verbal descriptions can be expressed as the following abstract syntax:

abstract Food = {

flags startcat = Phrase ;

cat

Phrase ; Item ; Kind ; Quality ;

fun

Is : Item -> Quality -> Phrase ;

This, That : Kind -> Item ;

QKind : Quality -> Kind -> Kind ;

Wine, Cheese, Fish : Kind ;

Very : Quality -> Quality ;

Fresh, Warm, Italian, Expensive, Delicious, Boring : Quality ;

}

In this abstract syntax, we can build Phrases such as

Is (This (QKind Delicious (QKind Italian Wine))) (Very (Very Expensive))

In the English concrete syntax, we will want to linearize this into

this delicious Italian wine is very very expensive

The English concrete syntax gives no surprises:

concrete FoodEng of Food = {

lincat

Phrase, Item, Kind, Quality = {s : Str} ;

lin

Is item quality = {s = item.s ++ "is" ++ quality.s} ;

This kind = {s = "this" ++ kind.s} ;

That kind = {s = "that" ++ kind.s} ;

QKind quality kind = {s = quality.s ++ kind.s} ;

Wine = {s = "wine"} ;

Cheese = {s = "cheese"} ;

Fish = {s = "fish"} ;

Very quality = {s = "very" ++ quality.s} ;

Fresh = {s = "fresh"} ;

Warm = {s = "warm"} ;

Italian = {s = "Italian"} ;

Expensive = {s = "expensive"} ;

Delicious = {s = "delicious"} ;

Boring = {s = "boring"} ;

}

Let us test how the grammar works in parsing:

> import FoodEng.gf

> parse "this delicious wine is very very Italian"

Is (This (QKind Delicious Wine)) (Very (Very Italian))

We can also try parsing in other categories than the startcat,

by setting the command-line cat flag:

p -cat=Kind "very Italian wine"

QKind (Very Italian) Wine

Exercise. Extend the Food grammar by ten new food kinds and

qualities, and run the parser with new kinds of examples.

Exercise. Add a rule that enables question phrases of the form is this cheese Italian.

Exercise. Enable the optional prefixing of phrases with the words "excuse me but". Do this in such a way that the prefix can occur at most once.

When we have a grammar above a trivial size, especially a recursive one, we need more efficient ways of testing it than just by parsing sentences that happen to come to our minds. One way to do this is based on automatic generation, which can be either random generation or exhaustive generation.

Random generation (generate_random = gr) is an operation that

builds a random tree in accordance with an abstract syntax:

> generate_random

Is (This (QKind Italian Fish)) Fresh

By using a pipe, random generation can be fed into linearization:

> generate_random | linearize

this Italian fish is fresh

Random generation is a good way to test a grammar. It can also give results that are surprising, which shows how fast we lose intuition when we write complex grammars.

By using the number flag, several trees can be generated

in one command:

> gr -number=10 | l

that wine is boring

that fresh cheese is fresh

that cheese is very boring

this cheese is Italian

that expensive cheese is expensive

that fish is fresh

that wine is very Italian

this wine is Italian

this cheese is boring

this fish is boring

To generate all phrases that a grammar can produce,

GF provides the command generate_trees = gt.

> generate_trees | l

that cheese is very Italian

that cheese is very boring

that cheese is very delicious

that cheese is very expensive

that cheese is very fresh

...

this wine is expensive

this wine is fresh

this wine is warm

We get quite a few trees but not all of them: only up to a given

depth of trees. The default depth is 3; the depth can be

set by using the depth flag:

> generate_trees -depth=5 | l

Other options to the generation commands (like all commands) can be seen

by GF's help = h command:

> help gr

> help gt

Exercise. If the command gt generated all

trees in your grammar, it would never terminate. Why?

Exercise. Measure how many trees the grammar gives with depths 4 and 5,

respectively. Hint. You can

use the Unix word count command wc to count lines.

A pipe of GF commands can have any length, but the "output type" (either string or tree) of one command must always match the "input type" of the next command, in order for the result to make sense.

The intermediate results in a pipe can be observed by putting the

tracing option -tr to each command whose output you

want to see:

> gr -tr | l -tr | p

Is (This Cheese) Boring

this cheese is boring

Is (This Cheese) Boring

This facility is useful for test purposes: the pipe above can show if a grammar is ambiguous, i.e. contains strings that can be parsed in more than one way.

Exercise. Extend the Food grammar so that it produces ambiguous

strings, and try out the ambiguity test.

To save the outputs of GF commands into a file, you can

pipe it to the write_file = wf command,

> gr -number=10 | linearize | write_file exx.tmp

You can read the file back to GF with the

read_file = rf command,

> read_file exx.tmp | parse -lines

Notice the flag -lines given to the parsing

command. This flag tells GF to parse each line of

the file separately. Without the flag, the grammar could

not recognize the string in the file, because it is not

a sentence but a sequence of ten sentences.

Files with examples can be used for regression testing of grammars. The most systematic way to do this is by generating treebanks; see here.

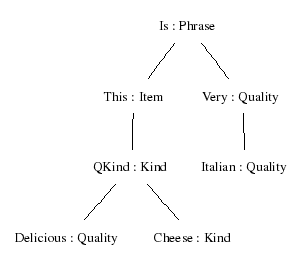

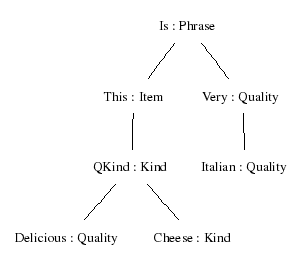

The gibberish code with parentheses returned by the parser does not

look like trees. Why is it called so? From the abstract mathematical

point of view, trees are a data structure that

represents nesting: trees are branching entities, and the branches

are themselves trees. Parentheses give a linear representation of trees,

useful for the computer. But the human eye may prefer to see a visualization;

for this purpose, GF provides the command visualize_tree = vt, to which

parsing (and any other tree-producing command) can be piped:

> parse "this delicious cheese is very Italian" | visualize_tree

This command uses the programs Graphviz and Ghostview, which you might not have, but which are freely available on the web.

Alternatively, you can print the tree into a file

e.g. a .png file that

can be be viewed with e.g. an HTML browser and also included in an

HTML document. You can do this

by saving the file grphtmp.dot, which the command vt

produces. Then you can process this file with the dot

program (from the Graphviz package).

% dot -Tpng grphtmp.dot > mytree.png

If you don't have Ghostview, or want to view graphs in some other way,

you can call dot and a suitable

viewer (e.g. open in Mac) without leaving GF, by using

a system command: ! followed by a Unix command,

> ! dot -Tpng grphtmp.dot > mytree.png

> ! open mytree.png

Another form of system commands are those that receive arguments from

GF pipes. The escape symbol

is then ?.

> generate_trees | ? wc

Exercise. (Exercise drom 3.3.1 revisited.)

Measure how many trees the grammar FoodEng gives with depths 4 and 5,

respectively. Use the Unix word count command wc to count lines, and

a pipe from a GF command into a Unix command.

We write the Italian grammar in a straightforward way, by replacing English words with their dictionary equivalents:

concrete FoodIta of Food = {

lincat

Phrase, Item, Kind, Quality = {s : Str} ;

lin

Is item quality = {s = item.s ++ "è" ++ quality.s} ;

This kind = {s = "questo" ++ kind.s} ;

That kind = {s = "quello" ++ kind.s} ;

QKind quality kind = {s = kind.s ++ quality.s} ;

Wine = {s = "vino"} ;

Cheese = {s = "formaggio"} ;

Fish = {s = "pesce"} ;

Very quality = {s = "molto" ++ quality.s} ;

Fresh = {s = "fresco"} ;

Warm = {s = "caldo"} ;

Italian = {s = "italiano"} ;

Expensive = {s = "caro"} ;

Delicious = {s = "delizioso"} ;

Boring = {s = "noioso"} ;

}

An alert reader, or one who already knows Italian, may notice one point in which the change is more substantial than just replacement of words: the order of a quality and the kind it modifies in

QKind quality kind = {s = kind.s ++ quality.s} ;

Thus Italian says vino italiano for Italian wine. (Some Italian adjectives

are put before the noun. This distinction can be controlled by parameters, which

are introduced in the fourth chapter.)

Exercise. Write a concrete syntax of Food for some other language.

You will probably end up with grammatically incorrect linearizations --- but don't

worry about this yet.

Exercise. If you have written Food for German, Swedish, or some

other language, test with random or exhaustive generation what constructs

come out incorrect, and prepare a list of those ones that cannot be helped

with the currently available fragment of GF. You can return to your list

after having worked out the fourth chapter.

Sometimes there are alternative ways to define a concrete syntax.

For instance, if we use the Food grammars in a restaurant phrase

book, we may want to accept different words for expressing the quality

"delicious" ---- and different languages can differ in how many

such words they have. Then we don't want to put the distinctions into

the abstract syntax, but into concrete syntaxes. Such semantically

neutral distinctions are known as free variation in linguistics.

The variants construct of GF expresses free variation. For example,

lin Delicious = {s = variants {"delicious" ; "exquisit" ; "tasty"}} ;

says that Delicious can be linearized to any of delicious,

exquisit, and tasty. As a consequence, both these words result in the

tree Delicious when parsed. By default, the linearize command

shows only the first variant from each variants list; to see them

all, the option -all can be used:

> p "this exquisit wine is delicious" | l -all

this delicious wine is delicious

this delicious wine is exquisit

...

In linguistics, it is well known that free variation is almost non-existing, if all aspects of expressions are taken into account, including style. Therefore, free variation should not be used in grammars that are meant as libraries for other grammars, as in the fifth chapter. However, in a specific application, free variation is an excellent way to scale up the parser to variations in user input that make no difference in the semantic treatment.

An example that clearly illustrates these points is the

English negation. If we added to the Food grammar the negation

of a quality, we could accept both contracted and uncontracted not:

fun IsNot : Item -> Quality -> Phrase ;

lin IsNot item qual =

{s = item.s ++ variants {"isn't" ; ["is not"]} ++ qual.s} ;

Both forms are likely to occur in user input. Since there is no corresponding contrast in Italian, we do not want to put the distinction in the abstract syntax. Yet there is a stylistic difference between these two forms. In particular, if we are doing generation rather than parsing, we will want to choose the one or the other depending on the kind of language we want to generate.

A limiting case of free variation is an empty variant list

variants {}

It can be used e.g. if a word lacks a certain inflection form.

Free variation works for all types in concrete syntax; all terms in

a variants list must be of the same type.

Exercise. Modify FoodIta in such a way that a quality can

be assigned to an item by using two different word orders, exemplified

by questo vino è delizioso and è delizioso questo vino

(a real variation in Italian),

and that it is impossible to say that something is boring

(a rather contrived example).

A multilingual treebank is a set of trees with their translations in different languages:

> gr -number=2 | tree_bank

Is (That Cheese) (Very Boring)

quello formaggio è molto noioso

that cheese is very boring

Is (That Cheese) Fresh

quello formaggio è fresco

that cheese is fresh

There is also an XML format for treebanks and a set of commands

suitable for regression testing; see help tb for more details.

If translation is what you want to do with a set of grammars, a convenient

way to do it is to open a translation_session = ts. In this session,

you can translate between all the languages that are in scope.

A dot . terminates the translation session.

> ts

trans> that very warm cheese is boring

quello formaggio molto caldo è noioso

that very warm cheese is boring

trans> questo vino molto italiano è molto delizioso

questo vino molto italiano è molto delizioso

this very Italian wine is very delicious

trans> .

>

This is a simple language exercise that can be automatically

generated from a multilingual grammar. The system generates a set of

random sentences, displays them in one language, and checks the user's

answer given in another language. The command translation_quiz = tq

makes this in a subshell of GF.

> translation_quiz FoodEng FoodIta

Welcome to GF Translation Quiz.

The quiz is over when you have done at least 10 examples

with at least 75 % success.

You can interrupt the quiz by entering a line consisting of a dot ('.').

this fish is warm

questo pesce è caldo

> Yes.

Score 1/1

this cheese is Italian

questo formaggio è noioso

> No, not questo formaggio è noioso, but

questo formaggio è italiano

Score 1/2

this fish is expensive

You can also generate a list of translation exercises and save it in a

file for later use, by the command translation_list = tl

> translation_list -number=25 FoodEng FoodIta | write_file transl.txt

The number flag gives the number of sentences generated.

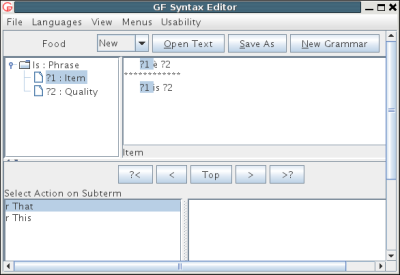

Any multilingual grammar can be used in the graphical syntax editor, which is

opened by the shell

command gfeditor followed by the names of the grammar files.

Thus

% gfeditor FoodEng.gf FoodIta.gf

opens the editor for the two Food grammars.

The editor supports commands for manipulating an abstract syntax tree.

The process is started by choosing a category from the "New" menu.

Choosing Phrase creates a new tree of type Phrase. A new tree

is in general completely unknown: it consists of a metavariable

?1. However, since the category Phrase in Food has

only one possible constructor, Is, the tree is readily

given the form Is ?1 ?2. Here is what the editor looks like at

this stage:

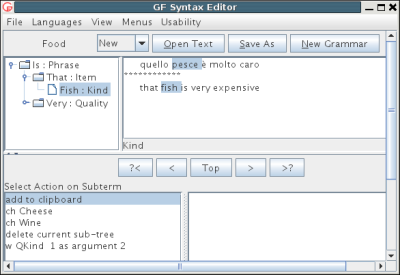

Editing goes on by refinements, i.e. choices of constructors from the menu, until no metavariables remain. Here is a tree resulting from the current editing session:

Editing can be continued even when the tree is finished. The user can shift the focus to some of the subtrees by clicking at it or the corresponding part of a linearization. In the picture, the focus is on "fish". Since there are no metavariables, the menu shows no refinements, but some other possible actions:

QKind ? Fish, where the quality can be given in a later refinement

In addition to menu-based editing, the tool supports refinement by parsing, which is accessible by middle-clicking in the tree or in the linearization field.

Exercise. Construct the sentence

this very expensive cheese is very very delicious

and its Italian translation by using gfeditor.

Readers not familar with context-free grammars, also known as BNF grammars, can skip this section. Those that are familar with them will find here the exact relation between GF and context-free grammars. We will moreover show how the BNF format can be used as input to the GF program; it is often more concise than GF proper, but also more restricted in expressive power.

The grammar FoodEng could be written in a BNF format as follows:

Is. Phrase ::= Item "is" Quality ;

That. Item ::= "that" Kind ;

This. Item ::= "this" Kind ;

QKind. Kind ::= Quality Kind ;

Cheese. Kind ::= "cheese" ;

Fish. Kind ::= "fish" ;

Wine. Kind ::= "wine" ;

Italian. Quality ::= "Italian" ;

Boring. Quality ::= "boring" ;

Delicious. Quality ::= "delicious" ;

Expensive. Quality ::= "expensive" ;

Fresh. Quality ::= "fresh" ;

Very. Quality ::= "very" Quality ;

Warm. Quality ::= "warm" ;

In this format, each rule is prefixed by a label that gives

the constructor function GF gives in its fun rules. In fact,

each context-free rule is a fusion of a fun and a lin rule:

it states simultaneously that

fun Is : Item -> Quality -> Phrase

lin Is item quality = {s = item.s ++ "is" ++ quality.s}

The translation from BNF to GF described above is in fact used in

the GF system to convert BNF grammars into GF. BNF files are recognized

by the file name suffix .cf; thus the grammar above can be

put into a file named food.cf and read into GF by

> import food.cf

Even though we managed to write FoodEng in the context-free format,

we cannot do this for GF grammars in general. It is enough to try this

with FoodIta at the same time as FoodEng,

we lose an important aspect of multilinguality:

that the order of constituents is defined only in concrete syntax.

Thus we could not use context-free FoodEng and FoodIta in a multilingual

grammar that supports translation via common abstract syntax: the

qualification function QKind has different types in the two

grammars.

In general terms, the separation of concrete and abstract syntax allows three deviations from context-free grammar:

The third property is the one that definitely shows that GF is

stronger than context-free: GF can define the copy language

{x x | x <- (a|b)*}, which is known not to be context-free.

The other properties have more to do with the kind of trees that

the grammar can associate with strings: permutation is important

in multilingual grammars, and suppression is exploited in grammars

where trees carry some hidden semantic information (see the sixth chapter

below).

Of course, context-free grammars are also restricted from the grammar engineering point of view. They give no support to modules, functions, and parameters, which are so central for the productivity of GF. Despite the initial conciseness of context-free grammars, GF can easily produce grammars where 30 lines of GF code would need hundreds of lines of context-free grammar code to produce; see exercises here and here.

Exercise. GF can also interpret unlabelled BNF grammars, by creating labels automatically. The right-hand sides of BNF rules can moreover be disjunctions, e.g.

Quality ::= "fresh" | "Italian" | "very" Quality ;

Experiment with this format in GF, possibly with a grammar that you import from some other source, such as a programming language document.

Exercise. Define the copy language {x x | x <- (a|b)*} in GF.

GF uses suffixes to recognize different file formats. The most important ones are:

.gf

.gfc

When you import FoodEng.gf, you see the target files being

generated:

> i FoodEng.gf

- compiling Food.gf... wrote file Food.gfc 16 msec

- compiling FoodEng.gf... wrote file FoodEng.gfc 20 msec

You also see that the GF program does not only read the file

FoodEng.gf, but also all other files that it

depends on --- in this case, Food.gf.

For each file that is compiled, a .gfc file

is generated. The GFC format (="GF Canonical") is the

"machine code" of GF, which is faster to process than

GF source files. When reading a module, GF decides whether

to use an existing .gfc file or to generate

a new one, by looking at modification times.

In GF version 3, the gfc format is replaced by the format suffixed

gfo, "GF object".

Exercise. What happens when you import FoodEng.gf for

a second time? Try this in different situations:

empty (e), which clears the memory

of GF.

FoodEng.gf, be it only an added space.

Food.gf.

When writing a grammar, you have to type lots of characters. You have probably done this by the copy-and-paste method, which is a universally available way to avoid repeating work.

However, there is a more elegant way to avoid repeating work than the copy-and-paste method. The golden rule of functional programming says that

A function separates the shared parts of different computations from the changing parts, its arguments, or parameters. In functional programming languages, such as Haskell, it is possible to share much more code with functions than in languages such as C and Java, because of higher-order functions (functions that takes functions as arguments).

GF is a functional programming language, not only in the sense that

the abstract syntax is a system of functions (fun), but also because

functional programming can be used when defining concrete syntax. This is

done by using a new form of judgement, with the keyword oper (for

operation), distinct from fun for the sake of clarity.

Here is a simple example of an operation:

oper ss : Str -> {s : Str} = \x -> {s = x} ;

The operation can be applied to an argument, and GF will compute the application into a value. For instance,

ss "boy" ===> {s = "boy"}

We use the symbol === to indicate how an expression is

computed into a value; this symbol is not a part of GF.

Thus an oper judgement includes the name of the defined operation,

its type, and an expression defining it. As for the syntax of the defining

expression, notice the lambda abstraction form \x -> t of

the function. It reads: function with variable x and function body

t. Any occurrence of x in t is said to be bound in t.

For lambda abstraction with multiple arguments, we have the shorthand

\x,y -> t === \x -> \y -> t

The notation we have used for linearization rules, where variables are bound on the left-hand side, is actually syntactic sugar for abstraction:

lin f x = t === lin f = \x -> t

Operator definitions can be included in a concrete syntax. But they are usually not really tied to a particular set of linearization rules. They should rather be seen as resources usable in many concrete syntaxes.

The resource module type is used to package

oper definitions into reusable resources. Here is

an example, with a handful of operations to manipulate

strings and records.

resource StringOper = {

oper

SS : Type = {s : Str} ;

ss : Str -> SS = \x -> {s = x} ;

cc : SS -> SS -> SS = \x,y -> ss (x.s ++ y.s) ;

prefix : Str -> SS -> SS = \p,x -> ss (p ++ x.s) ;

}

Any number of resource modules can be

opened in a concrete syntax, which

makes definitions contained

in the resource usable in the concrete syntax. Here is

an example, where the resource StringOper is

opened in a new version of FoodEng.

concrete FoodEng of Food = open StringOper in {

lincat

S, Item, Kind, Quality = SS ;

lin

Is item quality = cc item (prefix "is" quality) ;

This k = prefix "this" k ;

That k = prefix "that" k ;

QKind k q = cc k q ;

Wine = ss "wine" ;

Cheese = ss "cheese" ;

Fish = ss "fish" ;

Very = prefix "very" ;

Fresh = ss "fresh" ;

Warm = ss "warm" ;

Italian = ss "Italian" ;

Expensive = ss "expensive" ;

Delicious = ss "delicious" ;

Boring = ss "boring" ;

}

Exercise. Use the same string operations to write FoodIta

more concisely.

GF, like Haskell, permits partial application of functions. An example of this is the rule

lin This k = prefix "this" k ;

which can be written more concisely

lin This = prefix "this" ;

The first form is perhaps more intuitive to write but, once you get used to partial application, you will appreciate its conciseness and elegance. The logic of partial application is known as currying, with a reference to Haskell B. Curry. The idea is that any n-place function can be seen as a 1-place function whose value is an n-1 -place function. Thus

oper prefix : Str -> SS -> SS ;

can be used as a 1-place function that takes a Str into a

function SS -> SS. The expected linearization of This is exactly

a function of such a type, operating on an argument of type Kind

whose linearization is of type SS. Thus we can define the

linearization directly as prefix "this".

An important part of the art of functional programming is to decide the order

of arguments in a function, so that partial application can be used as much

as possible. For instance, of the operation prefix we know that it

will be typically applied to linearization variables with constant strings.

This is the reason to put the Str argument before the SS argument --- not

the prefixity. A postfix function would have exactly the same order of arguments.

Exercise. Define an operation infix analogous to prefix,

such that it allows you to write

lin Is = infix "is" ;

To test a resource module independently, you must import it

with the flag -retain, which tells GF to retain oper definitions

in the memory; the usual behaviour is that oper definitions

are just applied to compile linearization rules

(this is called inlining) and then thrown away.

> import -retain StringOper.gf

The command compute_concrete = cc computes any expression

formed by operations and other GF constructs. For example,

> compute_concrete prefix "in" (ss "addition")

{

s : Str = "in" ++ "addition"

}

The module system of GF makes it possible to write a new module that extends

an old one. The syntax of extension is

shown by the following example. We extend Food into MoreFood by

adding a category of questions and two new functions.

abstract Morefood = Food ** {

cat

Question ;

fun

QIs : Item -> Quality -> Question ;

Pizza : Kind ;

}

Parallel to the abstract syntax, extensions can be built for concrete syntaxes:

concrete MorefoodEng of Morefood = FoodEng ** {

lincat

Question = {s : Str} ;

lin

QIs item quality = {s = "is" ++ item.s ++ quality.s} ;

Pizza = {s = "pizza"} ;

}

The effect of extension is that all of the contents of the extended and extending module are put together. We also say that the new module inherits the contents of the old module.

At the same time as extending a module of the same type, a concrete

syntax module may open resources. Since open takes effect in

the module body and not in the extended module, its logical place

in the module header is after the extend part:

concrete MorefoodIta of Morefood = FoodIta ** open StringOper in {

lincat

Question = SS ;

lin

QIs item quality = ss (item.s ++ "è" ++ quality.s) ;

Pizza = ss "pizza" ;

}

Resource modules can extend other resource modules, in the same way as modules of other types can extend modules of the same type. Thus it is possible to build resource hierarchies.

Specialized vocabularies can be represented as small grammars that only do "one thing" each. For instance, the following are grammars for fruit and mushrooms

abstract Fruit = {

cat Fruit ;

fun Apple, Peach : Fruit ;

}

abstract Mushroom = {

cat Mushroom ;

fun Cep, Agaric : Mushroom ;

}

They can afterwards be combined into bigger grammars by using multiple inheritance, i.e. extension of several grammars at the same time:

abstract Foodmarket = Food, Fruit, Mushroom ** {

fun

FruitKind : Fruit -> Kind ;

MushroomKind : Mushroom -> Kind ;

}

The main advantages with splitting a grammar to modules are

reusability, separate compilation, and division of labour.

Reusability means

that one and the same module can be put into different uses; for instance,

a module with mushroom names might be used in a mycological information system

as well as in a restaurant phrasebook. Separate compilation means that a module

once compiled into .gfc need not be compiled again unless changes have

taken place.

Division of labour means simply that programmers that are experts in

special areas can work on modules belonging to those areas.

Exercise. Refactor Food by taking apart Wine into a special

Drink module.

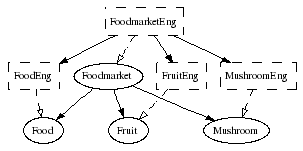

When you have created all the abstract syntaxes and

one set of concrete syntaxes needed for Foodmarket,

your grammar consists of eight GF modules. To see how their

dependences look like, you can use the command

visualize_graph = vg,

> visualize_graph

and the graph will pop up in a separate window:

The graph uses

Just as the visualize_tree = vt command, the freely available tools

Ghostview and Graphviz are needed. As an alternative, you can again print

the graph into a .dot file by using the command print_multi = pm:

> print_multi -printer=graph | write_file Foodmarket.dot

> ! dot -Tpng Foodmarket.dot > Foodmarket.png

The general form of a module is

= (Extends **)? (open Opens in)? Body

abstract, concrete, and resource.

If Moduletype is concrete, the Of-part has the form of A,

where A is the name of an abstract module. Otherwise it is empty.

The name of the module is given by the identifier M.

The optional Extends part is a comma-separated

list of module names, which have to be modules of

the same Moduletype. The contents of these modules are inherited by

M. This means that they are both usable in Body and exported by M,

i.e. inherited when M is inherited and available when M is opened.

(Exception: oper and param judgements are not inherited from

concrete modules.)

The optional Opens part is a comma-separated list of resource module names. The contents of these modules are usable in the Body, but they are not exported.

Opening can be qualified, e.g.

concrete C of A = open (P = Prelude) in ...

This means that the names from Prelude are only available in the form

P.name. This form of qualifying a name is always possible, and it can

be used to resolve name conflicts, which result when the same name is

declared in more than one module that is in scope.

The Body part consists of judgements. The judgement form table #secjment is extended with the following forms:

| form | reading | module type | |

|---|---|---|---|

oper h : T = t |

operation h of type T is defined as t | resource, concrete | |

param P = C1 | ... | Cn |

parameter type P has constructors C1...Cn | resource, concrete | |

The param judgement will be explained in the next chapter.

The type part of an oper judgement can be omitted, if the type can be inferred

by the GF compiler.

oper hello = "hello" ++ "world" ;

As a rule, type inference works for all terms except lambda abstracts.

Lambda abstracts are expressions of the form \x -> t,

where x is a variable bound in the expression t, which is the

body of the lambda abstract. The type of the lambda abstract is

A ->B, where A is the type of the variable x and

B the type of the body t.

For multiple lambda abstractions, there is a shorthand

\x,y -> t === \x -> \y -> t

For lin judgements, there is the shorthand

lin f x = t === lin f = \x -> t

The variants construct of GF can be used to give a list of

concrete syntax terms, of the same type, in free variation. For example,

variants {["does not"] ; "doesn't"}

A limiting case is the empty variant list variants {}.

The .cf file format is used for context-free grammars, which are

always interpretable as GF grammars. Files of this format consist of

rules of the form

.)? Cat ::= RHS ;

The Extended BNF format (EBNF) can also be used, in files suffixed .ebnf.

This format does not allow user-written labels. The right-hand-side of a rule

can contain everything that is possible in the .cf format, but also

optional parts (p ?), sequences (p *) and non-empty sequences (p +).

For example, the phrases in FoodEng could be recognized with the following

EBNF grammar:

Phrase ::=

("this" | "that") Quality* ("wine" | "cheese" | "fish") "is" Quality ;

Quality ::=

("very"* ("fresh" | "warm" | "boring" | "Italian" | "expensive")) ;

The default encoding is iso-latin-1. UTF-8 can be set by the flag coding=utf8

in the grammar. The resource grammar libraries are in iso-latin-1, except Russian

and Arabic, which are in UTF-8. The resources may be changed to UTF-8 in future.

Letters in identifiers must currently be iso-latin-1.

In this chapter, we will introduce the techniques needed for describing the inflection of words, as well as the rules by which correct word forms are selected in syntactic combinations. These techniques are already needed in a very slight extension of the Food grammar of the previous chapter. While explaining how the linguistic problems are solved for English and Italian, we also cover all the language constructs GF has for defining concrete syntax.

It is in principle possible to skip this chapter and go directly to the next, since the use of the GF Resource Grammar library makes it unnecessary to use any more constructs of GF than we have already covered: parameters could be left to library implementors.

Suppose we want to say, with the vocabulary included in

Food.gf, things like

The introduction of plural forms requires two things:

Different languages have different types of inflection and agreement. For instance, Italian has also agreement in gender (masculine vs. feminine). In a multilingual grammar, we want to express such differences between languages in the concrete syntax while ignoring them in the abstract syntax.

To be able to do all this, we need one new judgement form and some new expression forms. We also need to generalize linearization types from strings to more complex types.

Exercise. Make a list of the possible forms that nouns, adjectives, and verbs can have in some languages that you know.

We define the parameter type of number in English by using a new form of judgement:

param Number = Sg | Pl ;

This judgement defines the parameter type Number by listing

its two constructors, Sg and Pl (common shorthands for

singular and plural).

To state that Kind expressions in English have a linearization

depending on number, we replace the linearization type {s : Str}

with a type where the s field is a table depending on number:

lincat Kind = {s : Number => Str} ;

The table type Number => Str is in many respects similar to

a function type (Number -> Str). The main difference is that the

argument type of a table type must always be a parameter type. This means

that the argument-value pairs can be listed in a finite table. The following

example shows such a table:

lin Cheese = {

s = table {

Sg => "cheese" ;

Pl => "cheeses"

}

} ;

The table consists of branches, where a pattern on the

left of the arrow => is assigned a value on the right.

The application of a table to a parameter is done by the selection

operator !, which is computed by pattern matching: it returns

the value from the first branch whose pattern matches the

selection argument. For instance,

table {Sg => "cheese" ; Pl => "cheeses"} ! Pl

===> "cheeses"

As syntactic sugar for table selections, we can define the case expressions, which are common in functional programming and also handy to use in GF.

case e of {...} === table {...} ! e

A parameter type can have any number of constructors, and these can also take arguments from other parameter types. For instance, an accurate type system for English verbs (except be) is

param VerbForm = VPresent Number | VPast | VPastPart | VPresPart ;

This system expresses accurately the fact that only the present tense has number variation. (Agreement also requires variation in person, but this can be defined in syntax rules, by picking the singular form for third person singular subjects and the plural forms for all others). As an example of a table, here are the forms of the verb drink:

table {

VPresent Sg => "drinks" ;

VPresent Pl => "drink" ;

VPast => "drank" ;

VPastPart => "drunk" ;

VPresPart => "drinking"

}

Exercise. In an earlier exercise (previous section),

you made a list of the possible

forms that nouns, adjectives, and verbs can have in some languages that

you know. Now take some of the results and implement them by

using parameter type definitions and tables. Write them into a resource

module, which you can test by using the command compute_concrete.

All English common nouns are inflected for number, most of them in the same way: the plural form is obtained from the singular by adding the ending s. This rule is an example of a paradigm --- a formula telling how a class of words is inflected.

From the GF point of view, a paradigm is a function that takes

a lemma --- also known as a dictionary form or a citation form --- and

returns an inflection

table of desired type. Paradigms are not functions in the sense of the

fun judgements of abstract syntax (which operate on trees and not

on strings), but operations defined in oper judgements.

The following operation defines the regular noun paradigm of English:

oper regNoun : Str -> {s : Number => Str} = \dog -> {

s = table {

Sg => dog ;

Pl => dog + "s"

}

} ;

The gluing operator + tells that

the string held in the variable dog and the ending "s"

are written together to form one token. Thus, for instance,

(regNoun "cheese").s ! Pl ===> "cheese" + "s" ===> "cheeses"

A more complex example are regular verbs:

oper regVerb : Str -> {s : VerbForm => Str} = \talk -> {

s = table {

VPresent Sg => talk + "s" ;

VPresent Pl => talk ;

VPresPart => talk + "ing" ;

_ => talk + "ed"

}

} ;

Notice how a catch-all case for the past tense and the past participle

is expressed by using a wild card pattern _. Here again, pattern matching

tries all patterns in order until it finds a matching pattern;

and it is the wild card that is the first match for both VPast and

VPastPart.

Exercise. Identify cases in which the regNoun paradigm does not

apply in English, and implement some alternative paradigms.

Exercise. Implement some regular paradigms for other languages you have considered in earlier exercises.

We can now enrich the concrete syntax definitions to comprise morphology. This will permit a more radical variation between languages (e.g. English and Italian) than just the use of different words. In general, parameters and linearization types are different in different languages --- but this does not prevent using a the common abstract syntax.

We consider a grammar Foods, which is similar to

Food, with the addition two rules for forming plural items:

fun These, Those : Kind -> Item ;

We also add a noun which in Italian has the feminine case; all nouns in

Food were carefully chosen to be masculine!

fun Pizza : Kind ;

This noun will force us to deal with gender in the Italian grammar, which is what we need for the grammar to scale up for larger applications.

In the English Foods grammar, we need just one type of parameters:

Number as defined above. The phrase-forming rule

fun Is : Item -> Quality -> Phrase ;

is affected by the number because of subject-verb agreement. In English, agreement says that the verb of a sentence must be inflected in the number of the subject. Thus we will linearize

Is (This Pizza) Warm ===> "this pizza is warm"

Is (These Pizza) Warm ===> "these pizzas are warm"

Here it is the copula, i.e. the verb be that is affected. We define the copula as the operation

oper copula : Number -> Str = \n ->

case n of {

Sg => "is" ;

Pl => "are"

} ;

We don't need to inflect the copula for person and tense in this grammar.

The form of the copula in a sentence depends on the

subject of the sentence, i.e. the item

that is qualified. This means that an Item must have such a number to provide.

The obvious way to guarantee this is by including a number field in

the linearization type:

lincat Item = {s : Str ; n : Number} ;

Now we can write precisely the Is rule that expresses agreement:

lin Is item qual = {s = item.s ++ copula item.n ++ qual.s} ;

The copula receives the number that it needs from the subject item.

Let us turn to Item subjects and see how they receive their

numbers. The two rules

fun This, These : Kind -> Item ;

form Items from Kinds by adding determiners, either

this or these. The determiners

require different numbers of their Kind arguments: This

requires the singular (this pizza) and These the plural

(these pizzas). The Kind is the same in both cases: Pizza.

Thus a Kind must have both singular and plural forms.

The obvious way to express this is by using a table:

lincat Kind = {s : Number => Str} ;

The linearization rules for This and These can now be written

lin This kind = {

s = "this" ++ kind.s ! Sg ;

n = Sg

} ;

lin These kind = {

s = "these" ++ kind.s ! Pl ;

n = Pl

} ;

The grammatical relation between the determiner and the noun is similar to agreement, but due to some differences into which we don't go here it is often called government.

Since the same pattern for determination is used four times in

the FoodsEng grammar, we codify it as an operation,

oper det :

Str -> Number -> {s : Number => Str} -> {s : Str ; n : Number} =

\det,n,kind -> {

s = det ++ kind.s ! n ;

n = n

} ;

Now we can write, for instance,

lin This = det Sg "this" ;

lin These = det Pl "these" ;

Notice the order of arguments that permits partial application (here).

In a more lexicalized grammar, determiners would be made into a category of their own and given an inherent number:

lincat Det = {s : Str ; n : Number} ;

fun Det : Det -> Kind -> Item ;

lin Det det kind = {

s = det.s ++ kind.s ! det.n ;

n = det.n

} ;

Linguistically motivated grammars, such as the GF resource grammars,

usually favour lexicalized treatments of words; see here below.

Notice that the fields of the record in Det are precisely the two

arguments needed in the det operation.

Kinds, as in general common nouns in English, have both singular

and plural forms; what form is chosen is determined by the construction

in which the noun is used. We say that the number is a

parametric feature of nouns. In GF, parametric features

appear as argument types of tables in linearization types.

lincat Kind = {s : Number => Str} ;

Items, as in general noun phrases in English, don't

have variation in number. The number is instead an inherent feature,

which the noun phrase passes to the verb. In GF, inherent features

appear as record fields in linearization types.

lincat Item = {s : Str ; n : Number} ;

A category can have both parametric and inherent features. As we will see

in the Italian Foods grammar, nouns have parametric number and

inherent gender:

lincat Kind = {s : Number => Str ; g : Gender} ;

Nothing prevents the same parameter type from appearing both as parametric and inherent feature, or the appearance of several inherent features of the same type, etc. Determining the linearization types of categories is one of the most crucial steps in the design of a GF grammar. These two conditions must be in balance:

Grammar books and dictionaries give good advice on existence; for instance, an Italian dictionary has entries such as

The distinction between parametric and inherent features can be stated in object-oriented programming terms: a linearization type is like a class, which has a method for linearization and also some attributes. In this class, the parametric features appear as arguments to the linearization method, whereas the inherent features appear as attributes.

For words, inherent features are usually given ad hoc as lexical information. For combinations, they are typically inherited from some part of the construction. For instance, qualified noun constructs in Italian inherit their gender from noun part (called the head of the construction in linguistics):

lin QKind qual kind =

let gen = kind.g in {

s = table {n => kind.s ! n ++ qual.s ! gen ! n} ;

g = gen

} ;

This rule uses a local definition (also known as a let expression) to

avoid computing kind.g twice, and also to express the linguistic

generalization that it is the same gender that is both passed to

the adjective and inherited by the construct.

The parametric number feature is in this rule passed to both the noun and

the adjective. In the table, a variable pattern is used to match

any possible number. Variables introduced in patterns are in scope in

the right-hand sides of corresponding branches. Again, it is good to

use a variable to express the linguistic generalization that the number

is passed to the parts, rather than expand the table into Sg and Pl

branches.

Sometimes the puzzle of making agreement and government work in a grammar has several solutions. For instance, precedence in programming languages can be equivalently described by a parametric or an inherent feature (see here below).

In natural language applications that use the resource grammar library, all parameters are hidden from the user, who thereby does not need to bother about them. The only thing that she has to think about is what linguistic categories are given as linearization types to each semantic category.

For instance, the GF resource grammar library has a category NP of

noun phrases, AP of adjectival phrases, and Cl of sentence-like clauses.

In the implementation of Foods here, we will define

lincat Phrase = Cl ; Item = NP ; Quality = AP ;

To express that an item has a quality, we will use a resource function

mkCl : NP -> AP -> Cl ;

in the linearization rule:

lin Is = mkCl ;

In this way, we have no need to think about parameters and agreement.

the fifth chapter will show a complete implementation of Foods by the

resource grammar, port it to many new languages, and extend it with

many new constructs.

We repeat some of the rules above by showing the entire

module FoodsEng, equipped with parameters. The parameters and

operations are, for the sake of brevity, included in the same module

and not in a separate resource. However, some string operations

from the library Prelude are used.

--# -path=.:prelude

concrete FoodsEng of Foods = open Prelude in {

lincat

S, Quality = SS ;

Kind = {s : Number => Str} ;

Item = {s : Str ; n : Number} ;

lin

Is item quality = ss (item.s ++ copula item.n ++ quality.s) ;

This = det Sg "this" ;

That = det Sg "that" ;

These = det Pl "these" ;

Those = det Pl "those" ;

QKind quality kind = {s = table {n => quality.s ++ kind.s ! n}} ;

Wine = regNoun "wine" ;

Cheese = regNoun "cheese" ;

Fish = noun "fish" "fish" ;

Pizza = regNoun "pizza" ;

Very = prefixSS "very" ;

Fresh = ss "fresh" ;

Warm = ss "warm" ;

Italian = ss "Italian" ;

Expensive = ss "expensive" ;

Delicious = ss "delicious" ;

Boring = ss "boring" ;

param

Number = Sg | Pl ;

oper

det : Number -> Str -> {s : Number => Str} -> {s : Str ; n : Number} =

\n,d,cn -> {

s = d ++ cn.s ! n ;

n = n

} ;

noun : Str -> Str -> {s : Number => Str} =

\man,men -> {s = table {

Sg => man ;

Pl => men

}

} ;

regNoun : Str -> {s : Number => Str} =

\car -> noun car (car + "s") ;

copula : Number -> Str =

\n -> case n of {

Sg => "is" ;

Pl => "are"

} ;

}

To find the Prelude library --- or in general,

GF files located in other directories, a path directive is needed

either on the command line or as the first line of

the topmost file compiled.

The paths in the path list are separated by colons (:), and every item

is interpreted primarily relative to the current directory and, secondarily,

to the value of GF_LIB_PATH (GF library path). Hence it is a

good idea to make GF_LIB_PATH to point into your GF/lib/ whenever

you start working in GF. For instance, in the Bash shell this is done by

% export GF_LIB_PATH=<the location of GF/lib in your file system>

Let us try to extend the English noun paradigms so that we can deal with all nouns, not just the regular ones. The goal is to provide a morphology module that is maximally easy to use when words are added to the lexicon. In fact, we can think of a division of labour where a linguistically trained grammarian writes a morphology and hands it over to the lexicon writer who knows much less about the rules of inflection.

In passing, we will introduce some new GF constructs: local definitions, regular expression patterns, and operation overloading.

To start with, it is useful to perform data abstraction from the type of nouns by writing a constructor operation, a worst-case function:

oper mkNoun : Str -> Str -> Noun = \x,y -> {

s = table {

Sg => x ;

Pl => y

}

} ;

This presupposes that we have defined

oper Noun : Type = {s : Number => Str} ;

Using mkNoun, we can define

lin Mouse = mkNoun "mouse" "mice" ;

and

oper regNoun : Str -> Noun = \x -> mkNoun x (x + "s") ;

instead of writing the inflection tables explicitly.

Nouns like mouse-mice, are so irregular that it hardly makes sense to see them as instances of a paradigm that forms the plural from the singular form. But in general, as we will see, there can be different regular patterns in a language.

The grammar engineering advantage of worst-case functions is that

the author of the resource module may change the definitions of

Noun and mkNoun, and still retain the

interface (i.e. the system of type signatures) that makes it

correct to use these functions in concrete modules. In programming

terms, Noun is then treated as an abstract datatype:

its definition is not available, but only an indirect way of constructing

its objects.

A case where a change of the Noun type could

actually happen is if we introduces case (nominative or

genitive) in the noun inflection:

param Case = Nom | Gen ;

oper Noun : Type = {s : Number => Case => Str} ;

Now we have to redefine the worst-case function

oper mkNoun : Str -> Str -> Noun = \x,y -> {

s = table {

Sg => table {

Nom => x ;

Gen => x + "'s"

} ;

Pl => table {

Nom => y ;

Gen => y + case last y of {

"s" => "'" ;

_ => "'s"

}

}

} ;

But up from this level, we can retain the old definitions

lin Mouse = mkNoun "mouse" "mice" ;

oper regNoun : Str -> Noun = \x -> mkNoun x (x + "s") ;

which will just compute to different values now.

In the last definition of mkNoun, we used a case expression

on the last character of the plural form to decide if the genitive

should be formed with an ' (as in dogs-dogs') or with

's (as in mice-mice's). The expression last y

uses the Prelude operation

last : Str -> Str ;

The case expression uses pattern matching over strings, which is supported in GF, alongside with pattern matching over parameters.

Between the completely regular dog-dogs and the completely irregular mouse-mice, there are some predictable variations:

One way to deal with them would be to provide alternative paradigms:

noun_y : Str -> Noun = \fly -> mkNoun fly (init fly + "ies") ;

noun_s : Str -> Noun = \bus -> mkNoun bus (bus + "es") ;

The Prelude function init drops the last character of a token.

But this solution has some drawbacks:

To help the lexicon builder in this task, the morphology programmer can put some intelligence in the regular noun paradigm. The easiest way to express this in GF is by the use of regular expression patterns:

regNoun : Str -> Noun = \w ->

let

ws : Str = case w of {

_ + ("a" | "e" | "i" | "o") + "o" => w + "s" ; -- bamboo

_ + ("s" | "x" | "sh" | "o") => w + "es" ; -- bus, hero

_ + "z" => w + "zes" ;-- quiz

_ + ("a" | "e" | "o" | "u") + "y" => w + "s" ; -- boy

x + "y" => x + "ies" ;-- fly

_ => w + "s" -- car

}

in

mkNoun w ws

In this definition, we have used a local definition just in order to structure the code, even though there is no multiple evaluation to eliminate. In the case expression itself, we have used

| Q

+ Q

The patterns are ordered in such a way that, for instance,

the suffix "oo" prevents bamboo from matching the suffix

"o".

Exercise. The same rules that form plural nouns in English also

apply in the formation of third-person singular verbs.

Write a regular verb paradigm that uses this idea, but first

rewrite regNoun so that the analysis needed to build s-forms

is factored out as a separate oper, which is shared with

regVerb.

Exercise. Extend the verb paradigms to cover all verb forms in English, with special care taken of variations with the suffix ed (e.g. try-tried, use-used).

Exercise. Implement the German Umlaut operation on word stems. The operation changes the vowel of the stressed stem syllable as follows: a to ä, au to äu, o to ö, and u to ü. You can assume that the operation only takes syllables as arguments. Test the operation to see whether it correctly changes Arzt to Ärzt, Baum to Bäum, Topf to Töpf, and Kuh to Küh.

In the sixth chapter, we will introduce dependent function types, where the value type depends on the argument. For this end, we need a notation that binds a variable to the argument type, as in

switchOff : (k : Kind) -> Action k

Function types without variables are actually a shorthand notation: writing

PredVP : NP -> VP -> S

is shorthand for

PredVP : (x : NP) -> (y : VP) -> S

or any other naming of the variables. Actually the use of variables sometimes shortens the code, since they can share a type:

octuple : (x,y,z,u,v,w,s,t : Str) -> Str

If a bound variable is not used, it can here, as elsewhere in GF, be replaced by a wildcard:

octuple : (_,_,_,_,_,_,_,_ : Str) -> Str

A good practice for functions with many arguments of the same type is to indicate the number of arguments:

octuple : (x1,_,_,_,_,_,_,x8 : Str) -> Str

One can also use heuristic variable names to document what information each argument is expected to provide. This is very handy in the types of inflection paradigms:

mkNoun : (mouse,mice : Str) -> Noun

In grammars intended as libraries, it is useful to separate oparation

definitions from their type signatures. The user is only interested

in the type, whereas the definition is kept for the implementor and

the maintainer. This is possible by using separate oper fragments

for the two parts:

oper regNoun : Str -> Noun ;

oper regNoun s = mkNoun s (s + "s") ;

The type checker combines the two into one oper judgement to see

if the definition matches the type. Notice that, in this syntax, it

is moreover possible to bind the argument variables on the left hand side

instead of using lambda abstration.

In the library module, the type signatures are typically placed in