Purpose

Background

Coverage

Structure

How to use

How to implement a new language

How to extend the API

High-level access to grammatical rules

E.g. "You have k new messages", rendered in any of the ten languages X:

render X (Have (You (Number (k (New Message)))))

Usability for different purposes

Often in NLP, a grammar is just high-level code for a parser.

But writing a grammar can be inadequate for parsing:

Moreover, a grammar fine-tuned for parsing may not be reusable

Linguistic ontology: abstract syntax

E.g. adjectival modification rule

AdjCN : AP -> CN -> CN ;

Rendering in different languages: concrete syntax

AdjCN (PositA even_A) (UseN number_N)

even number, even numbers

jämnt tal, jämna tal

nombre pair, nombres pairs

Abstract away from inflection, agreement, word order.
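To show what "abstracting away" means here, below is a small Python model (not GF code) of how one abstract rule, AdjCN, might be linearized differently per language. The `Noun`/`Adj`/`Concrete` records, the gender labels and the `adj_first` flag are illustrative assumptions, chosen only to reproduce the three example lines above. The real resource grammars also handle definiteness, case, and much more.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass
class Noun:
    forms: Dict[str, str]  # number ("Sg"/"Pl") -> form
    gender: str

@dataclass
class Adj:
    forms: Dict[Tuple[str, str], str]  # (gender, number) -> form

@dataclass
class Concrete:
    adj_first: bool  # word order chosen by this language's AdjCN rule
    even_A: Adj
    number_N: Noun

# Hypothetical per-language "concrete syntax" for the two words involved.
CONCRETE = {
    "Eng": Concrete(True,
                    Adj({("-", "Sg"): "even", ("-", "Pl"): "even"}),
                    Noun({"Sg": "number", "Pl": "numbers"}, "-")),
    "Swe": Concrete(True,
                    Adj({("utr", "Sg"): "jämn", ("neutr", "Sg"): "jämnt",
                         ("utr", "Pl"): "jämna", ("neutr", "Pl"): "jämna"}),
                    Noun({"Sg": "tal", "Pl": "tal"}, "neutr")),
    "Fre": Concrete(False,
                    Adj({("masc", "Sg"): "pair", ("fem", "Sg"): "paire",
                         ("masc", "Pl"): "pairs", ("fem", "Pl"): "paires"}),
                    Noun({"Sg": "nombre", "Pl": "nombres"}, "masc")),
}

def adj_cn(lang: str, number: str) -> str:
    """Linearize the same abstract tree AdjCN even_A number_N in one language."""
    c = CONCRETE[lang]
    noun = c.number_N.forms[number]
    adj = c.even_A.forms[(c.number_N.gender, number)]  # agreement with the noun
    return f"{adj} {noun}" if c.adj_first else f"{noun} {adj}"

for lang in CONCRETE:
    print(", ".join(adj_cn(lang, n) for n in ("Sg", "Pl")))
```

The tree is the same for every language. Only the concrete record changes, and that record is where agreement and word order are decided.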

Resource grammars take a generation perspective rather than a parsing one.

Division of labour: resource grammars hide linguistic details

AdjCN : AP -> CN -> CN hides agreement, word order,...

Presentation: "school grammar" concepts, dictionary-like conventions

bird_N = reg2N "Vogel" "Vögel" masculine
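The dictionary-like convention works because two forms plus a gender usually determine the whole declension. The following is a rough Python model of what a function like reg2N might compute. Its rules are simplifications assumed for illustration, not the library's implementation. For example, it ignores weak masculines such as Junge and the -es genitive of nouns like Haus.

```python
from typing import Dict, Tuple

def reg2n(sg: str, pl: str, gender: str) -> Dict[Tuple[str, str], str]:
    """Hypothetical simplified German declension from nominative sg/pl and gender."""
    forms = {}
    for case in ("Nom", "Acc", "Dat", "Gen"):
        forms[("Sg", case)] = sg
        forms[("Pl", case)] = pl
    if gender in ("masculine", "neuter"):
        # strong masculine/neuter nouns take -s in the genitive singular
        forms[("Sg", "Gen")] = sg + "s"
    if not pl.endswith(("n", "s")):
        # the dative plural adds -n unless the plural already ends in -n or -s
        forms[("Pl", "Dat")] = pl + "n"
    return forms

bird = reg2n("Vogel", "Vögel", "masculine")
print(bird[("Sg", "Gen")], bird[("Pl", "Dat")])  # Vogels Vögeln
```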

API = Application Programmer's Interface

Documentation: gfdoc

IDE = Interactive Development Environment (forthcoming)

Example-based grammar writing

render Ita (parse Eng "you have k messages")

Linguistics

Computer science

2002: v. 0.2

2003: v. 0.6

2005: v. 0.9

2006: v. 1.0

Janna Khegai (Russian modules, forthcoming), Björn Bringert (many Swadesh lexica), Inger Andersson and Therese Söderberg (Spanish morphology), Ludmilla Bogavac (Russian morphology), Carlos Gonzalia (Spanish cardinals), Harald Hammarström (German morphology), Patrik Jansson (Swedish cardinals), Aarne Ranta.

We are also grateful for contributions and comments from several other people who have used this and previous versions of the resource library, including Ana Bove, David Burke, Lauri Carlson, Gloria Casanellas, Karin Cavallin, Hans-Joachim Daniels, Kristofer Johannisson, Anni Laine, Wanjiku Ng'ang'a, and Jordi Saludes.

CLE (Core Language Engine, Book 1992)

Rosetta Machine Translation (Book 1994)

The current GF Resource Project covers ten languages:

Danish

English

Finnish

French

German

Italian

Norwegian (bokmål)

Russian

Spanish

Swedish

In addition, parts of Arabic, Estonian, Latin, and Urdu are covered.

API 1.0 not yet implemented for Danish and Russian

Complete inflection engine

Basic lexicon

It is more important to enable lexicon extensions than to provide a huge lexicon.

Texts: sequences of phrases with punctuation

Phrases: declaratives, questions, imperatives, vocatives

Tense, mood, and polarity: present, past, future, conditional ; simultaneous, anterior ; positive, negative

Questions: yes-no, "wh" ; direct, indirect

Clauses: main, relative, embedded (subject, object, adverbial)

Verb phrases: intransitive, transitive, ditransitive, prepositional

Noun phrases: proper names, pronouns, determiners, possessives, cardinals and ordinals

Coordination: lists of sentences, noun phrases, adverbs, adjectival phrases

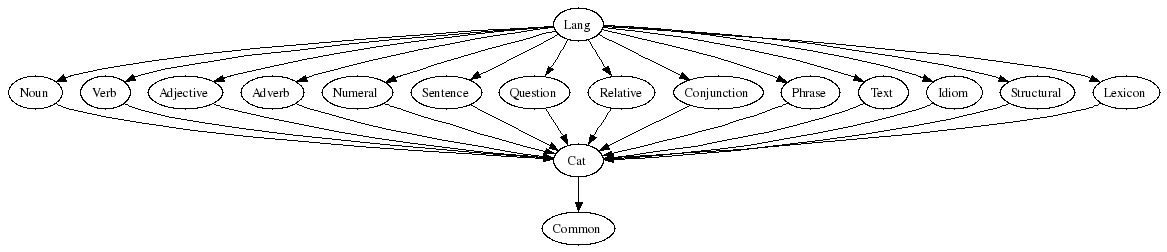

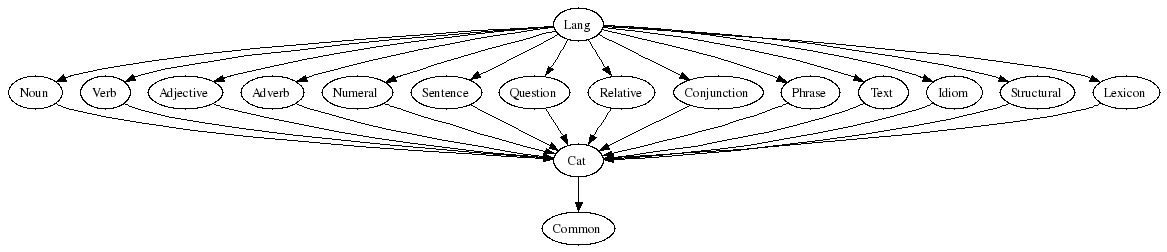

67 categories

150 abstract syntax combination rules

100 structural words

340 content words in a test lexicon

35 kLines of source code (4/3/2006):

abstract 1131

english 2344

german 2386

finnish 3396

norwegian 1257

swedish 1465

scandinavian 1023

french 3246 -- Besch + Irreg + Morpho 2111

italian 7797 -- Besch 6512

spanish 7120 -- Besch 5877

romance 1066

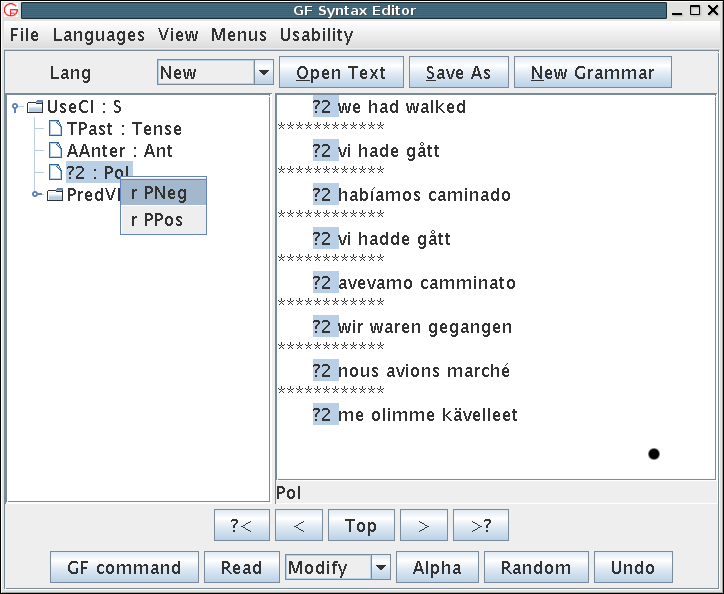

John walks.

    TFullStop                    : Phr -> Text -> Text | TQuestMark, TExclMark
      (PhrUtt                    : PConj -> Utt -> Voc -> Phr | PhrYes, PhrNo, ...
        NoPConj                  | but_PConj, ...
        (UttS                    : S -> Utt | UttQS, UttImp, UttNP, ...
          (UseCl                 : Tense -> Anter -> Pol -> Cl -> S
            TPres
            ASimul
            PPos
            (PredVP              : NP -> VP -> Cl | ImpersNP, ExistNP, ...
              (UsePN             : PN -> NP
                john_PN)
              (UseV              : V -> VP | ComplV2, UseComp, ...
                walk_V))))
        NoVoc)                   | VocNP, please_Voc, ...
      TEmpty

Every language implements these regular patterns that take "dictionary forms" as arguments.

regN : Str -> N

regA : Str -> A

regV : Str -> V

Their usefulness varies. For instance, they are all quite good in Finnish and English. In Swedish, less so:

regN "val" ---> val, valen, valar, valarna

Initializing a lexicon with regX for every entry is usually a good starting point in grammar development.

In Swedish, giving the gender of the noun improves the result a lot:

regGenN "val" neutrum ---> val, valet, val, valen
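A toy Python model of the two functions shows why the gender helps. The rules are a small hypothetical subset of Swedish noun inflection, enough to reproduce the val examples above (utrum val "whale" versus neutrum val "election"). They are not the rules used in ParadigmsSwe. In particular, this toy reg_n simply assumes utrum.

```python
from typing import Tuple

Forms = Tuple[str, str, str, str]  # sg indef, sg def, pl indef, pl def

def reg_gen_n(word: str, gender: str) -> Forms:
    """Hypothetical guesser: dictionary form + gender ("utrum"/"neutrum")."""
    if gender == "neutrum":
        if word.endswith("e"):
            return (word, word + "t", word + "n", word + "na")  # rike, riket, riken, rikena
        return (word, word + "et", word, word + "en")           # val, valet, val, valen
    if word.endswith("a"):
        stem = word[:-1]
        return (word, word + "n", stem + "or", stem + "orna")   # apa, apan, apor, aporna
    return (word, word + "en", word + "ar", word + "arna")      # bil, bilen, bilar, bilarna

def reg_n(word: str) -> Forms:
    """Without gender information, this toy version has to assume utrum."""
    return reg_gen_n(word, "utrum")

print(reg_n("val"))                 # ('val', 'valen', 'valar', 'valarna')
print(reg_gen_n("val", "neutrum"))  # ('val', 'valet', 'val', 'valen')
```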

There are also special constructs taking other forms:

mk2N : (nyckel,nycklar : Str) -> N

mk1N : (bilarna : Str) -> N

irregV : (dricka, drack, druckit : Str) -> V

Regular verbs are actually implemented the Lexin way, with the present-tense form as the argument:

regV : (talar : Str) -> V
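The present-tense form makes a good argument because its ending already reveals the conjugation class. The Python sketch below illustrates that idea. It covers only the -ar and -er classes, under simplified rules assumed for illustration. Real Swedish verbs have more patterns, such as bor/bodde, and exceptions such as tänder/tände.

```python
from typing import Tuple

def reg_v(present: str) -> Tuple[str, str, str]:
    """Hypothetical guesser: (infinitive, past, supine) from the present form."""
    stem = present[:-2]
    if stem and present.endswith("ar"):
        # first conjugation: talar -> tala, talade, talat
        return (stem + "a", stem + "ade", stem + "at")
    if stem and present.endswith("er"):
        # second conjugation: -te after a voiceless consonant, else -de; supine -t
        past = stem + ("te" if stem[-1] in "kpstx" else "de")
        return (stem + "a", past, stem + "t")
    raise ValueError(f"no regular pattern for {present!r}")

print(reg_v("talar"))  # ('tala', 'talade', 'talat')
print(reg_v("lyser"))  # ('lysa', 'lyste', 'lyst')
```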

To cover all situations, worst-case paradigms are given. E.g. in Swedish:

mkN : (apa,apan,apor,aporna : Str) -> N

mkA : (liten, litet, lilla, små, mindre, minst, minsta : Str) -> A

mkV : (supa,super,sup,söp,supit,supen : Str) -> V

Irregular words are collected in IrregX, e.g. in Swedish:

    draga_V : V =
      mkV
        (variants { "dra" ; "draga"})
        (variants { "drar" ; "drager"})
        (variants { "dra" ; "drag" })
        "drog"
        "dragit"
        "dragen" ;

Goal: eliminate the user's need for worst-case functions.
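One conceivable way to pursue this goal is a single entry point that chooses the paradigm from the number of forms supplied. It falls back on the worst case only when all forms are given. The sketch below is a hypothetical Python version for Swedish nouns. The name mk_n and its guessing rules are assumptions for illustration, not the resource library's API.

```python
from typing import Tuple

Forms = Tuple[str, str, str, str]  # sg indef, sg def, pl indef, pl def

def _sg_def(sg: str) -> str:
    """Singular definite: -n after a vowel or -el/-er, otherwise -en (simplified)."""
    if sg[-1] in "aeiouyåäö" or sg.endswith(("el", "er")):
        return sg + "n"
    return sg + "en"

def mk_n(*forms: str) -> Forms:
    """Hypothetical overloaded noun constructor taking 1, 2 or 4 forms."""
    if len(forms) == 4:  # worst case: all forms given explicitly
        return (forms[0], forms[1], forms[2], forms[3])
    if len(forms) == 2:  # like mk2N: singular and plural indefinite
        sg, pl = forms
        return (sg, _sg_def(sg), pl, pl + "na")
    if len(forms) == 1:  # like regN: guess a regular -ar plural
        sg = forms[0]
        return (sg, _sg_def(sg), sg + "ar", sg + "arna")
    raise ValueError("expected 1, 2 or 4 forms")

print(mk_n("bil"))                            # ('bil', 'bilen', 'bilar', 'bilarna')
print(mk_n("nyckel", "nycklar"))              # ('nyckel', 'nyckeln', 'nycklar', 'nycklarna')
print(mk_n("apa", "apan", "apor", "aporna"))  # ('apa', 'apan', 'apor', 'aporna')
```

With a single form, nyckel would get the wrong plural nyckelar. Adding the plural is enough to fix it, and all four forms are needed only for truly irregular words.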

Syntactic structures that are not shared by all languages.

Alternative (and often more idiomatic) ways to say what is already covered by the API.

Not implemented yet.

Candidates:

bilen min

est-ce que tu dors ?

Mathematical

Multimodal

Present

Minimal

Shallow

It is a good idea to compile the library, so that it can be opened faster

GF/lib/resource-1.0% make

This writes GF/lib/alltenses, GF/lib/present, and GF/lib/resource-1.0/langs.gfcm.

If you don't intend to change the library, you never need to process the source files again. Just run one of the following:

gf -nocf langs.gfcm -- all 8 languages

gf -nocf -path=alltenses:prelude alltenses/LangSwe.gfc -- Swedish only

gf -nocf -path=present:prelude present/LangSwe.gfc -- Swedish in present tense only

The default parser does not work! (It is obsolete anyway.)

The MCFG parser (the new standard) works in theory, but can in practice be too slow to build.

But it does work for some languages, after waiting approximately 20 seconds:

p -mcfg -lang=LangEng -cat=S "I would see her"

p -mcfg -lang=LangSwe -cat=S "jag skulle se henne"

Parsing in present/ versions is quicker.

Remedies:

Multilingual treebank entry = tree + linearizations

Some examples of treebank generation, assuming langs.gfcm has been loaded:

gr -cat=S -number=10 -cf | tb -- 10 random S

gt -cat=Phr -depth=4 | tb -xml | wf ex.xml -- all Phr to depth 4, into file ex.xml

Regression testing

rf ex.xml | tb -c -- read treebank from file and compare to present grammars

Updating a treebank

rf old.xml | tb -trees | tb -xml | wf new.xml -- read old from file, write new to file
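Regression testing compares each stored linearization with what the current grammar produces for the same tree, and updating keeps the trees but regenerates the strings. Both ideas are sketched below in Python. The in-memory treebank layout and the `current_grammar` stand-in are assumptions replacing the XML file and the GF grammar. They do not reflect GF's actual format. The changed Swedish output is hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Treebank = Dict[str, Dict[str, str]]  # tree -> {language: linearization}
Linearize = Callable[[str, str], str]  # (language, tree) -> string

def regression(tb: Treebank, linearize: Linearize) -> List[Tuple[str, str, str, str]]:
    """Return (tree, lang, stored, current) for every linearization that changed."""
    diffs = []
    for tree, lins in tb.items():
        for lang, stored in lins.items():
            current = linearize(lang, tree)
            if current != stored:
                diffs.append((tree, lang, stored, current))
    return diffs

def update(tb: Treebank, linearize: Linearize) -> Treebank:
    """Keep the trees, regenerate every linearization with the current grammar."""
    return {tree: {lang: linearize(lang, tree) for lang in lins}
            for tree, lins in tb.items()}

old = {"PredVP (UsePron they_Pron) (PassV2 seek_V2)":
       {"Eng": "They are sought", "Swe": "De blir sökta"}}

def current_grammar(lang: str, tree: str) -> str:
    """Stand-in grammar whose Swedish output is assumed to have changed."""
    return {"Eng": "They are sought", "Swe": "De söks"}[lang]

print(regression(old, current_grammar))  # reports the changed Swedish line
```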

Tree + linearizations

> gr -cat=Cl | tb

PredVP (UsePron they_Pron) (PassV2 seek_V2)

They are sought

Elles sont cherchées

Son buscadas

Vengono cercate

De blir sökta

De blir lette

Sie werden gesucht

Heidät etsitään

These can also be wrapped in XML tags (tb -xml)

Brute-force method that helps if real parsing is more expensive.

make treebank -- make treebank with all languages

gf -treebank langs.xml -- start GF by reading the treebank

> ut -strings -treebank=LangIta -- show all Ita strings

> ut -treebank=LangIta -raw "Quello non si romperebbe" -- look up a string

> i -nocf langs.gfcm -- read grammar to be able to linearize

> ut -treebank=LangIta "Quello non si romperebbe" | l -multi -- translate to all
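The brute-force idea can be sketched in Python. Invert the treebank into a map from strings to trees, so that "parsing" becomes a dictionary lookup. Translation is then lookup followed by reading off the other linearizations of each tree. The data layout is an assumption for illustration, and the example entry is taken from the treebank output above.

```python
from collections import defaultdict
from typing import Dict, List, Set

Treebank = Dict[str, Dict[str, str]]  # tree -> {language: linearization}

def index(tb: Treebank, lang: str) -> Dict[str, Set[str]]:
    """Map every string of one language to the set of trees that produce it."""
    idx: Dict[str, Set[str]] = defaultdict(set)
    for tree, lins in tb.items():
        if lang in lins:
            idx[lins[lang]].add(tree)
    return idx

def translate(tb: Treebank, lang: str, s: str) -> List[Dict[str, str]]:
    """'Parse' s by lookup, then return all linearizations of each matching tree."""
    # A real tool would build the index once, not on every call.
    return [tb[tree] for tree in sorted(index(tb, lang).get(s, set()))]

tb = {"PredVP (UsePron they_Pron) (PassV2 seek_V2)":
      {"Eng": "They are sought", "Fre": "Elles sont cherchées",
       "Ita": "Vengono cercate"}}
print(translate(tb, "Ita", "Vengono cercate"))
```

Lookup costs almost nothing once the index exists. The price is that only strings already in the treebank can be "parsed".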

Use morphological analyser

gf -nocf -retain -path=alltenses:prelude alltenses/LangSwe.gf

> ma "jag kan inte höra vad du säger"
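A morphological analyser can be thought of as the inflection tables turned inside out: every form points back to its lemma and its inflectional features. The Python sketch below uses a two-verb Swedish lexicon. Its entries and tag names are illustrative assumptions and do not reproduce GF's output format.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# lemma -> {feature tag: form}; a tiny hand-written lexicon for illustration
LEXICON: Dict[str, Dict[str, str]] = {
    "höra_V": {"Inf": "höra", "Pres": "hör", "Past": "hörde", "Sup": "hört"},
    "säga_V": {"Inf": "säga", "Pres": "säger", "Past": "sade", "Sup": "sagt"},
}

def build_analyser(lex: Dict[str, Dict[str, str]]) -> Dict[str, List[Tuple[str, str]]]:
    """Invert the tables: form -> list of (lemma, tag)."""
    table: Dict[str, List[Tuple[str, str]]] = defaultdict(list)
    for lemma, forms in lex.items():
        for tag, form in forms.items():
            table[form].append((lemma, tag))
    return table

def analyse(sentence: str) -> List[Tuple[str, List[Tuple[str, str]]]]:
    """Analyse each word; unknown words get an empty list."""
    table = build_analyser(LEXICON)
    return [(w, table.get(w, [])) for w in sentence.split()]

for word, analyses in analyse("jag kan inte höra vad du säger"):
    print(word, analyses)
```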

Try out a morphology quiz

> mq -cat=V

Try out inflection patterns

gf -retain -path=alltenses:prelude alltenses/ParadigmsSwe.gfr

> cc regV "lyser"

The simplest way to start editing with all grammars is

gfeditor langs.gfcm

The forthcoming IDE will extend the syntax editor with a Paradigms file browser and a way to check which parts of an application grammar remain to be implemented.

Get rid of discontinuous constituents (in particular, VP)

Example: mathematical/Predication:

predV2 : V2 -> NP -> NP -> Cl

instead of PredVP np (ComplV2 v2 np')

The application grammar is implemented with reference to the resource API

Individual languages are instantiations

Example: tram

Instead of parametrized modules:

select resource functions differently for different languages

Example: imperative vs. infinitive in mathematical exercises

Lexicon in language-dependent modules

Combination rules in a parametrized module

Example: animal

    --# -resource=present/LangEng.gf
    --# -path=.:present:prelude

    -- to compile: gf -examples QuestionsI.gfe

    incomplete concrete QuestionsI of Questions = open Lang in {
      lincat
        Phrase = Phr ;
        Entity = N ;
        Action = V2 ;
      lin
        Who love_V2 man_N = in Phr "who loves men" ;
        Whom man_N love_V2 = in Phr "whom does the man love" ;
        Answer woman_N love_V2 man_N = in Phr "the woman loves men" ;
    }
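An incomplete concrete module like QuestionsI plays the role of a functor. It is written once against Lang, and each language obtains its own version by supplying its own Lang. A rough Python analogy follows, in which a "resource" is an object with a fixed interface and the functor is a function from resources to linearization rules. The method names and the two-language mini-lexicon are hypothetical.

```python
from typing import Callable, Dict, Protocol

class Resource(Protocol):
    """Hypothetical miniature API that every language implements."""
    def plural(self, n: str) -> str: ...
    def who_question(self, v2: str, obj: str) -> str: ...

class Eng:
    lex: Dict[str, str] = {"man_N": "men", "love_V2": "loves"}
    def plural(self, n: str) -> str:
        return self.lex[n]
    def who_question(self, v2: str, obj: str) -> str:
        return f"who {self.lex[v2]} {obj}"

class Swe:
    lex: Dict[str, str] = {"man_N": "män", "love_V2": "älskar"}
    def plural(self, n: str) -> str:
        return self.lex[n]
    def who_question(self, v2: str, obj: str) -> str:
        return f"vem {self.lex[v2]} {obj}"

def questions(lang: Resource) -> Callable[[str, str], str]:
    """The 'functor': one definition of Who, parameterized by the resource."""
    def who(action: str, entity: str) -> str:
        return lang.who_question(action, lang.plural(entity))
    return who

for res in (Eng(), Swe()):
    print(questions(res)("love_V2", "man_N"))  # who loves men / vem älskar män
```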

See Resource-HOWTO

Write a concrete syntax module for each abstract module in the API

Write a Paradigms module

Examples: English, Finnish, German, Russian

Examples: Romance (French, Italian, Spanish), Scandinavian (Danish, Norwegian, Swedish)

Write a Diff interface for a family of languages

Write concrete syntaxes as functors opening the interface

Write separate Paradigms modules for each language

Advantages:

Problems:

Everything else is a variation of this:

    cat
      Cl ;   -- clause
      VP ;   -- verb phrase
      V2 ;   -- two-place verb
      NP ;   -- noun phrase
      CN ;   -- common noun
      Det ;  -- determiner
      AP ;   -- adjectival phrase

    fun
      PredVP  : NP -> VP -> Cl ;   -- predication
      ComplV2 : V2 -> NP -> VP ;   -- complementization
      DetCN   : Det -> CN -> NP ;  -- determination
      ModCN   : AP -> CN -> CN ;   -- modification

This toy Latin grammar shows in a nutshell how the core can be implemented.

    param
      Number   = Sg | Pl ;
      Person   = P1 | P2 | P3 ;
      Tense    = Pres | Past ;
      Polarity = Pos | Neg ;
      Case     = Nom | Acc | Dat ;
      Gender   = Masc | Fem | Neutr ;

    oper
      Agr = {g : Gender ; n : Number ; p : Person} ;  -- agreement features

    lincat
      Cl = {
        s : Tense => Polarity => Str
        } ;
      VP = {
        verb  : Tense => Polarity => Agr => Str ;  -- finite verb
        neg   : Polarity => Str ;                  -- negation
        compl : Agr => Str                         -- complement
        } ;
      V2 = {
        s : Tense => Number => Person => Str ;
        c : Case                                   -- complement case
        } ;
      NP = {
        s : Case => Str ;
        a : Agr                                    -- agreement features
        } ;
      CN = {
        s : Number => Case => Str ;
        g : Gender
        } ;
      Det = {
        s : Gender => Case => Str ;
        n : Number
        } ;
      AP = {
        s : Gender => Number => Case => Str
        } ;

    lin
      PredVP np vp = {
        s = \\t,p =>
          let
            agr     = np.a ;
            subject = np.s ! Nom ;
            object  = vp.compl ! agr ;
            verb    = vp.neg ! p ++ vp.verb ! t ! p ! agr
          in
            subject ++ object ++ verb
        } ;

      ComplV2 v np = {
        verb  = \\t,p,a => v.s ! t ! a.n ! a.p ;
        compl = \\_ => np.s ! v.c ;
        neg   = table {Pos => [] ; Neg => "non"}
        } ;

      DetCN det cn =
        let
          g = cn.g ;
          n = det.n
        in {
          s = \\c => det.s ! g ! c ++ cn.s ! n ! c ;
          a = {g = g ; n = n ; p = P3}
          } ;

      ModCN ap cn =
        let
          g = cn.g
        in {
          s = \\n,c => cn.s ! n ! c ++ ap.s ! g ! n ! c ;
          g = g
          } ;
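To trace how the agreement features flow through these rules, here is a direct Python transliteration of the toy grammar. Tables become dictionaries, `\\` abstractions become lambdas, and `++` becomes a join that drops empty strings. The grammar above defines no words, so the lexicon (amare, servus, femina, bonus, multi, and an empty singular determiner) is a hypothetical addition.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Tuple

NUMBERS, CASES = ("Sg", "Pl"), ("Nom", "Acc", "Dat")
GENDERS, PERSONS, TENSES = ("Masc", "Fem", "Neutr"), ("P1", "P2", "P3"), ("Pres", "Past")

@dataclass(frozen=True)
class Agr:
    g: str
    n: str
    p: str

def cat(*parts: str) -> str:
    """GF's ++, treating [] (the empty string) as no token."""
    return " ".join(x for x in parts if x)

def table(keys, forms: str) -> Dict:
    """Build an inflection table from keys and space-separated forms."""
    words = forms.split()
    assert len(words) == len(keys)
    return dict(zip(keys, words))

@dataclass
class V2:
    s: Dict[Tuple[str, str, str], str]  # (tense, number, person) -> form
    c: str                              # complement case

@dataclass
class NP:
    s: Dict[str, str]  # case -> form
    a: Agr

@dataclass
class CN:
    s: Dict[Tuple[str, str], str]  # (number, case) -> form
    g: str

@dataclass
class Det:
    s: Dict[Tuple[str, str], str]  # (gender, case) -> form
    n: str

@dataclass
class AP:
    s: Dict[Tuple[str, str, str], str]  # (gender, number, case) -> form

@dataclass
class VP:
    verb: Callable[[str, str, Agr], str]
    neg: Callable[[str], str]
    compl: Callable[[Agr], str]

def pred_vp(np: NP, vp: VP) -> Callable[[str, str], str]:
    # Cl.s : Tense => Polarity => Str, in subject-object-verb order
    return lambda t, p: cat(np.s["Nom"], vp.compl(np.a), vp.neg(p), vp.verb(t, p, np.a))

def compl_v2(v: V2, np: NP) -> VP:
    return VP(verb=lambda t, p, a: v.s[(t, a.n, a.p)],
              neg=lambda p: "non" if p == "Neg" else "",
              compl=lambda _a: np.s[v.c])

def det_cn(det: Det, cn: CN) -> NP:
    g, n = cn.g, det.n
    return NP({c: cat(det.s[(g, c)], cn.s[(n, c)]) for c in CASES}, Agr(g, n, "P3"))

def mod_cn(ap: AP, cn: CN) -> CN:
    return CN({(n, c): cat(cn.s[(n, c)], ap.s[(cn.g, n, c)])
               for n in NUMBERS for c in CASES}, cn.g)

# Hypothetical toy lexicon
NC = [(n, c) for n in NUMBERS for c in CASES]
amare = V2(table([(t, n, p) for t in TENSES for n in NUMBERS for p in PERSONS],
                 "amo amas amat amamus amatis amant "
                 "amavi amavisti amavit amavimus amavistis amaverunt"), "Acc")
servus = CN(table(NC, "servus servum servo servi servos servis"), "Masc")
femina = CN(table(NC, "femina feminam feminae feminae feminas feminis"), "Fem")
bonus = AP(table([(g, n, c) for g in GENDERS for n in NUMBERS for c in CASES],
                 "bonus bonum bono boni bonos bonis bona bonam bonae bonae bonas bonis "
                 "bonum bonum bono bona bona bonis"))
multi = Det(table([(g, c) for g in GENDERS for c in CASES],
                  "multi multos multis multae multas multis multa multa multis"), "Pl")
a_Det = Det({(g, c): "" for g in GENDERS for c in CASES}, "Sg")  # Latin has no article

cl = pred_vp(det_cn(multi, mod_cn(bonus, servus)), compl_v2(amare, det_cn(a_Det, femina)))
print(cl("Pres", "Pos"))  # multi servi boni feminam amant
print(cl("Past", "Neg"))  # multi servi boni feminam non amaverunt
```

The plural masculine features chosen in det_cn reach both the adjective (boni) and the verb (amant, amaverunt). The object's case comes from the verb's complement case c.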

LangX all the time

Extend old modules or add a new one?

Usually better to start a new one: then you don't have to implement it for all languages at once.

Exception: if you are working with a language-specific API extension, you can work directly in that module.