Introduction

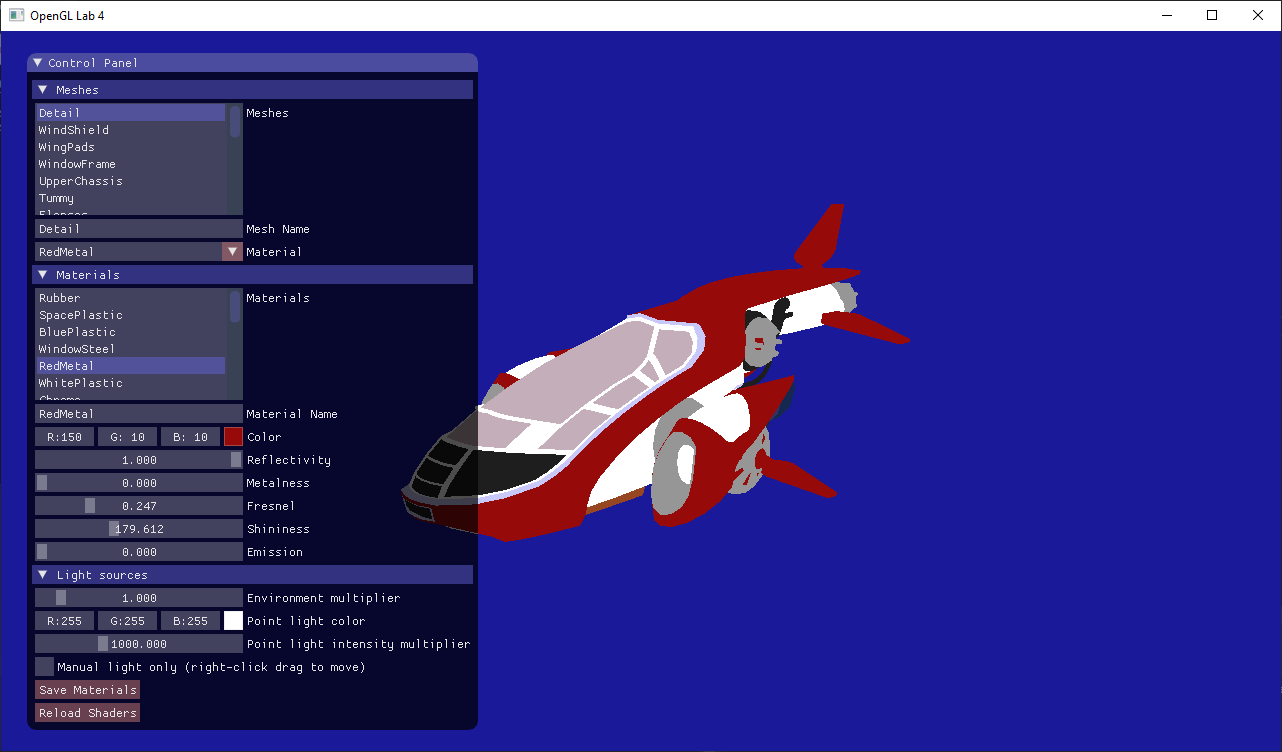

In this tutorial, we will learn to write a physically based shader. Compile and run the project, and you will see that we have supplied some starting code for you:

The code reads an .obj file with an associated material (.mtl) file. We have also added a GUI (in the function gui()) where you can change the properties of the materials. Currently, the only property that your shader respects is the color attribute. Go through the lab4_main.cpp file and make sure you understand what's going on. There shouldn't be anything really new to you here. Ask an assistant if some part confuses you.

Understand the GUI

In this tutorial, you can press the "reload shaders" button to recompile your shaders without restarting your program.

Part 1: Direct illumination

///////////////////////////////////////////////////////////////////////////////

// Material

///////////////////////////////////////////////////////////////////////////////

uniform vec3 material_color;

uniform float material_reflectivity;

uniform float material_metalness;

uniform float material_fresnel;

uniform float material_shinyness;

uniform float material_emission;

Now look at the main() function. So far it does not do very much, and it's up to you to fill it in. Let's start with calculating a proper diffuse reflection.

Diffuse term.

Before we can call calculateDirectIllumination(), we need to figure out its input parameters.

///////////////////////////////////////////////////////////////////////////

// Task 1.1 - Fill in the outgoing direction, wo, and the normal, n. Both

// shall be normalized vectors in view-space.

///////////////////////////////////////////////////////////////////////////

vec3 wo = vec3(0.0);

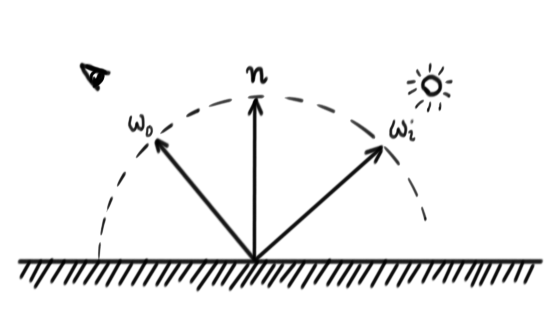

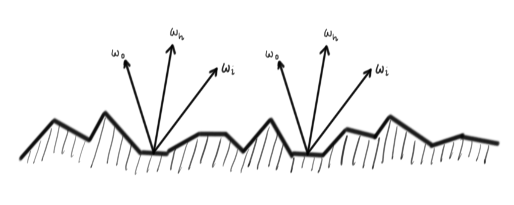

vec3 n = vec3(0.0);

Later in the course, you will learn about the BRDF (Bidirectional Reflectance Distribution Function), but we will use the term already in this lab. The BRDF is a function that says how much of the incoming radiance (light) from a specific direction $\omega_i$ is reflected in some outgoing direction $\omega_o$.

In calculateDirectIllumination(), we first need to calculate the incoming radiance from the light:

$L_i = $ point_light_intensity_multiplier * point_light_color * $\frac{1}{d^2}$

where $d$ is the distance from the fragment to the light source.

We divide the color of the material by $\pi$ to get the diffuse BRDF.

We multiply the incoming radiance by $(n\cdot \omega_i)$ to get the incoming irradiance.

diffuse_term = material_color * $\frac{1.0}{\pi}$ * $|n \cdot \omega_i|$ * $L_i$
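The two formulas above can be checked outside the shader with a small sketch. It is written in plain C++ rather than GLSL so it runs on its own; the `Vec3` struct and the function names `incomingRadiance` and `diffuseTerm` are hypothetical helpers for illustration, not part of the lab code.

```cpp
#include <cmath>

// Minimal stand-in for GLSL's vec3 (illustration only).
struct Vec3 { float x, y, z; };

inline Vec3 operator*(const Vec3& a, const Vec3& b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
inline Vec3 operator*(const Vec3& a, float s) { return {a.x * s, a.y * s, a.z * s}; }
inline float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

const float kPi = 3.14159265358979f;

// Incoming radiance from a point light at distance d (inverse-square falloff).
Vec3 incomingRadiance(const Vec3& lightColor, float intensityMultiplier, float d)
{
    return lightColor * (intensityMultiplier / (d * d));
}

// Diffuse term: material_color * (1 / pi) * |n . wi| * Li.
// n and wi are assumed to be normalized.
Vec3 diffuseTerm(const Vec3& materialColor, const Vec3& n, const Vec3& wi, const Vec3& Li)
{
    float cosTheta = std::fabs(dot(n, wi));
    return materialColor * (1.0f / kPi) * cosTheta * Li;
}
```

In the shader the same arithmetic maps directly onto GLSL's built-in `dot()` and `vec3` operators.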

The emission term is material_emission * material_color.

Change Scene.

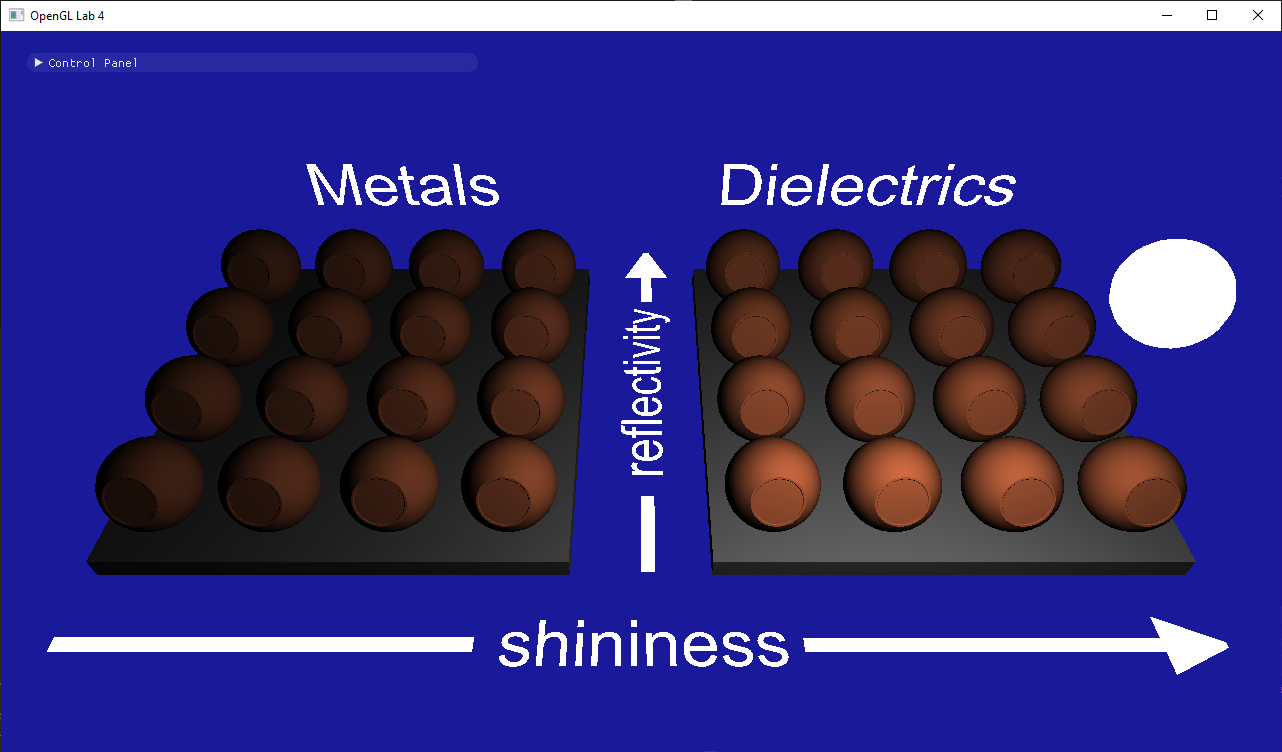

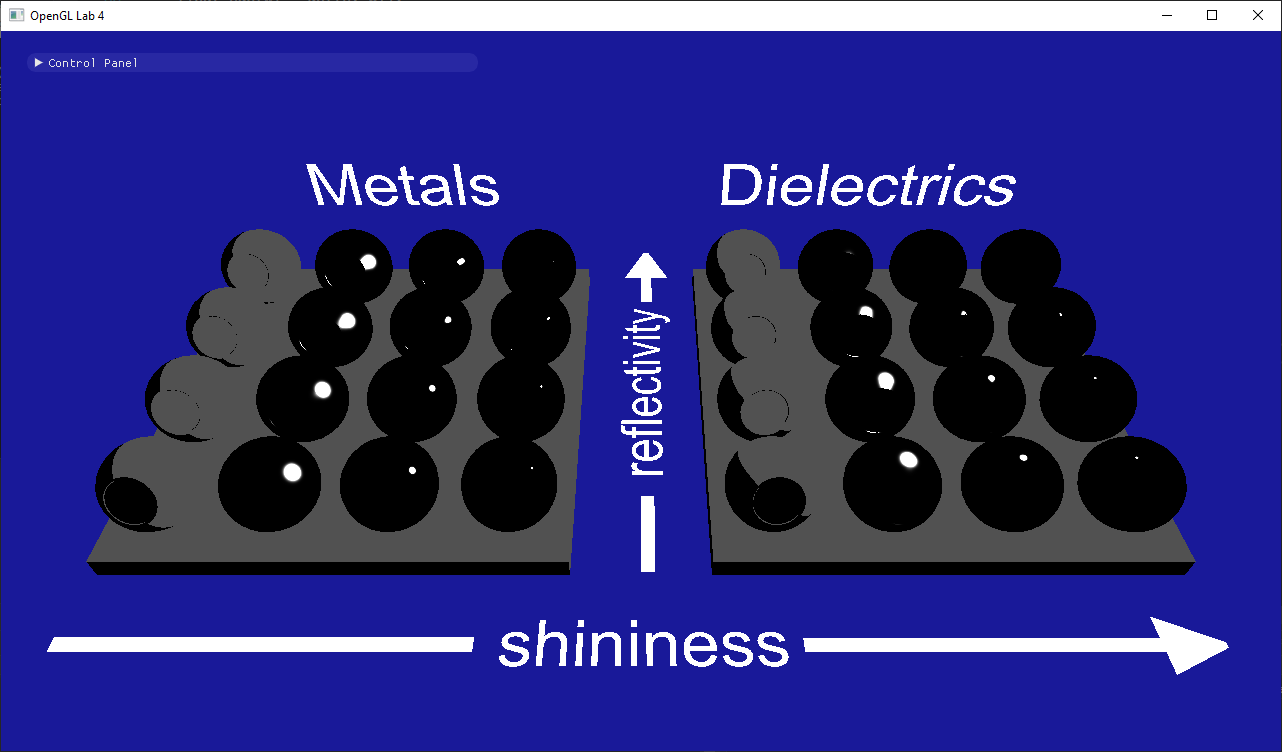

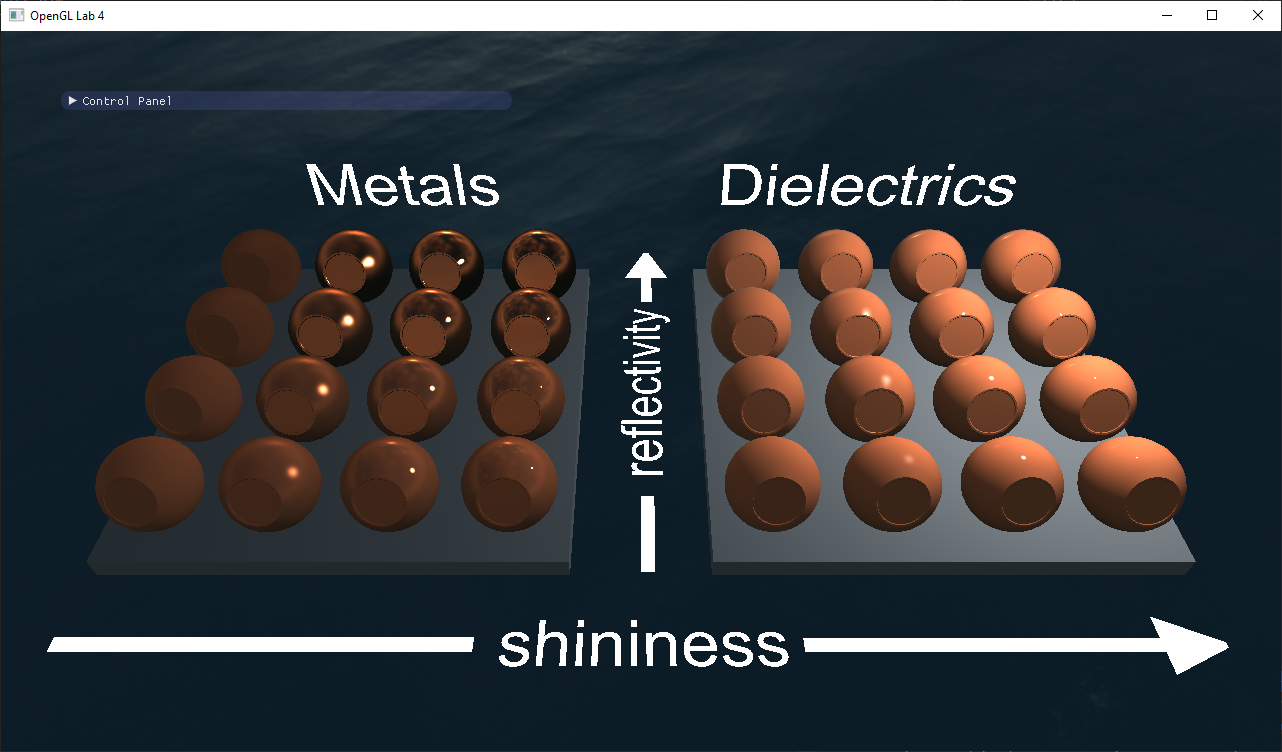

In lab4_main.cpp, find where the NewShip.obj model is chosen, and choose the materialtest.obj scene instead. Run the program again:

// MaterialTest ///////////////////////////////////////////////////////////////

vec3 cameraPosition(0.0f, 30.0f, 30.0f);

vec3 cameraDirection = normalize(vec3(0.0f) - cameraPosition);

vec3 worldUp(0.0f, 1.0f, 0.0f);

const std::string model_filename = "../scenes/materialtest.obj";

///////////////////////////////////////////////////////////////////////////////

// NewShip ////////////////////////////////////////////////////////////////////

//vec3 cameraPosition(-30.0f, 10.0f, 30.0f);

//vec3 cameraDirection = normalize(vec3(0.0f) - cameraPosition);

//vec3 worldUp(0.0f, 1.0f, 0.0f);

//const std::string model_filename = "../scenes/NewShip.obj";

///////////////////////////////////////////////////////////////////////////////

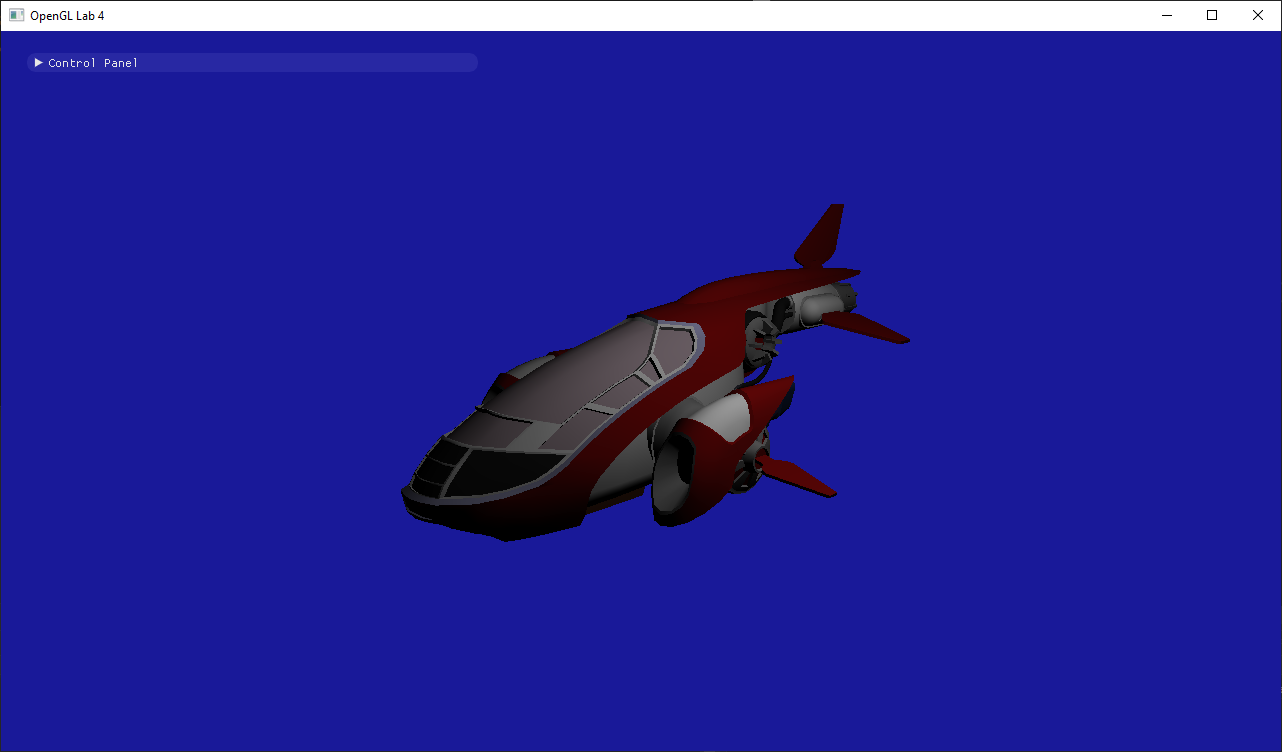

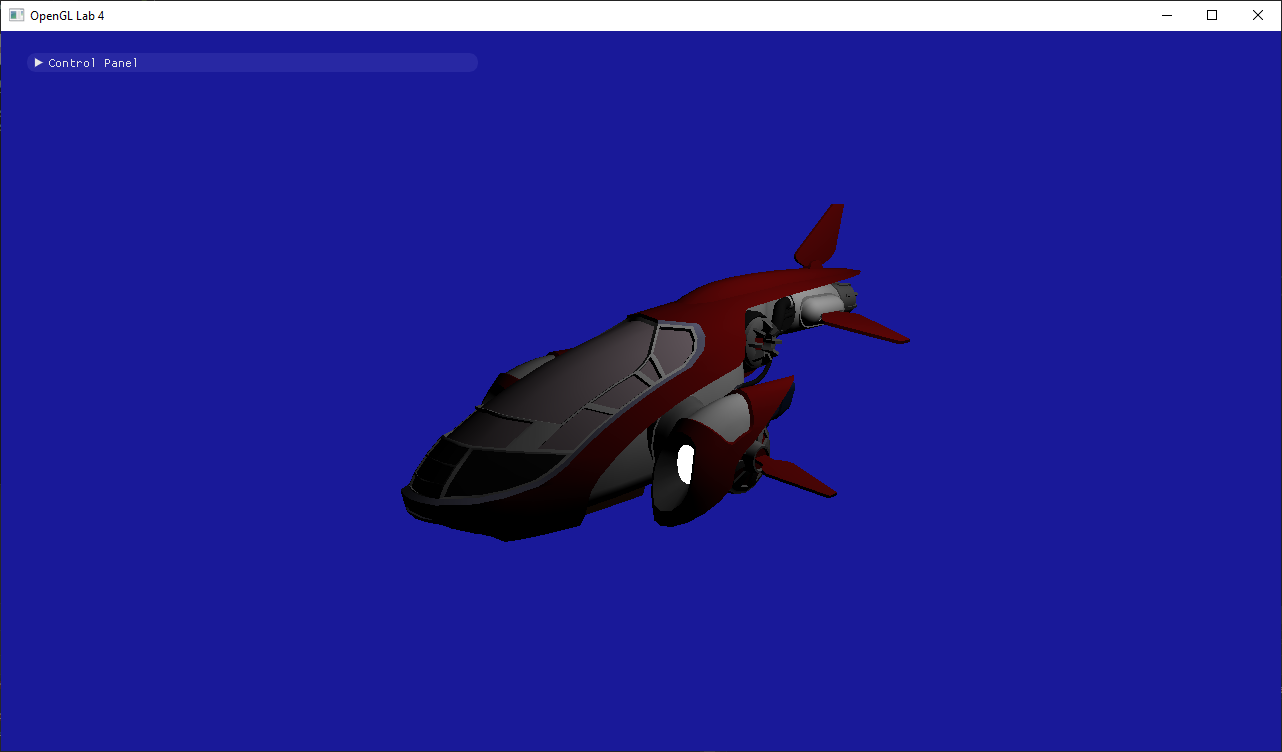

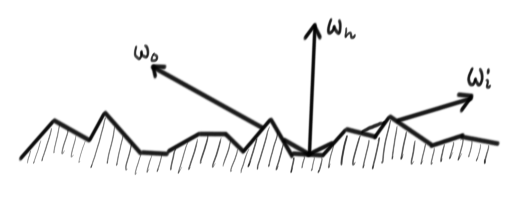

Microfacet BRDF

brdf = $ \frac{F(\omega_i) D(\omega_h) G(\omega_i, \omega_o)}{4(n \cdot \omega_o)(n \cdot \omega_i)} $

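The Fresnel term $F(\omega_i)$ is commonly computed with Schlick's approximation, $F(\omega_i) = R_0 + (1 - R_0)(1 - \omega_h \cdot \omega_i)^5$, where $R_0$ (the material_fresnel uniform in your shader) is the amount of reflection when looking straight at a surface.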

Only the microfacets whose normal is $\omega_h$ will reflect in direction $\omega_o$. $D(\omega_h)$ gives us the density of such facets.

$\omega_h = $ normalize($\omega_i + \omega_o$)

$s = $ material_shininess

$D(\omega_h) = \frac{(s + 2)}{2\pi} (n \cdot \omega_h)^s$

When $\omega_o$ or $\omega_i$ are at grazing angles, radiance might be blocked by other microfacets. This is what $G(\omega_i,\omega_o)$ models.

$G(\omega_i, \omega_o) = $ min(1, min($2\frac{(n \cdot \omega_h)(n \cdot \omega_o)}{\omega_o \cdot \omega_h}, 2\frac{(n \cdot \omega_h)(n \cdot \omega_i)}{\omega_o \cdot \omega_h}$))

return brdf * dot(n, wi) * Li;
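To see how the three factors fit together, here is a rough C++ sketch of the BRDF as a scalar function. It assumes Schlick's approximation for the Fresnel term, $F = R_0 + (1 - R_0)(1 - \omega_h \cdot \omega_i)^5$, and uses a hypothetical `Vec3` struct and function name `microfacetBrdf`; neither comes from the lab code. It does no NaN guarding, so it is only valid when $n \cdot \omega_i$ and $n \cdot \omega_o$ are positive.

```cpp
#include <algorithm>
#include <cmath>

// Minimal stand-in for GLSL's vec3 (illustration only).
struct Vec3 { float x, y, z; };

inline Vec3 operator+(const Vec3& a, const Vec3& b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
inline float dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize(const Vec3& v)
{
    float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// Scalar microfacet BRDF for unit vectors n, wi, wo.
// R0 plays the role of material_fresnel, s of material_shininess.
// Assumes dot(n, wi) > 0 and dot(n, wo) > 0; no NaN guarding is done here.
float microfacetBrdf(const Vec3& n, const Vec3& wi, const Vec3& wo, float R0, float s)
{
    const float pi = 3.14159265358979f;
    Vec3 wh = normalize(wi + wo); // half vector

    float ndotwh = dot(n, wh);
    float ndotwo = dot(n, wo);
    float ndotwi = dot(n, wi);
    float wodotwh = dot(wo, wh);
    float whdotwi = dot(wh, wi);

    // Fresnel term (Schlick's approximation, assumed here).
    float F = R0 + (1.0f - R0) * std::pow(1.0f - whdotwi, 5.0f);
    // Microfacet distribution (normalized Blinn-Phong lobe).
    float D = (s + 2.0f) / (2.0f * pi) * std::pow(ndotwh, s);
    // Shadowing/masking term.
    float G = std::min(1.0f, std::min(2.0f * ndotwh * ndotwo / wodotwh,
                                      2.0f * ndotwh * ndotwi / wodotwh));

    return F * D * G / (4.0f * ndotwo * ndotwi);
}
```

Looking straight down the normal ($n = \omega_i = \omega_o$), the Fresnel term reduces to $R_0$ and $G$ to 1, which makes a convenient sanity check.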

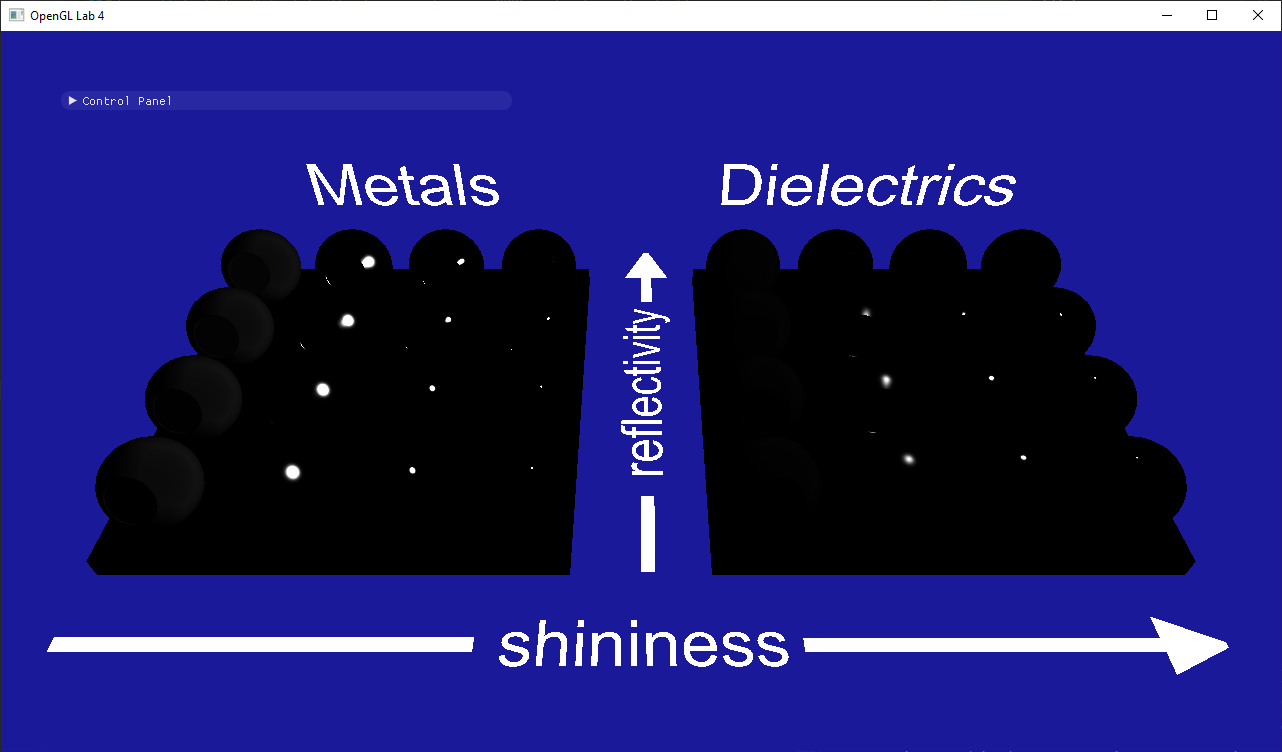

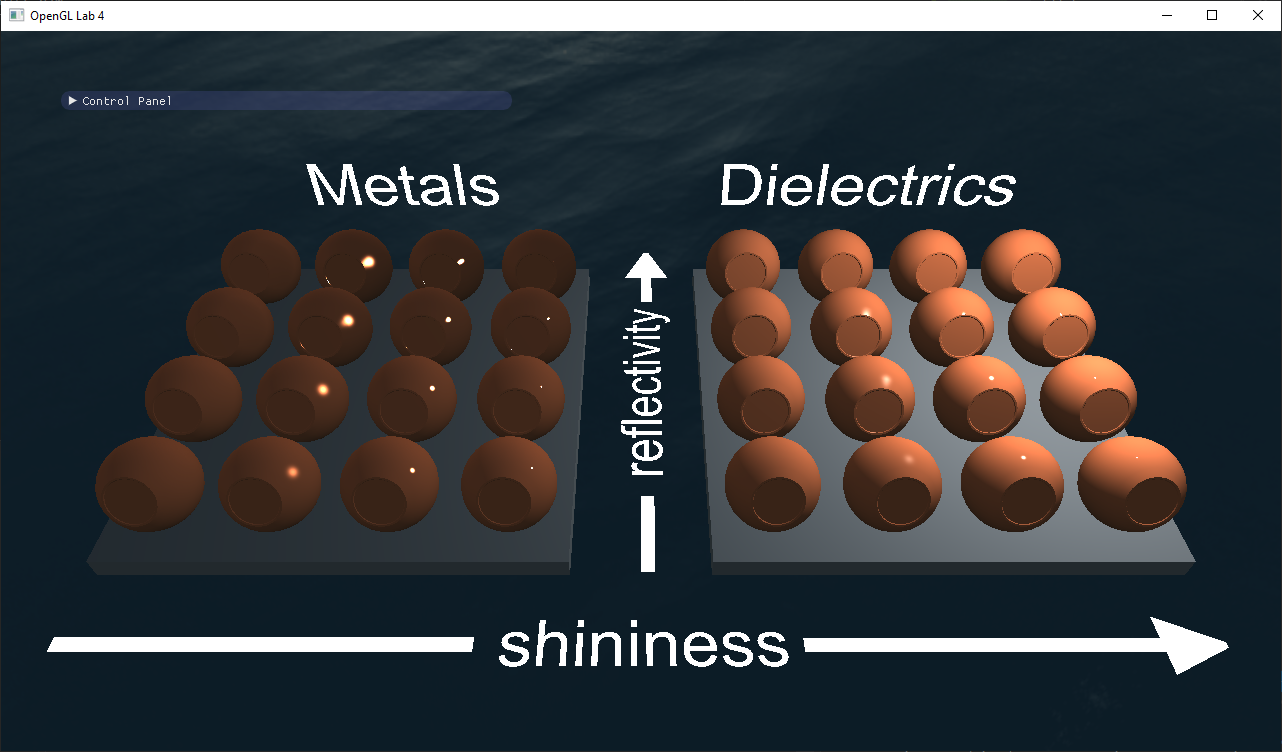

- Why are there no colors?

- Why are the metals with shininess 0 grayish while the dielectrics are black?

Set lightManualOnly=true in lab4_main.cpp so that the light is stopped in the right place from the start.

|

|

|

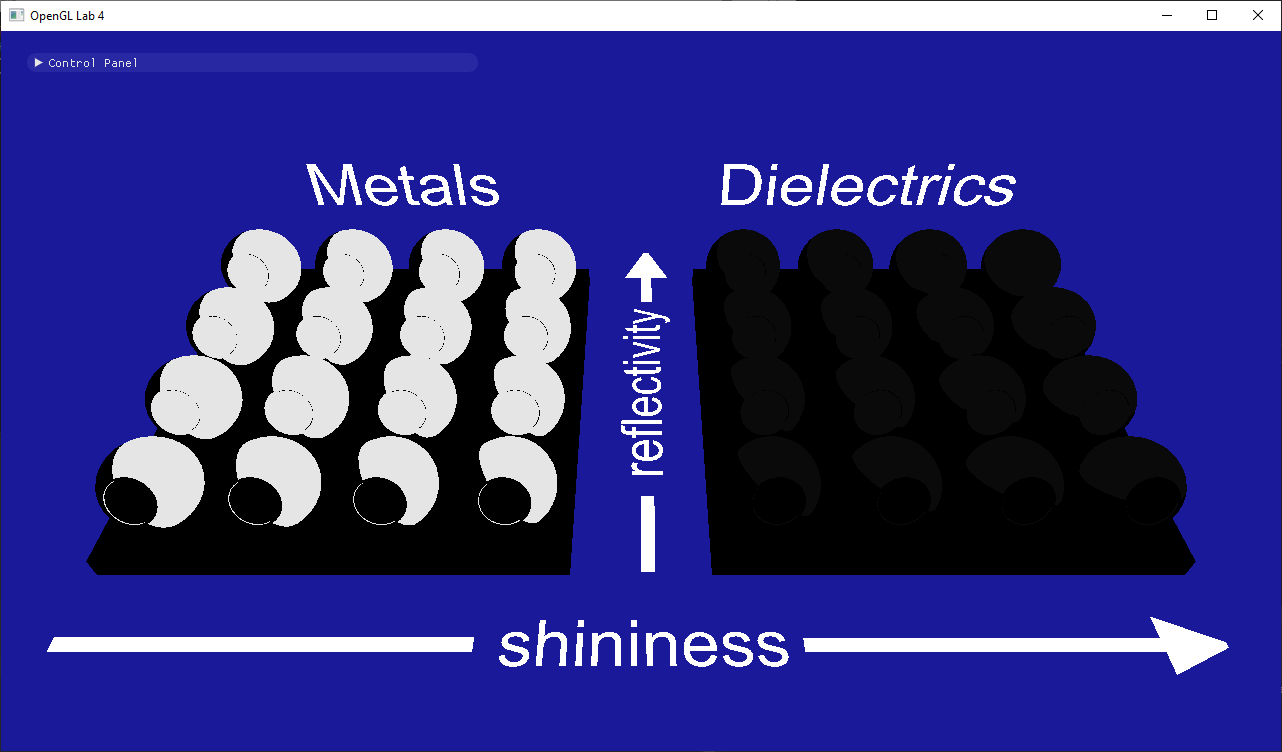

vec3(D) |

vec3(G) |

vec3(F) |

We do this because, in later labs, single pixels with NaN values will spread across the image when we apply post-processing effects. NaN outputs should be fixed so they don't appear.

The most common operations that can result in NaNs are:

- Division 0/0.

- Multiplying 0*∞. This is basically the same problem as before, as 1/0 = ∞. Basically, try to avoid dividing by 0 (or numbers very close to it).

- Trying to normalize a null or very short vector. Normalizing like that might internally become a 0/0 problem.

- Square root of negative numbers. Since we are only dealing with real numbers we don't get to play with imaginary ones.

- Power of negative numbers to exponents that are in the range $(-1, 1)$. Exponentiating to a number smaller than 1 is the same as taking some root of the number, so it's the same case as before. For example, $x^{0.5} = \sqrt{x}$.

- acos() or asin() of values outside the $[-1, 1]$ range.
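One common way to avoid these cases is to clamp the input before the risky operation. The sketch below shows the idea in plain C++; the helper names (`safeAcos`, `safePow`, `safeSqrt`, `safeDivide`) and the epsilon value are assumptions made for illustration, and the same pattern can be written with GLSL's `clamp()` and `max()`.

```cpp
#include <algorithm>
#include <cmath>

// Clamp to [-1, 1] first, so rounding errors such as 1.0000001 cannot produce NaN.
float safeAcos(float x)
{
    return std::acos(std::max(-1.0f, std::min(1.0f, x)));
}

// Clamp the base to zero before raising it to a (possibly fractional) power.
float safePow(float base, float exponent)
{
    return std::pow(std::max(base, 0.0f), exponent);
}

// Clamp to zero before taking the square root.
float safeSqrt(float x)
{
    return std::sqrt(std::max(x, 0.0f));
}

// Keep the denominator away from zero. This assumes the denominator is meant
// to be non-negative, as is the case for clamped dot products.
float safeDivide(float numerator, float denominator, float epsilon = 1e-6f)
{
    return numerator / std::max(denominator, epsilon);
}
```

Clamping changes the result slightly near the problem values, which is usually an acceptable trade for never writing NaN to the framebuffer.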

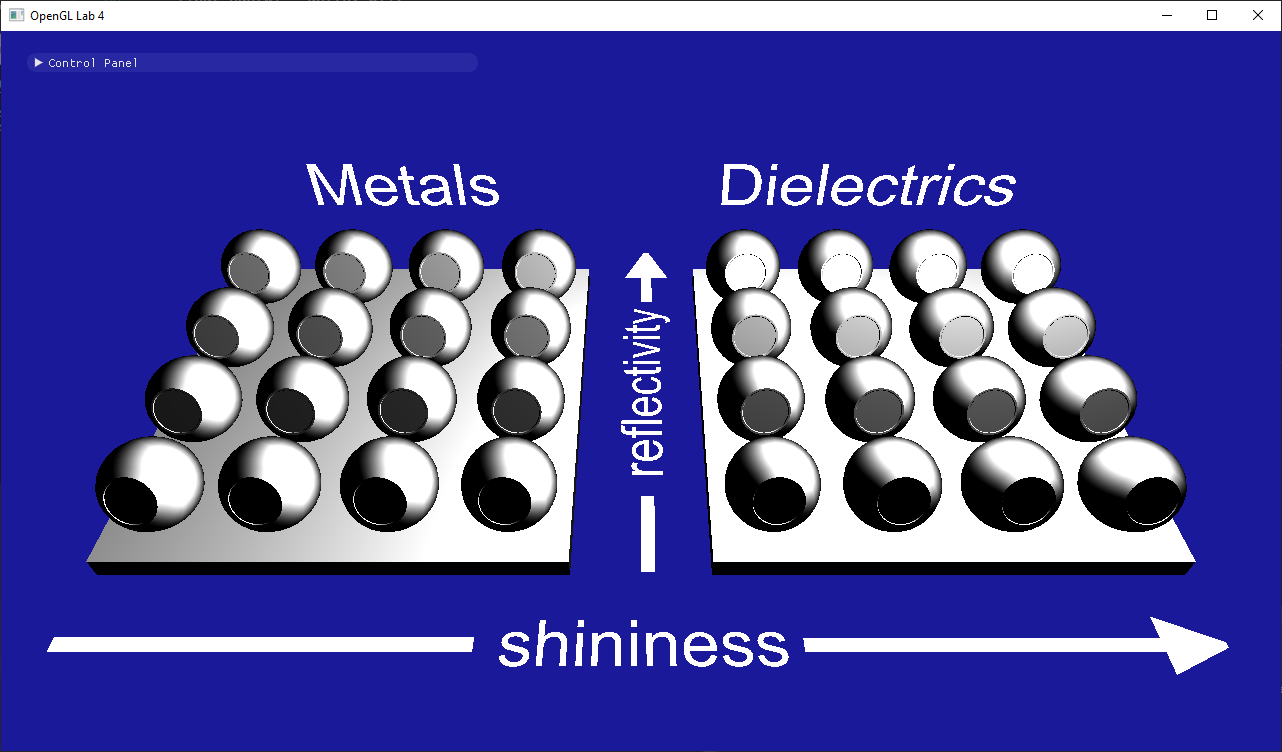

Material parameters

dielectric_term = brdf * $(n\cdot \omega_i) L_i$ + $(1-F(\omega_i))$ * diffuse_term

metal_term = brdf * material_color * $(n\cdot \omega_i) L_i$

Blend between these two terms using the material_metalness parameter, $m$:

microfacet_term = $m$ * metal_term + $(1-m)$ * dielectric_term

Finally, blend the result with the pure diffuse term using the material_reflectivity parameter, $r$:

return $r$ * microfacet_term + $(1-r)$ * diffuse_term

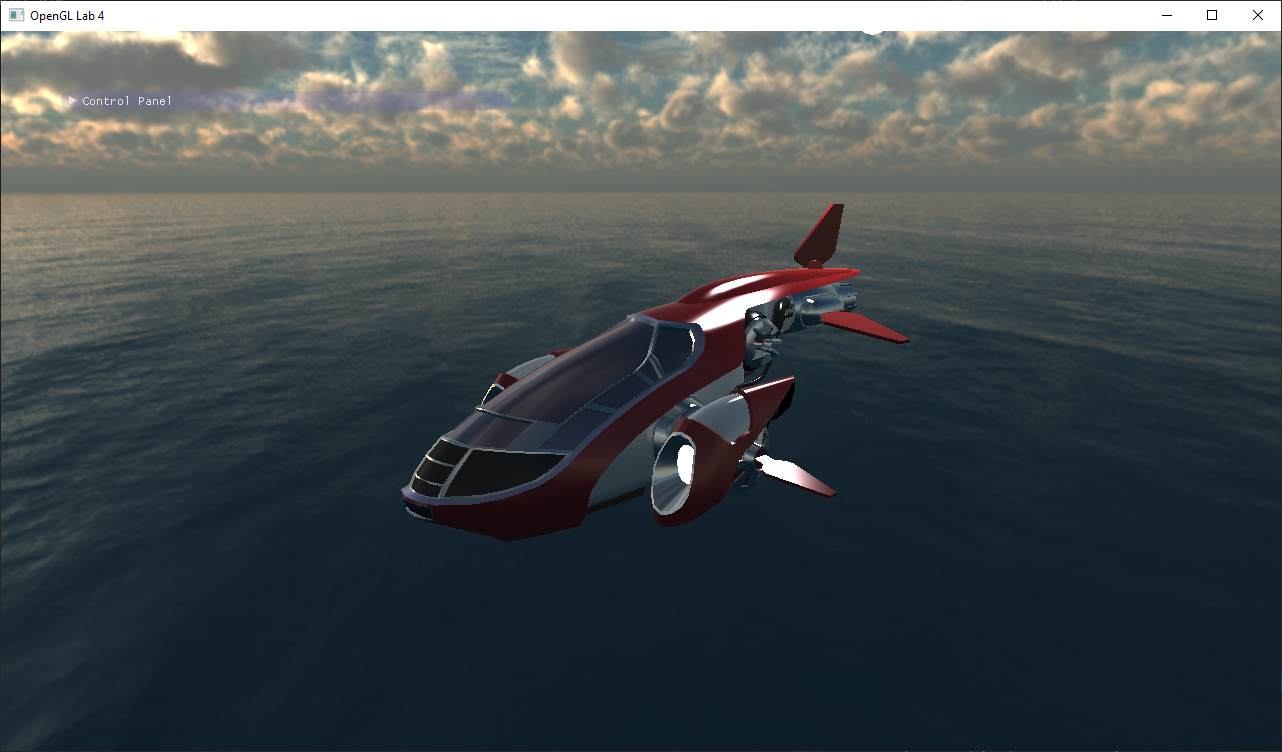

Part 2: Indirect illumination

Now stop playing, and let's get some indirect illumination going. While the first part of this tutorial was an exercise in doing everything as physically correctly as we could, this part is going to contain some heavy-duty cheating. You may have noticed that your scenes look pretty dark, since they are only lit by a single point-light source. In this part we are going to add an environment map to the scene and then use it to illuminate our model.

Loading and viewing the environment map

First, go to initFullScreenQuad in lab4_main.cpp. Here you need to create the vertex array object with the geometry that will be sent to the GPU when we render a full-screen quad later. Since we are always rendering the full screen, we don't want to apply any projection or view matrix to these two triangles, so we can send screen-space positions to the GPU directly. That way the vertex shader only has to pass them through (you can see this in background.vert). The screen coordinates go from $(-1, -1)$ (bottom left) to $(1, 1)$ (top right).

Hint: In the code from labs 1 and 2, more than just the position is sent to the shader. Here you should only send the positions, no normals or colors.

Also take into account that the vertex shader expects 2 components per vertex, instead of the 3 components we sent in previous labs.

Now go to drawFullScreenQuad, also in lab4_main.cpp, and implement here the code to draw the vertex array object you just created.

Note: you need to disable depth testing before drawing, and restore its previous state when you are done. You can use glGetBooleanv(GL_DEPTH_TEST, &depth_test_enabled) to query the current state and glDisable(GL_DEPTH_TEST) to turn the test off.
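As a starting point for the geometry, the position data for the quad could look like the sketch below: two triangles with two floats per vertex, covering the screen from $(-1, -1)$ to $(1, 1)$. The array name `kFullScreenQuadPositions` and the vertex order are assumptions for illustration; uploading the data to a buffer and drawing it works as in the earlier labs and is left out here.

```cpp
#include <array>

// Two triangles covering the whole screen.
// Each vertex has only 2 components (x, y), so 6 vertices give 12 floats.
const std::array<float, 12> kFullScreenQuadPositions = {
    -1.0f, -1.0f,   1.0f, -1.0f,   1.0f,  1.0f,  // first triangle
    -1.0f, -1.0f,   1.0f,  1.0f,  -1.0f,  1.0f   // second triangle
};
```

When the attribute pointer is set up, the component count should then be 2 rather than 3, and the draw call should cover 6 vertices.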

After this, we're actually already loading the environment map for you, along with some preconvolved irradiance and reflection maps (more on that later). All you have to do to see it is to add the following lines after Task 4.3 in lab4_main.cpp.

glUseProgram(backgroundProgram);

labhelper::setUniformSlow(backgroundProgram, "environment_multiplier", environment_multiplier);

labhelper::setUniformSlow(backgroundProgram, "inv_PV", inverse(projectionMatrix * viewMatrix));

labhelper::setUniformSlow(backgroundProgram, "camera_pos", cameraPosition);

drawFullScreenQuad();

scenes/envmaps/001.hdr

Diffuse lighting with the irradiance map

The preconvolved irradiance map is stored in scenes/envmaps/001_irradiance.hdr. Look at the contents of that image (below) and make sure you understand what it contains. We also load it and send it to the shader, so all you have to do is the lookup.

scenes/envmaps/001_irradiance.hdr

In the calculateIndirectIllumination() function, steal the code from the background shader that calculates the spherical coordinates of a direction to fetch radiance, and use it to fetch the irradiance you want from the irradiance map using the world-space normal $n_{ws} = $ viewInverse * $n$.
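For reference, a typical direction-to-spherical-coordinates mapping for a latitude-longitude environment map looks like the C++ sketch below. This is an assumption about what the background shader does, so check it against the actual code there; `Vec2`, `Vec3`, and `sphericalLookup` are hypothetical names.

```cpp
#include <algorithm>
#include <cmath>

// Minimal stand-ins for GLSL's vec2 and vec3 (illustration only).
struct Vec2 { float x, y; };
struct Vec3 { float x, y, z; };

// Maps a normalized world-space direction to texture coordinates in [0, 1]
// for a latitude-longitude (equirectangular) map with +y as "up".
Vec2 sphericalLookup(const Vec3& dir)
{
    const float pi = 3.14159265358979f;
    // Polar angle from the +y axis; clamp to avoid acos() of values outside [-1, 1].
    float theta = std::acos(std::max(-1.0f, std::min(1.0f, dir.y)));
    // Azimuth around the y axis, shifted into [0, 2*pi).
    float phi = std::atan2(dir.z, dir.x);
    if (phi < 0.0f)
    {
        phi += 2.0f * pi;
    }
    return {phi / (2.0f * pi), theta / pi};
}
```

In GLSL the two-argument `atan(y, x)` takes the place of `std::atan2`, and the resulting coordinates are passed to `texture()` with the irradiance map.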

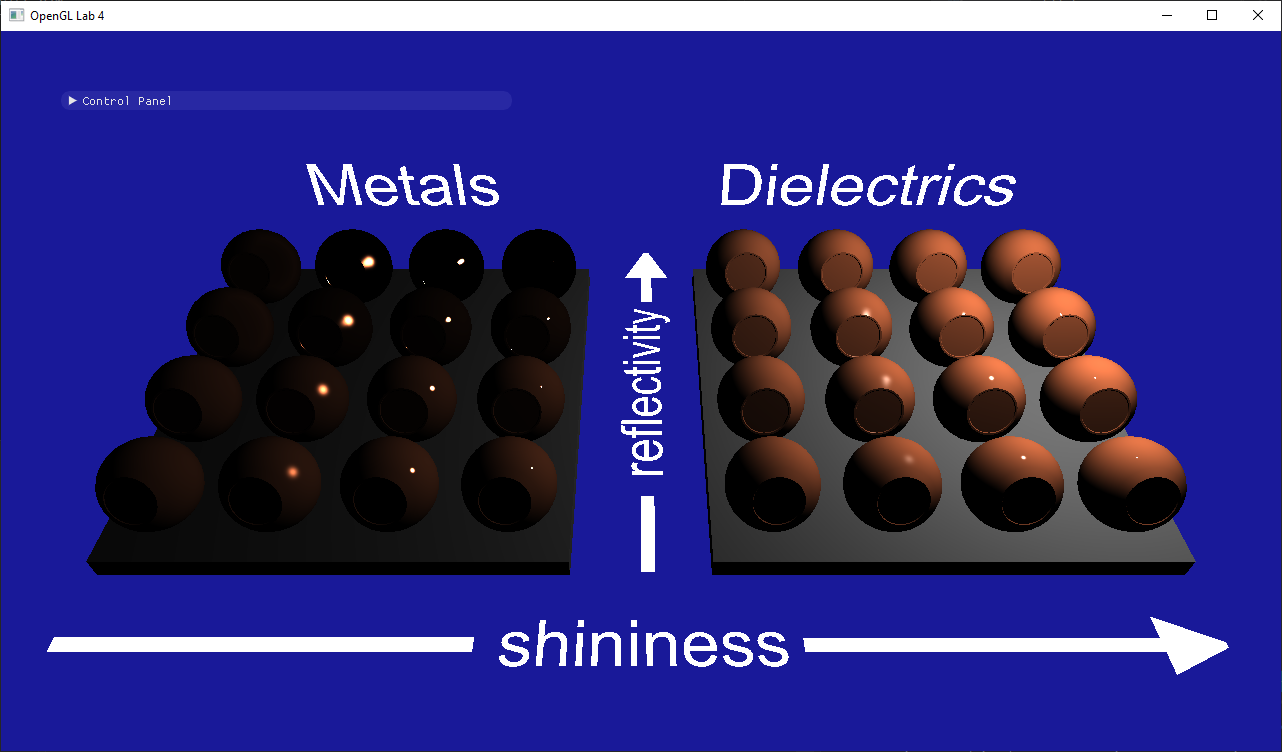

Then use this to calculate your diffuse_term = material_color * (1.0 / PI) * irradiance and return that value. Now run your code and enjoy the result:

Glossy reflections using preconvolved environment maps

Look in the scenes/envmaps/ folder (or at the first four images below) to see what we have done.

scenes/envmaps/001_dl_[0-4].hdr

Calculate the reflection direction (Hint: use the reflect() function). Then calculate the spherical coordinates (Hint: Look in the background.frag shader) and look up the pre-convolved incoming radiance using:

roughness = $\sqrt{\sqrt{2 / (s + 2)}}$

$L_i$ = environment_multiplier * textureLod(reflectionMap, lookup, roughness * 7.0).xyz
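To get a feeling for how shininess selects a level of the reflection map, the conversion can be tried on its own. The C++ sketch below uses hypothetical function names (`shininessToRoughness`, `reflectionMapLod`); the factor 7.0 is taken from the lookup above.

```cpp
#include <cmath>

// roughness = sqrt(sqrt(2 / (s + 2))), where s is material_shininess.
float shininessToRoughness(float s)
{
    return std::sqrt(std::sqrt(2.0f / (s + 2.0f)));
}

// Level of detail passed to textureLod(): roughness scaled by 7.0.
float reflectionMapLod(float s)
{
    return shininessToRoughness(s) * 7.0f;
}
```

A shininess of 0 gives roughness 1 and selects level 7, the blurriest one, while larger shininess values move toward level 0, the sharpest.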

dielectric_term = $F(\omega_i) L_i$ + $(1-F(\omega_i))$ * diffuse_term

metal_term = $F(\omega_i)$ * material_color * $L_i$

Blend these terms using material_metalness and material_reflectivity just as you did for the direct illumination. Now start the program again and pat yourself on the shoulder.

When done, show your result to one of the assistants. Have the finished program running and be prepared to explain what you have done.