Introduction

In the previous tutorials, we have rendered directly to the default framebuffer. In this tutorial, we are going to introduce framebuffer objects, so that we can render to a texture (updating it every frame) and do post processing effects.

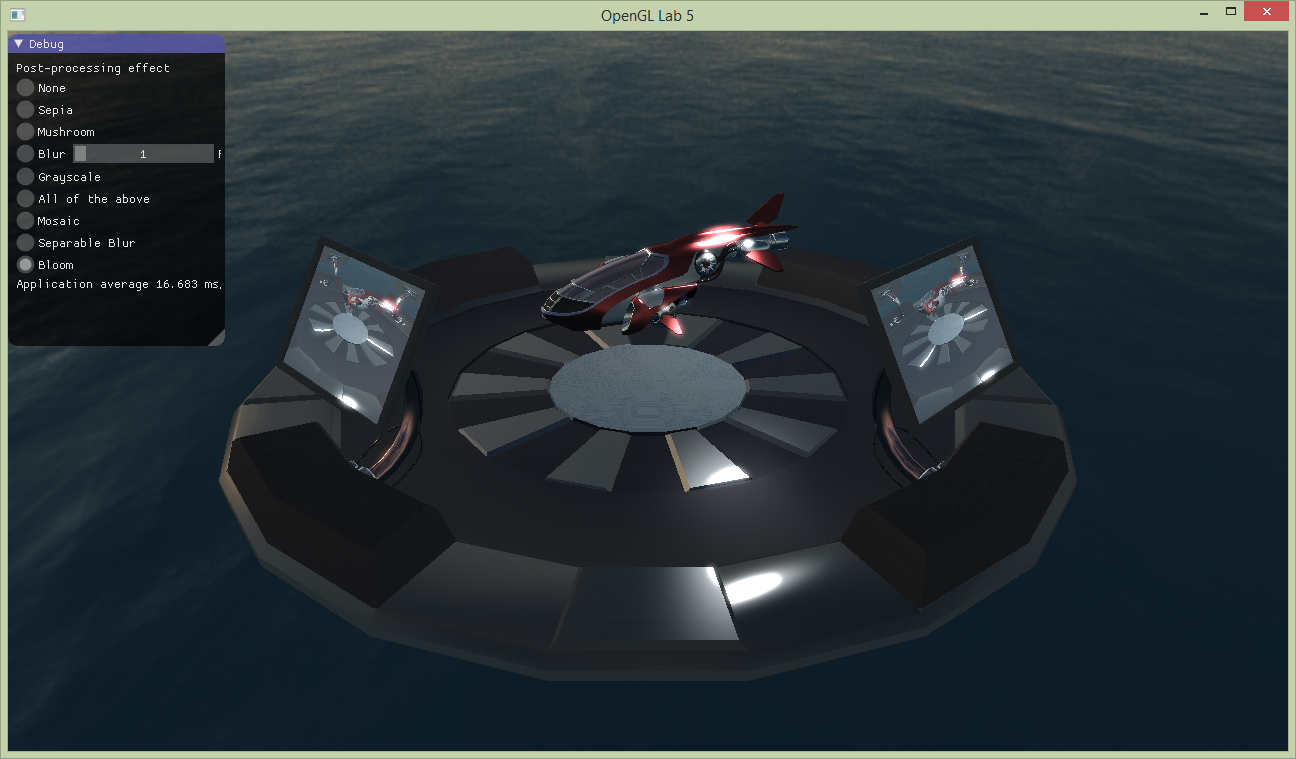

Run the code and observe the fighter on the landing pad. The shading is very dull. Start by copying your implementation of calculateDirectIllumination() and calculateIndirectIllumination() in simple.frag from tutorial 4 to the place-holder implementation in this tutorial (the shader is called simple.frag also in this tutorial). The result should look like below:

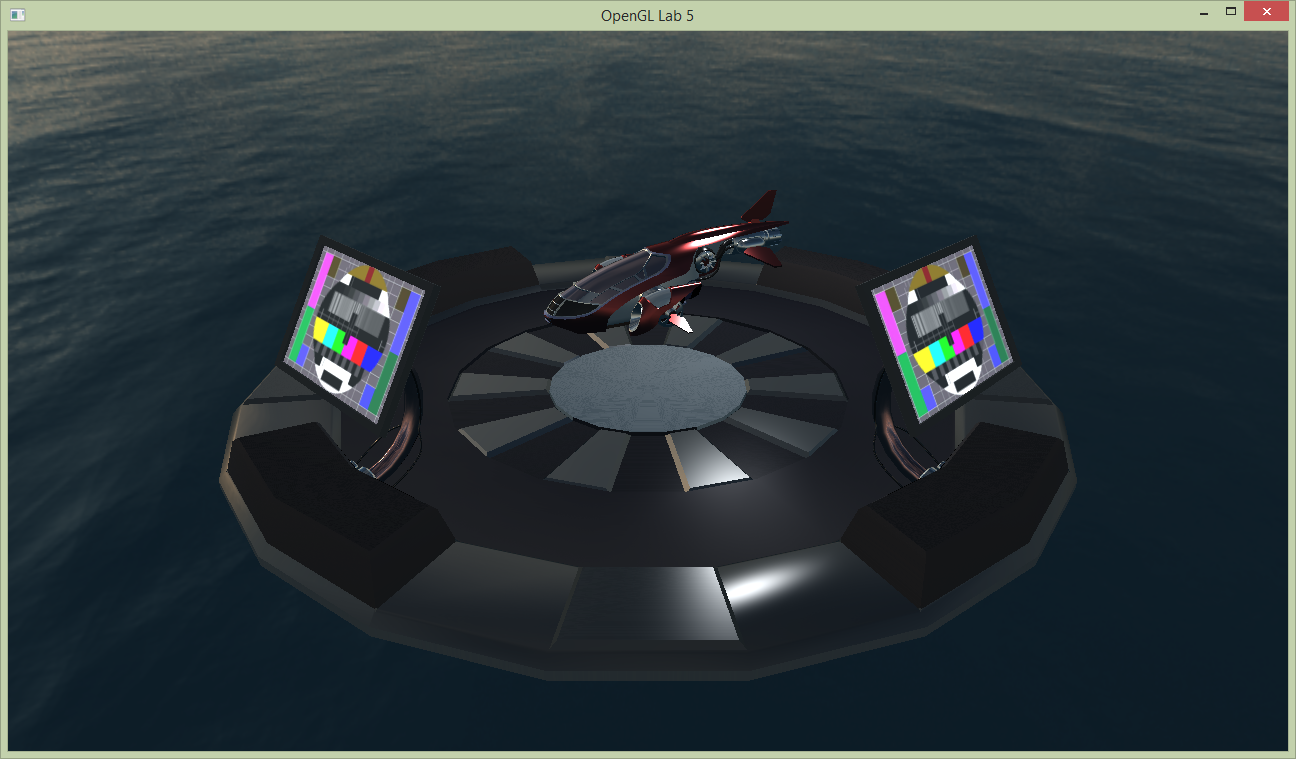

There are monitors beside the landing pad which currently have a static emissive texture showing a TV test image. We will replace this texture with a video feed from a security camera hovering on the opposite side of the platform. This is done in two steps:

- Render from the security camera's point of view to a framebuffer.

- Use the color texture from the framebuffer as emissive texture.

First we need to make a framebuffer. We have provided a skeleton for making framebuffers in FboInfo, but it is not completed yet. In the constructor, we have generated two textures, one for color and one for depth, but we have not yet bound them together to a framebuffer.

Task 1: Setting up Framebuffer objects

Similarly to how we create a vertex array object and bind buffers to it (see tutorial 1 and 2), we create a framebuffer and attach textures to it. At task 1, generate a framebuffer and bind it, then attach the color and depth textures:

// >>> @task 1

glGenFramebuffers(1, &framebufferId);

glBindFramebuffer(GL_FRAMEBUFFER, framebufferId);

// bind the texture as color attachment 0 (to the currently bound framebuffer)

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, GL_TEXTURE_2D, colorTextureTarget, 0);

glDrawBuffer(GL_COLOR_ATTACHMENT0);

// bind the texture as depth attachment (to the currently bound framebuffer)

glFramebufferTexture2D(GL_FRAMEBUFFER, GL_DEPTH_ATTACHMENT, GL_TEXTURE_2D, depthBuffer, 0);

In initGL(), there is a section devoted to the creation of framebuffers. We will create five framebuffers and push them to the vector fboList, which is enough for the mandatory and optional assignments in this tutorial.

int w, h;

SDL_GetWindowSize(g_window, &w, &h);

const int numFbos = 5;

for (int i = 0; i < numFbos; i++)

fboList.push_back(FboInfo(w, h));

The rendering itself happens in display(). Have a look at how this is implemented.

Task 2: Rendering to the FBO

We will now render from the security camera's point of view, and we do this before we render from the user's camera. Let's render to the first framebuffer in our fboList. Bind the framebuffer at @task 2 with:

// >>> @task 2

FboInfo &securityFB = fboList[0];

glBindFramebuffer(GL_FRAMEBUFFER, securityFB.framebufferId);

glViewport(0, 0, securityFB.width, securityFB.height);

glClearColor(0.2, 0.2, 0.8, 1.0);

glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

Then render the scene using securityCamViewMatrix and securityCamProjectionMatrix.

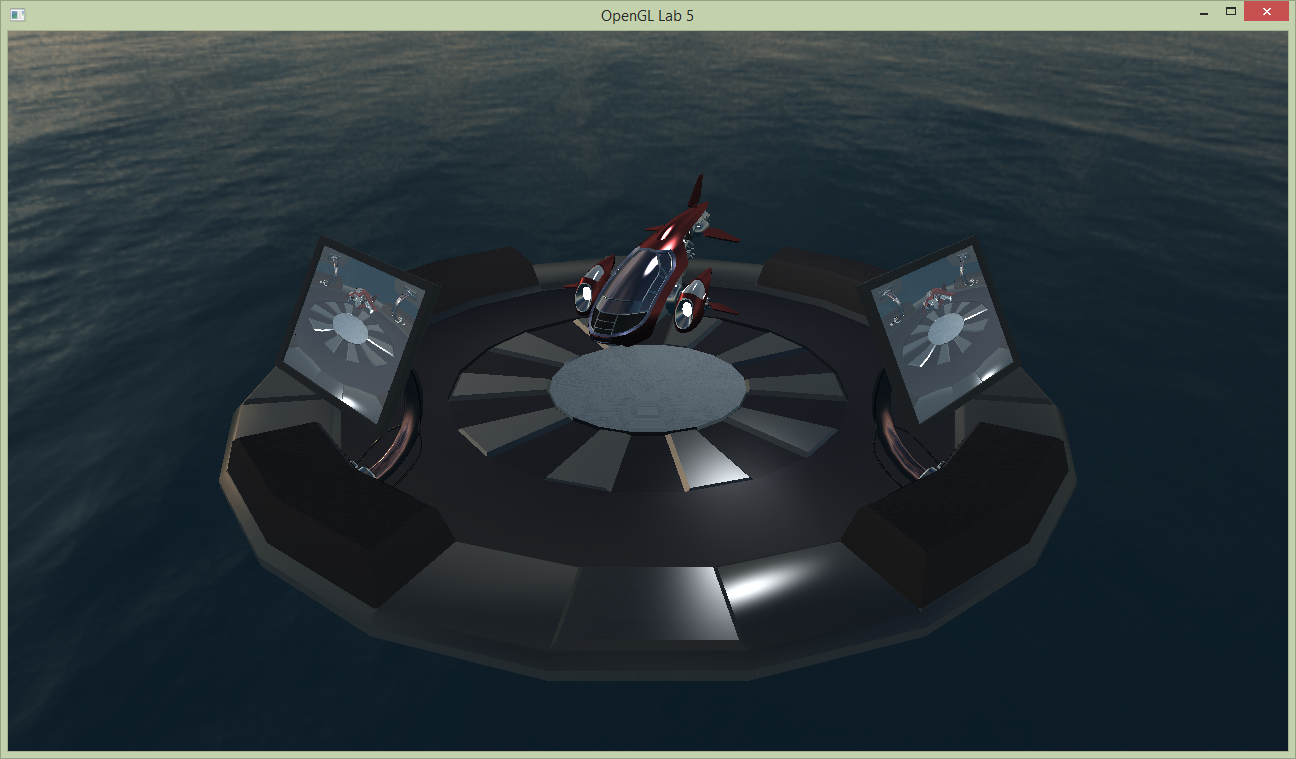

Now we will use the color attachment as a texture (emissiveMap in simple.frag) when we render the landing pad from the user's view below. We will just change the texture id used in the landing pad model.

labhelper::Material &screen = landingpadModel->m_materials[8];

screen.m_emission_texture.gl_id = securityFB.colorTextureTarget;

Task 3: Post processing

Post processing is perhaps the most common use for render to texture today, with the very likely exception of shadow maps. Most, if not all, games make use of a post-processing pass to change aspects of the look of the game, creating effects such as motion blur, depth of field, bloom, simple color changes, magic mushrooms, and more.

Conceptually, post processing is simple: instead of rendering the scene to the screen, it is rendered to an off-screen render target of the same size. Next, this render target is used as a texture when rendering a full-screen quad, and a fragment shader can be used to change the appearance. Remember that a fragment shader is executed once for each fragment, and for a full-screen quad this is the same as once for each pixel.
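To make the idea concrete, here is a minimal CPU-side sketch of a post-processing pass. It is only an analogy: the types Color, Image and PixelShader and the function postProcess are hypothetical names invented for this example and do not exist in the tutorial code. On the GPU, the loop is replaced by rasterizing the full-screen quad.

```cpp
#include <cassert>
#include <functional>
#include <vector>

// Hypothetical CPU-side types for illustration; none of these exist in the tutorial code.
struct Color { float r, g, b; };

struct Image {
    int width;
    int height;
    std::vector<Color> pixels; // row-major, width * height entries

    const Color& at(int x, int y) const { return pixels[y * width + x]; }
};

// Stands in for the fragment shader: it may read anywhere in the off-screen
// image, but produces exactly one output color for the pixel (x, y).
using PixelShader = std::function<Color(const Image& input, int x, int y)>;

// Stands in for drawing the full-screen quad: the shader runs once per pixel
// of an output image that has the same size as the off-screen render target.
Image postProcess(const Image& offscreen, const PixelShader& shader)
{
    assert(static_cast<int>(offscreen.pixels.size()) == offscreen.width * offscreen.height);
    Image result{offscreen.width, offscreen.height, {}};
    result.pixels.reserve(offscreen.pixels.size());
    for (int y = 0; y < offscreen.height; ++y)
        for (int x = 0; x < offscreen.width; ++x)
            result.pixels.push_back(shader(offscreen, x, y));
    return result;
}
```

Passing a shader that returns 1 - color for each channel would, for example, produce an inverted image. The point of the sketch is only that the shader sees the whole input image but writes a single output pixel per invocation.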

Now, replace the default framebuffer with the next (unused) FBO in fboList when rendering from the user camera. We will use this as a texture in the post processing pass. After this rendering, bind the color texture of the just used framebuffer to texture unit 0. Next, we will perform the post processing pass:

- Bind the default framebuffer and clear it.

- Set postFxShader as the active shader program.

- Set uniforms for postFxShader (see below).

- (Remember to bind the texture to texture unit 0.)

- Draw two triangles that cover the viewport to start a fragment shader per pixel (see below).

labhelper::setUniformSlow(postFxShader, "time", currentTime);

labhelper::setUniformSlow(postFxShader, "currentEffect", currentEffect);

labhelper::setUniformSlow(postFxShader, "filterSize", filterSizes[filterSize - 1]);

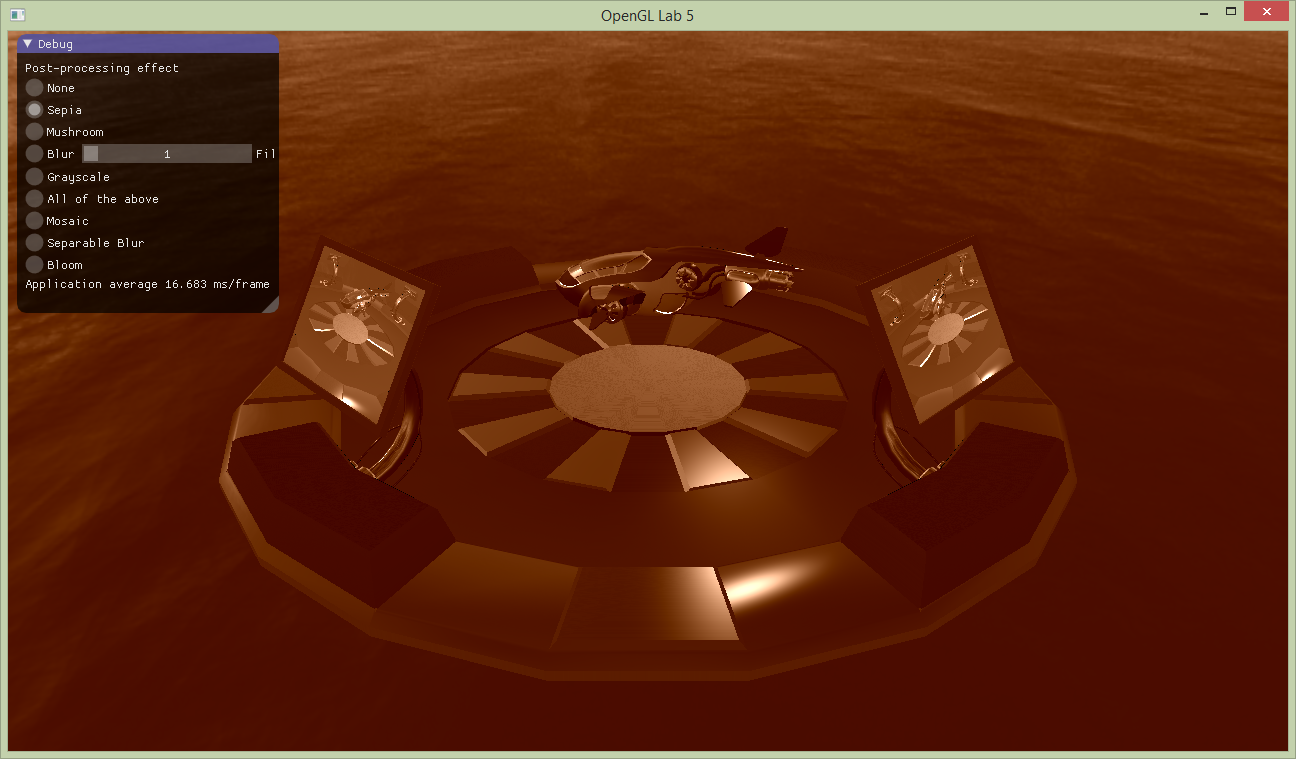

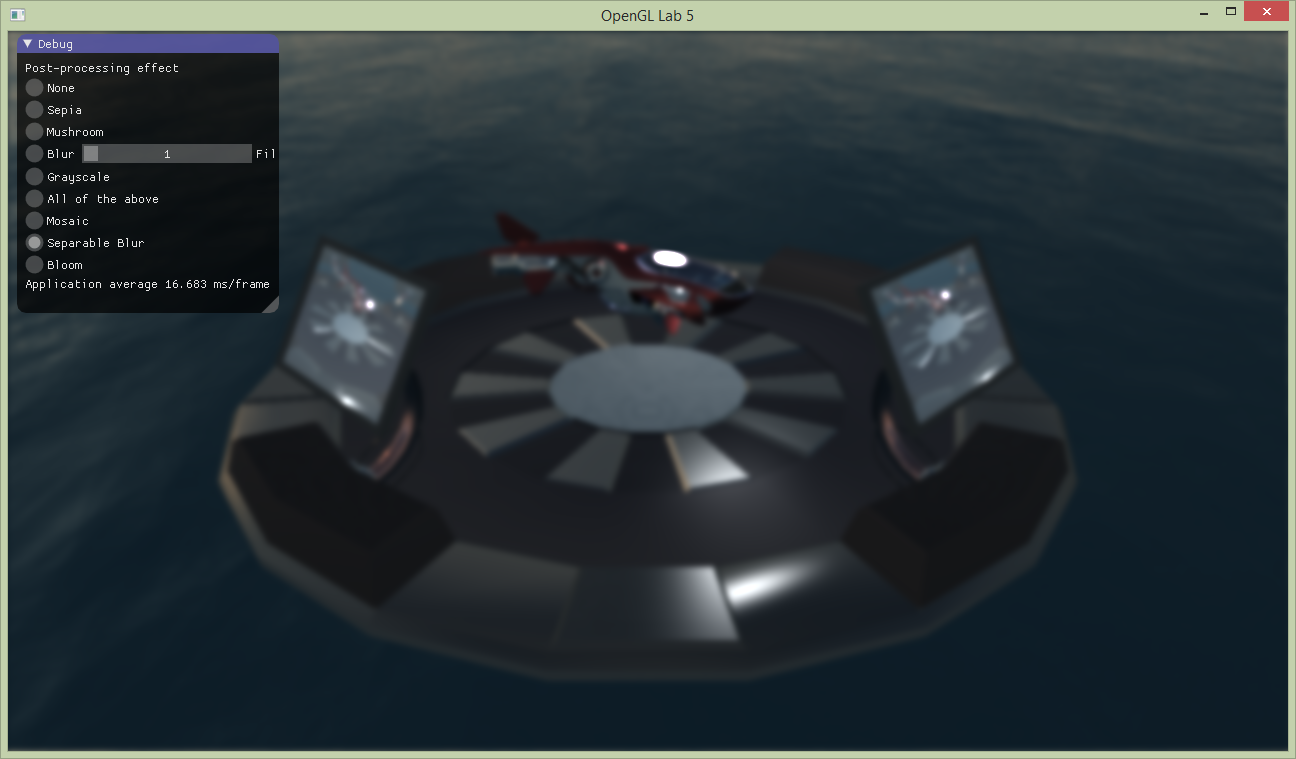

labhelper::drawFullScreenQuad();

The effect that postFxShader currently applies can be controlled with the uniform currentEffect, which is set from the GUI (just uncomment gui(); in the rendering loop in main()). With the Sepia post-processing effect, which mimics a toning technique of black-and-white photography, the result looks like below.

Inspect the postFx.frag shader again. There are several functions defined that can be used to achieve different effects. Notice that they affect different properties to achieve the effect: the wobbliness is achieved by changing the input coordinate, blur samples the input many times, while the last two simply change the color sample value.

Note that we use a helper function to access the texture:

vec4 textureRect(in sampler2D tex, vec2 rectangleCoord)

{

return texture(tex, rectangleCoord / textureSize(tex, 0));

}
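The division in textureRect converts a coordinate given in pixels into the normalized [0, 1] range that texture() expects. The same arithmetic can be sketched on the CPU as follows. Vec2 and rectToNormalized are hypothetical names made up for this illustration, and texSize plays the role of textureSize(tex, 0).

```cpp
#include <cassert>

// Hypothetical stand-in for GLSL's vec2; not part of the tutorial code.
struct Vec2 { float x, y; };

// Mirrors the division inside textureRect: pixel coordinates in,
// normalized [0, 1] texture coordinates out.
Vec2 rectToNormalized(Vec2 rectangleCoord, Vec2 texSize)
{
    assert(texSize.x > 0.0f && texSize.y > 0.0f);
    return { rectangleCoord.x / texSize.x, rectangleCoord.y / texSize.y };
}
```

For a 1280 x 720 texture, the pixel coordinate (640, 360) maps to (0.5, 0.5), the center of the texture. Working in pixel coordinates is convenient for effects that think in whole pixels, such as blur offsets.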

The functions are called from the main function in the shader. Try out different ones and combine them. Notice that one of the effects is a variation that chains all the others (except grayscale).

vec2 mushrooms(vec2 inCoord);

vec3 blur(vec2 coord);

vec3 grayscale(vec3 sample);

vec3 toSepiaTone(vec3 rgbSample);

Experiment with the different effects. For example, change the colorization in the sepia tone effect: can you make it red? Also try combining them. Try to understand how each one produces its result.
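As a hint for the color-only effects, the sketch below shows one common way to build grayscale and toning on the CPU. It is an assumption, not a copy of postFx.frag: the provided shader may use different weights or a different formula. Vec3, luminance and applyTone are hypothetical names, and the weights are the Rec. 709 luma coefficients.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for GLSL's vec3; not part of the tutorial code.
struct Vec3 { float r, g, b; };

// Perceptual brightness using Rec. 709 weights (an assumption; the
// tutorial's shader may weight the channels differently).
float luminance(Vec3 c)
{
    return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
}

// Grayscale: every channel gets the same brightness value.
Vec3 grayscale(Vec3 c)
{
    const float l = luminance(c);
    return { l, l, l };
}

// Toning: scale the brightness by a tint color. A warm brownish tint gives
// a sepia-like look; a tint of (1, 0, 0) gives a red-toned image.
Vec3 applyTone(Vec3 c, Vec3 tint)
{
    const float l = luminance(c);
    return { l * tint.r, l * tint.g, l * tint.b };
}
```

With this formulation, changing the colorization only means changing the tint: the brightness information comes from the sample, and the hue comes entirely from the tint.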

Task 4: Post processing - Mosaic

You shall now add another effect. The effect is called Mosaic and the result is shown below. Each square block of pixels shows the color of one single pixel within that block (a single sample, no averaging needed), for example the pixel in the block's top-right corner. Implement this effect by adding a new function in the fragment shader. Consider the pre-made effects: what part of the data do you need to change?

When done, show your result to one of the assistants. Have the finished program running and be prepared to explain what you have done.

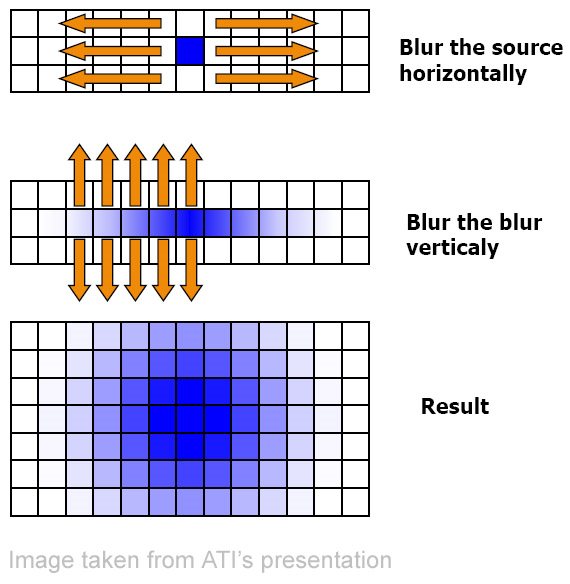
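If you get stuck, the following CPU-side sketch shows one possible way to pick the shared sample position for a block. It is not the reference solution: Vec2, mosaicCoord and the parameter blockSize are hypothetical, and this variant snaps to the block corner with the lowest coordinates rather than the top-right one. Translating the idea to GLSL is left to you.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for GLSL's vec2; not part of the tutorial code.
struct Vec2 { float x, y; };

// Snaps a pixel coordinate to the lowest-coordinate corner of the square
// block that contains it, so every pixel in a block samples the same place.
Vec2 mosaicCoord(Vec2 coord, float blockSize)
{
    assert(blockSize > 0.0f);
    return { std::floor(coord.x / blockSize) * blockSize,
             std::floor(coord.y / blockSize) * blockSize };
}
```

With a block size of 16, the coordinates (37.5, 12.5) and (47.5, 15.5) both map to (32, 0), so both pixels would show the same color. Like the wobble effect, this changes the input coordinate and leaves the color sample untouched.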

Optional 5: Efficient Blur and Bloom

Heavy blur requires sampling a large area. To implement such large filter kernels efficiently, we can exploit the fact that the Gaussian filter kernel can be decomposed into a vertical and horizontal component, which are then executed as two consecutive passes. The process is illustrated below.

To implement this in our tutorial, we will use two more FBOs: one to store the result of the first, horizontal, blur pass, and then another to receive the final blur after the vertical blur pass. Note that, in practice, we can just ping-pong between buffers, to save storage space. However, this adds confusion, and we want the blur in a separate buffer to create bloom.
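The separability claim can be checked on the CPU with a small sketch. The code below is an illustration under stated assumptions, not the provided shaders: the names Image, gaussianKernel, blurPass and blur2D are invented here, the image has a single channel, and edges are handled by clamping. It runs one horizontal and one vertical 1D pass and compares the result with a full 2D Gaussian.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

using Image = std::vector<std::vector<float>>; // image[y][x], single channel

int clampIndex(int i, int size) { return std::max(0, std::min(i, size - 1)); }

// Normalized 1D Gaussian weights for the offsets -radius..radius.
std::vector<float> gaussianKernel(int radius, float sigma)
{
    assert(radius >= 0 && sigma > 0.0f);
    std::vector<float> k(2 * radius + 1);
    float sum = 0.0f;
    for (int i = -radius; i <= radius; ++i) {
        k[i + radius] = std::exp(-(i * i) / (2.0f * sigma * sigma));
        sum += k[i + radius];
    }
    for (float& w : k) w /= sum;
    return k;
}

// One 1D pass: horizontal for (dx, dy) = (1, 0), vertical for (0, 1).
Image blurPass(const Image& src, const std::vector<float>& k, int dx, int dy)
{
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int radius = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<float>(w, 0.0f));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int i = -radius; i <= radius; ++i)
                dst[y][x] += k[i + radius] *
                    src[clampIndex(y + i * dy, h)][clampIndex(x + i * dx, w)];
    return dst;
}

// Reference: the full 2D kernel, (2 * radius + 1)^2 samples per pixel.
Image blur2D(const Image& src, const std::vector<float>& k)
{
    const int h = static_cast<int>(src.size());
    const int w = static_cast<int>(src[0].size());
    const int radius = static_cast<int>(k.size()) / 2;
    Image dst(h, std::vector<float>(w, 0.0f));
    for (int y = 0; y < h; ++y)
        for (int x = 0; x < w; ++x)
            for (int j = -radius; j <= radius; ++j)
                for (int i = -radius; i <= radius; ++i)
                    dst[y][x] += k[i + radius] * k[j + radius] *
                        src[clampIndex(y + j, h)][clampIndex(x + i, w)];
    return dst;
}
```

Calling blurPass(blurPass(img, k, 1, 0), k, 0, 1) gives the same image as blur2D(img, k) up to floating-point rounding, but needs 2 * (2 * radius + 1) samples per pixel instead of (2 * radius + 1)^2. The intermediate image returned by the first pass corresponds to the extra FBO that stores the horizontal blur.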

We have provided you with shaders implementing the horizontal and vertical filter kernels, see shaders/horizontal_blur.frag and shaders/vertical_blur.frag. Load these together with the vertex shader shaders/postFx.vert, and store the references in variables named horizontalBlurShader and verticalBlurShader. To render the blur, use this algorithm:

- Render a full-screen quad into an fbo (here called horizontalBlurFbo).

  - Use the shader horizontalBlurShader.

  - Bind the postProcessFbo.colorTextureTarget as input frame texture.

- Render a full-screen quad into an fbo (here called verticalBlurFbo).

  - Use the shader verticalBlurShader.

  - Bind the horizontalBlurFbo.colorTextureTarget as input frame texture.

Optional 6: Bloom

Bloom, or glow, makes bright parts of the image bleed onto darker neighboring bits. This creates an effect akin to what our optical system produces when things are really bright. Therefore this can create the impression that parts of the image are far brighter than what can actually be represented on a screen. Cool. But how to do that?

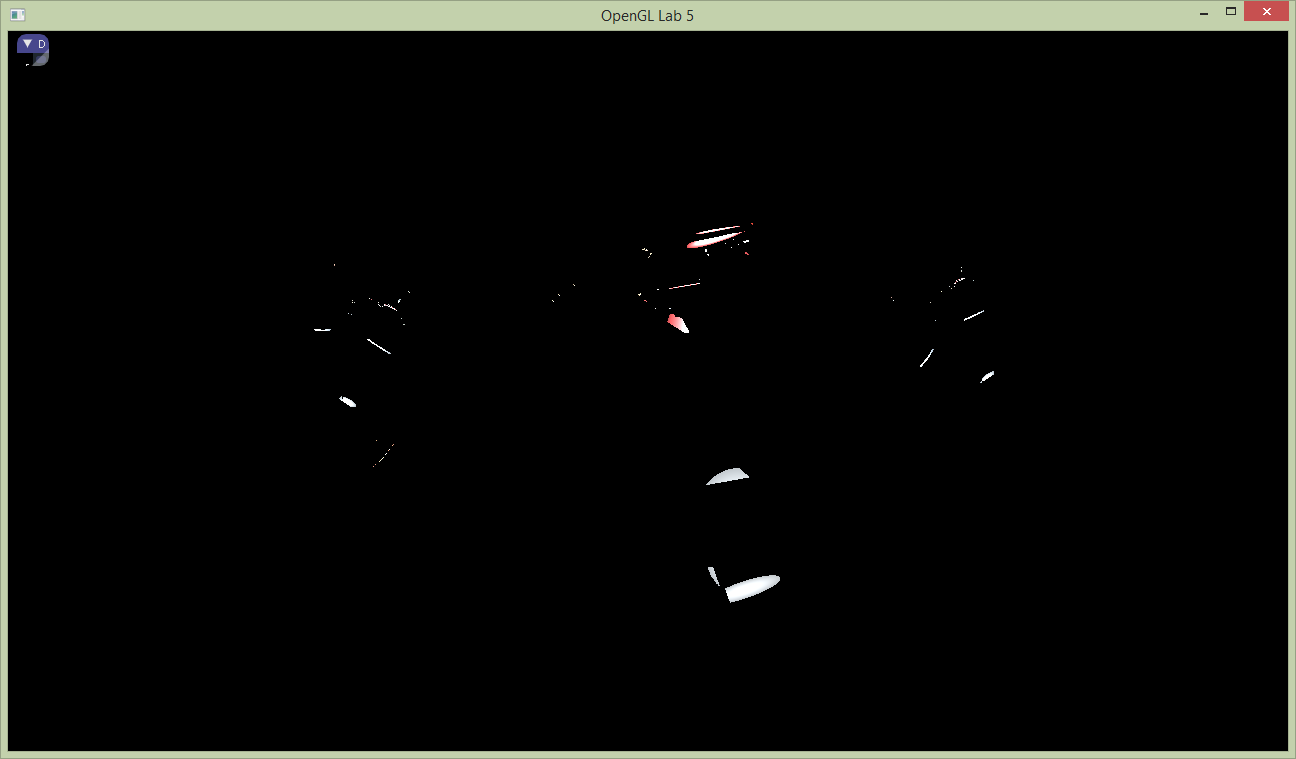

The only thing we really need to add is a cutoff pass, before blurring the image, to remove all the dark portions of the scene. There is a shader for this purpose too: shaders/cutoff.frag. Load the shader, use the fifth created FBO (here called cutoffFbo), and draw a full-screen pass into it. When visualized it should look like this:

Then use the cutoffFbo as input to the blur, which should produce a result much like the image below.

Finally, all we need to do is add this to the unblurred frame buffer (which should still be untouched in postProcessFbo). This can be achieved by simply rendering a full-screen quad into this frame buffer using additive blending. Another way is to bind the blurred result to a second texture unit during the post-processing pass, and sample and add it in the post-processing shader. In our case, the latter should be the easiest option. The screenshot below shows the bloom effect, where the blooming parts are also boosted by a factor of two to create a somewhat over-the-top bloom effect.
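The per-pixel arithmetic of the cutoff and the final addition can be sketched on the CPU as below. This is an assumption about how such passes typically work, not a transcription of shaders/cutoff.frag: Vec3, luminance, cutoff, addBloom and the parameters threshold and boost are hypothetical names, and the provided shader may decide what counts as bright in a different way.

```cpp
#include <cassert>

// Hypothetical stand-in for GLSL's vec3; not part of the tutorial code.
struct Vec3 { float r, g, b; };

// Perceptual brightness using Rec. 709 weights (an assumption).
float luminance(Vec3 c)
{
    return 0.2126f * c.r + 0.7152f * c.g + 0.0722f * c.b;
}

// Cutoff pass: keep only pixels brighter than the threshold, black otherwise.
Vec3 cutoff(Vec3 c, float threshold)
{
    return luminance(c) > threshold ? c : Vec3{ 0.0f, 0.0f, 0.0f };
}

// Final composite: add the blurred bright parts on top of the unblurred
// frame, optionally boosted to exaggerate the glow.
Vec3 addBloom(Vec3 base, Vec3 blurredBright, float boost)
{
    return { base.r + boost * blurredBright.r,
             base.g + boost * blurredBright.g,
             base.b + boost * blurredBright.b };
}
```

In the pipeline described above, cutoff would run first, its output would go through the horizontal and vertical blur passes, and addBloom would be the last step of the post-processing shader. A boost of 2 corresponds to the exaggerated look mentioned in the text.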