Architecture

Emil Axelsson

AFP guest lecture, Feb 2012

Signal processing for mobile communication gets increasingly demanding

Old figures:

Industry needs to move to new, more parallel hardware architectures

Current signal processing code mostly written in C

A joint project with Ericsson, Chalmers and ELTE University (Budapest)

Aims to make digital signal processing (DSP) code more high-level

Method: Domain-specific language with associated compiler

Initial application: Radio base stations for mobile communication

Intension to be applicable for DSP in general

ANSI-C specification:

for (j = 0; j < L_frame; j++, p++, p1++)

{

t0 = L_mac (t0, *p, *p1);

}

corr[i] = t0;Equivalent loop optimized for specific processor:

#pragma MUST_ITERATE(80,160,80);

for (j = 0; j < L_frame; j++)

{

pj_pj = _pack2 (p[j], p[j]);

p0_p1 = _mem4_const(&p0[j+0]);

prod0_prod1 = _smpy2 (pj_pj, p0_p1);

t0 = _sadd (t0, _hi (prod0_prod1));

t1 = _sadd (t1, _lo (prod0_prod1));

p2_p3 = _mem4_const(&p0[j+2]);

prod0_prod1 = _smpy2 (pj_pj, p2_p3);

t2 = _sadd (t2, _hi (prod0_prod1));

t3 = _sadd (t3, _lo (prod0_prod1));

p4_p5 = _mem4_const(&p0[j+4]);

prod0_prod1 = _smpy2 (pj_pj, p4_p5);

t4 = _sadd (t4, _hi (prod0_prod1));

t5 = _sadd (t5, _lo (prod0_prod1));

p6_p7 = _mem4_const(&p0[j+6]);

prod0_prod1 = _smpy2 (pj_pj, p6_p7);

t6 = _sadd (t6, _hi (prod0_prod1));

t7 = _sadd (t7, _lo (prod0_prod1));

}

corr[i] = t0; corr[i+1] = t1;

corr[i+2] = t2; corr[i+3] = t3;

corr[i+4] = t4; corr[i+5] = t5;

corr[i+6] = t6; corr[i+7] = t7;Hand-optimized version probably not suited for a different processor; non-portable!

#pragma MUST_ITERATE(80,160,80);

for (j = 0; j < L_frame; j++)

{

pj_pj = _pack2 (p[j], p[j]);

p0_p1 = _mem4_const(&p0[j+0]);

prod0_prod1 = _smpy2 (pj_pj, p0_p1);

t0 = _sadd (t0, _hi (prod0_prod1));

t1 = _sadd (t1, _lo (prod0_prod1));

p2_p3 = _mem4_const(&p0[j+2]);

prod0_prod1 = _smpy2 (pj_pj, p2_p3);

t2 = _sadd (t2, _hi (prod0_prod1));

t3 = _sadd (t3, _lo (prod0_prod1));

p4_p5 = _mem4_const(&p0[j+4]);

prod0_prod1 = _smpy2 (pj_pj, p4_p5);

t4 = _sadd (t4, _hi (prod0_prod1));

t5 = _sadd (t5, _lo (prod0_prod1));

p6_p7 = _mem4_const(&p0[j+6]);

prod0_prod1 = _smpy2 (pj_pj, p6_p7);

t6 = _sadd (t6, _hi (prod0_prod1));

t7 = _sadd (t7, _lo (prod0_prod1));

}

corr[i] = t0; corr[i+1] = t1;

corr[i+2] = t2; corr[i+3] = t3;

corr[i+4] = t4; corr[i+5] = t5;

corr[i+6] = t6; corr[i+7] = t7;(Old) Idea: Automatically generate optimized code from high-level algoritm

Code generator:

High-level language more readable and maintainable

Flexible code generator makes code portable

Platform-specific optimizations still needed, but should ideally be specified separately from algorithm

Implementing a domain-specific language (DSL) from scratch is costly

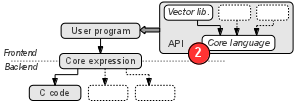

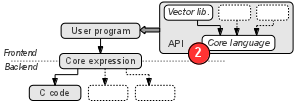

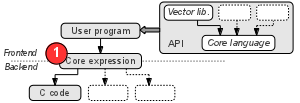

Embedded DSL (EDSL) [Hudak1998, Elliott2003]:

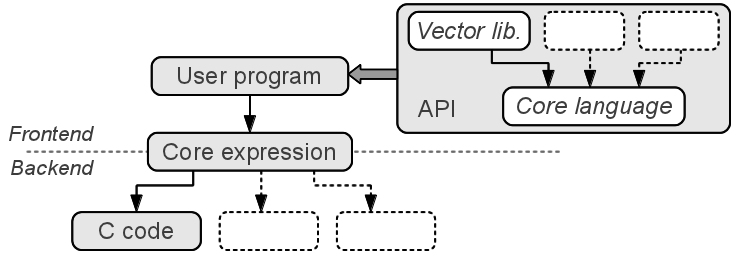

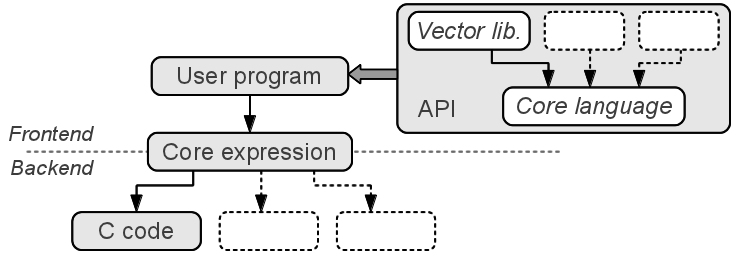

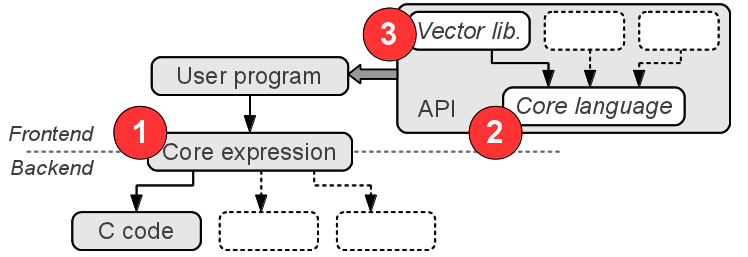

Language front-end:

Back-ends:

Sum of squares: $\sum_{i=1}^{n} i^{2}$

File header:

import qualified Prelude as P

import Feldspar -- Core language

import Feldspar.Vector -- List-like data structure

import Feldspar.Compiler -- C code generatorFeldspar code:

square x = x*x

sumSq :: Data Index -> Data Index

sumSq n = sum $ map square (1...n)Compare to standard Haskell:

sumSqHaskell :: Int -> Int

sumSqHaskell n = P.sum $ P.map square [1..n]Generated C code:

*Main> icompile sumSq

...

void test(struct array * mem, uint32_t v0, uint32_t * out)

{

uint32_t v2;

(* out) = 0;

for(uint32_t v1 = 0; v1 < v0; v1 += 1)

{

uint32_t v3;

v3 = (v1 + 1);

v2 = ((* out) + (v3 * v3));

(* out) = v2;

}

}Standard list operations:

length :: Vector a -> Data Length

sum :: Numeric a => Vector (Data a) -> Data a

map :: (a -> b) -> Vector a -> Vector b

zipWith :: (Syntax b, Syntax a) =>

(a -> b -> c) -> Vector a -> Vector b -> Vector c

...Enumeration:

(...) :: Data Index -> Data Index -> Vector (Data Index)Construction from a projection function:

indexed :: Data Length -> (Data Index -> a) -> Vector aEtc…

Scalar product: $\sum_{i=0}^{N-1} a_i \cdot b_i$

scProd :: Numeric a => Vector1 a -> Vector1 a -> Data a

scProd a b = sum $ indexed n $ \i -> a!i * b!i

where

n = length a `min` length bMore “functional”:

scProd2 :: Numeric a => Vector1 a -> Vector1 a -> Data a

scProd2 a b = sum $ zipWith (*) a bIdentical C code (see demo)!

sum, indexed and zipWith are meta-constructs implemented “on top of” FeldsparQuickCheck:

prop_scProd :: Vector1 Float -> Vector1 Float -> Data Bool

prop_scProd a b = scProd a b == scProd2 a b*Main> quickCheck prop_scProd

+++ OK, passed 100 tests.

Feldspar uses a special representation of the AST (see part III)

However, the representation is isomorphic to a recursive data type:

data Data a

where

Literal :: (...) => a -> Data a

Add :: (Num a, ...) => Data a -> Data a -> Data a

Sub :: (Num a, ...) => Data a -> Data a -> Data a

ForLoop :: (...)

=> Data Length

-> (Data Index -> Data st -> Data st)

-> Data st

...ForLoop)

A large number of primitive functions, e.g:

not :: Data Bool -> Data Bool

(&&) :: Data Bool -> Data Bool -> Data Bool

(||) :: Data Bool -> Data Bool -> Data Bool

div :: (Size a ~ Range a, Integral a) => Data a -> Data a -> Data a

mod :: (Size a ~ Range a, Integral a) => Data a -> Data a -> Data a

instance Numeric a => Num (Data a)

...Prelude functions

Complex constructs:

forLoop :: Type st

=> Data Length -- Number of steps

-> Data st -- Initial state

-> (Data Index -> Data st -> Data st) -- "Body" (single step)

-> Data st -- Final state

parallel :: Type a

=> Data Length -- Length of result

-> (Data Index -> Data a) -- Index projection

-> Data [a] -- Core language arrayAnd much more…

Core language version of sumSq:

sumSq2 :: Data Index -> Data Index

sumSq2 n = forLoop (n+1) 0 $ \i s -> s + i*i*Main> eval sumSq2 10

385

*Main> eval sumSq 10

385

The first n powers of 2:

powsOf2 :: Data Index -> Data [Index]

powsOf2 n = parallel n (2^)*Main> eval powsOf2 10

[1,2,4,8,16,32,64,128,256,512]

The Syntax class abstracts over Data:

class Syntax a

where

type Internal a

desugar :: a -> Data (Internal a)

sugar :: Data (Internal a) -> a

instance Type a => Syntax (Data a)

where

type Internal (Data a) = a

desugar = id

sugar = id

Vectors are syntactic sugar:

instance Syntax a => Syntax (Vector a)

where

type Internal (Vector a) = [Internal a]

...Tuples are also sugar, etc.

Type of vectors:

data Vector a

where

Indexed :: Data Length -> (Data Index -> a) -> Vector a

indexed :: Data Length -> (Data Index -> a) -> Vector a

indexed = Indexed

type Vector1 a = Vector (Data a)

type Vector2 a = Vector (Vector (Data a))(The Vector type provided by Feldspar is slightly more complicated.)

A vector is not a core expression, but it can be turned into one:

length :: Vector a -> Data Length

length (Indexed l _) = l

index :: Vector a -> Data Index -> a

index (Indexed _ ixf) i = ixf i

freeze :: Type a => Vector (Data a) -> Data [a]

freeze (Indexed len ixf) = parallel len ixf

fold :: Syntax a => (a -> b -> a) -> a -> Vector b -> a

fold f init b = forLoop (length b) init $

\i st -> f st (index b i)a and b are either core expressions or some other high-level type that can be turned into a core expression.See demo

Features:

http://hackage.haskell.org/package/syntacticdata AST dom a

where

Symbol :: ConsType a => dom a -> AST dom a

(:$:) :: Typeable a => AST dom (a :-> b)

-> AST dom (Full a)

-> AST dom b

type ASTF dom a = AST dom (Full a)Helper definitions:

newtype Full a = Full { result :: a }

newtype a :-> b = Partial (a -> b)

infixr :->

class ConsType a

instance ConsType (Full a)

instance ConsType b => ConsType (a :-> b)

infixl 1 :$:Standard expression GADT:

data Expr1 a

where

Lit1 :: Num a => a -> Expr1 a

Add1 :: Num a => Expr1 a -> Expr1 a -> Expr1 a

lit1 :: Int -> Expr1 Int

lit1 x = Lit1 x

add1 :: Expr1 Int -> Expr1 Int -> Expr1 Int

add1 x y = Add1 x y

eval1 :: Expr1 Int -> Int

eval1 (Lit1 x) = x

eval1 (Add1 x y) = eval1 x + eval1 y*Main> eval1 (lit1 4 `add1` lit1 5)

9

Same expression type represented using AST:

data NumDomain2 a

where

Lit2 :: Num a => a -> NumDomain2 (Full a)

Add2 :: Num a => NumDomain2 (a :-> a :-> Full a)

type Expr2 a = ASTF NumDomain2 a

lit2 :: Int -> Expr2 Int

lit2 a = Symbol (Lit2 a)

add2 :: Expr2 Int -> Expr2 Int -> Expr2 Int

add2 x y = Symbol Add2 :$: x :$: y

eval2 :: Expr2 a -> a

eval2 (Symbol (Lit2 a)) = a

eval2 (Symbol Add2 :$: a :$: b) = eval2 a + eval2 bThe underlying representation does not affect the user interface:

*Main> eval1 (lit1 4 `add1` lit1 5)

9

*Main> eval2 (lit2 4 `add2` lit2 5)

9

AST?Reason 1: Generic traversals

countSyms :: AST dom a -> Int

countSyms (Symbol _) = 1

countSyms (f :$: a) = countSyms f + countSyms a*Main> countSyms (lit2 4 `add2` lit2 5)

3

ASTReason 1: Generic traversals

Use type classes to make generic traversals aware of certain symbols:

class HasAddition dom

where

isAdd :: dom a -> Bool

instance HasAddition NumDomain2

where

isAdd Add2 = True

isAdd _ = False

countAdd :: HasAddition dom => AST dom a -> Int

countAdd (Symbol a) | isAdd a = 1

countAdd (Symbol _) = 0

countAdd (f :$: a) = countAdd f + countAdd aASTReason 2: Extensible symbol domains

Higher-kinded version of the Either type constructor:

data dom1 :+: dom2 :: * -> *

where

InjectL :: dom1 a -> (dom1 :+: dom2) a

InjectR :: dom2 a -> (dom1 :+: dom2) a

infixr :+:ASTReason 2: Extensible symbol domains

Splitting the domain:

data Lit3 a where Lit3 :: Int -> Lit3 (Full Int)

data Add3 a where Add3 :: Add3 (Int :-> Int :-> Full Int)

type NumDomain3 = Lit3 :+: Add3

type Expr3 a = ASTF NumDomain3 a

lit3 :: Int -> ASTF NumDomain3 Int

lit3 a = Symbol (InjectL (Lit3 a))

add3 :: ASTF NumDomain3 Int

-> ASTF NumDomain3 Int

-> ASTF NumDomain3 Int

add3 x y = Symbol (InjectR Add3) :$: x :$: yASTReason 2: Extensible symbol domains

Use type classes to make the domain abstract:

lit3 :: (Lit3 :<: dom) => Int -> ASTF dom Int

add3 :: (Add3 :<: dom) =>

ASTF dom Int -> ASTF dom Int -> ASTF dom Int

countAdd :: (Add3 :<: dom) => AST dom a -> Int

eval :: Eval dom => AST dom a -> a(:<:), Eval and many other type classes dealing with abstract domainsparallelparallelAbstract syntax symbol:

data Array a

where

Parallel :: Type a => Array

( Length -- Number of elements

:-> (Index -> a) -- Index projection

:-> Full [a] -- Result

)

-- Other array constructs omittedparallelAbstract syntax symbol:

data Array a

where

Parallel :: Type a => Array

( Length -- Number of elements

:-> (Index -> a) -- Index projection

:-> Full [a] -- Result

)

-- Other array constructs omittedUser interface (using type-class magic from Syntactic):

parallel :: Type a =>

Data Length -> (Data Index -> Data a) -> Data [a]

parallel = sugarSym ParallelparallelSemantics:

instance IsSymbol Array where

toSym Parallel = Sym

"parallel"

(\len ixf -> genericTake len $ map ixf [0..])parallelSemantics:

instance IsSymbol Array where

toSym Parallel = Sym

"parallel"

(\len ixf -> genericTake len $ map ixf [0..])Additionally:

parallelSummary: declarative implementation of parallel in ≈ 40 LOC

parallelSummary: declarative implementation of parallel in ≈ 40 LOC

What do we get?

parallelSummary: declarative implementation of parallel in ≈ 40 LOC

What do we get?

Feldspar expression type:

newtype Data a = Data { unData :: AST FeldDomainAll (Full a) }Feldspar expression type:

newtype Data a = Data { unData :: AST FeldDomainAll (Full a) }The symbol domain:

type FeldDomainAll = HODomain TypeCtx FeldDomain

type FeldDomain

=

-- Generic constructs (from Syntactic):

Decor SourceInfo1 (Identity TypeCtx)

:+: Condition TypeCtx

:+: Let TypeCtx TypeCtx

:+: Literal TypeCtx

:+: Select TypeCtx

:+: Tuple TypeCtx

-- Feldspar-specific constructs:

:+: Array

:+: BITS

:+: COMPLEX

:+: Conversion

:+: EQ

:+: Error

:+: FLOATING

:+: FRACTIONAL

:+: INTEGRAL

:+: Logic

:+: Loop

:+: LoopM Mut

:+: MONAD Mut

:+: Mutable

:+: MutableArray

:+: MutableReference

:+: NUM

:+: ORD

:+: PropSize

:+: Trace

:+: MutableToPure

:+: ConditionM Muttype FeldDomain

=

...

:+: Array

:+: BITS

:+: COMPLEX

:+: Conversion

:+: EQ

...The different “features” (e.g. Array) are developed independently and assembled “in the last minute”

Feldspar (upcoming version 0.5 is based on Syntactic):

http://hackage.haskell.org/package/feldspar-language

http://hackage.haskell.org/package/feldspar-compiler

Syntactic:

http://hackage.haskell.org/package/syntactic

> cabal unpack syntactic

Downloading syntactic-0.7...

Unpacking to syntactic-0.7/

> cd syntactic-0.7/Examples/NanoFeldspar/

The Resource-AWare Functional Programming project (RAWFP) invites to a Feldspar hacking session

Feldspar-related masters theses

Hudak. Modular Domain Specific Languages and Tools.

In ICSR ’98: Proceedings of the 5th International Conference on Software Reuse. 1998. IEEE Computer Society.

Elliott, Finne, de Moor. Compiling Embedded Languages.

Journal of Functional Programming. 2003. Cambridge University Press.